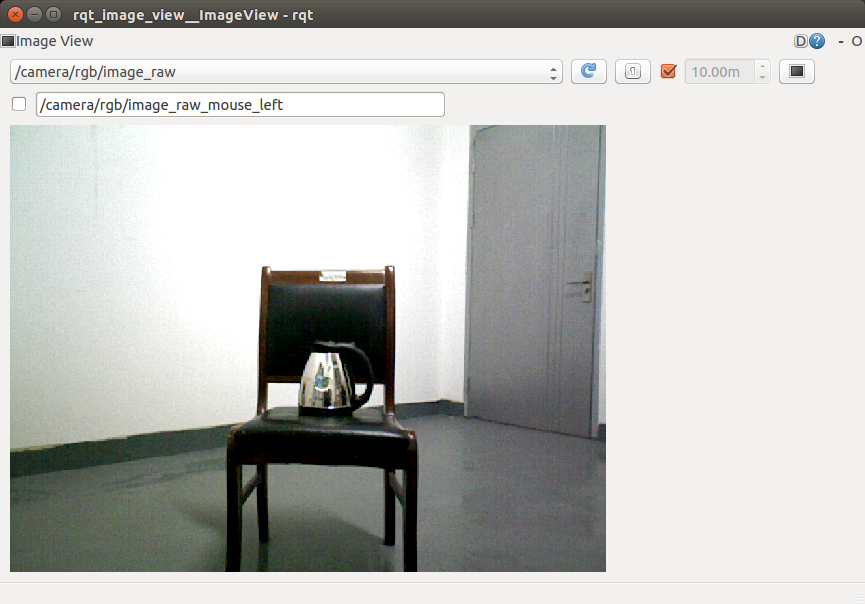

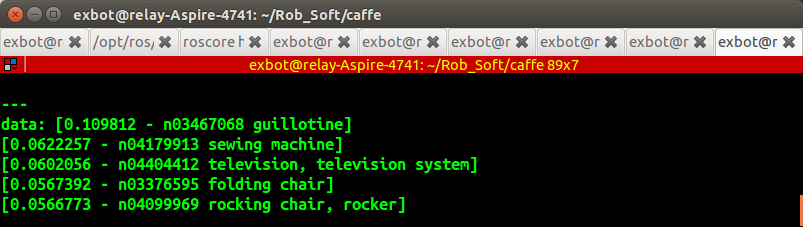

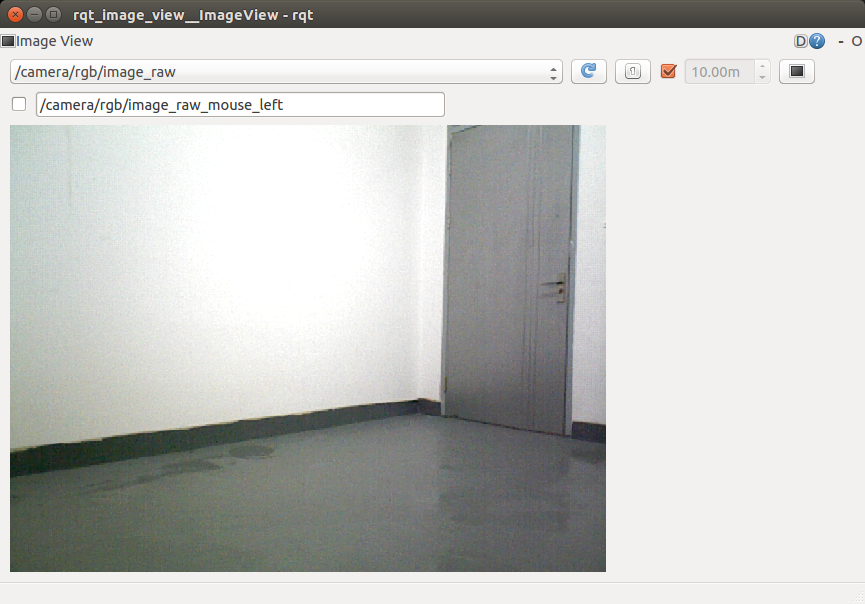

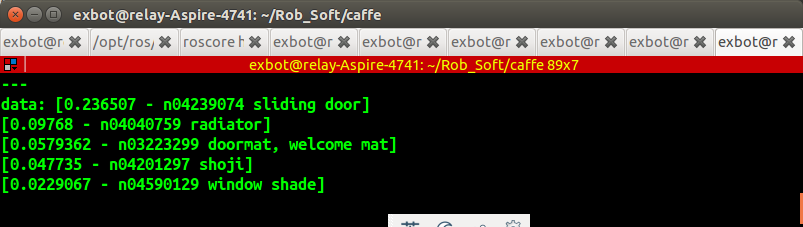

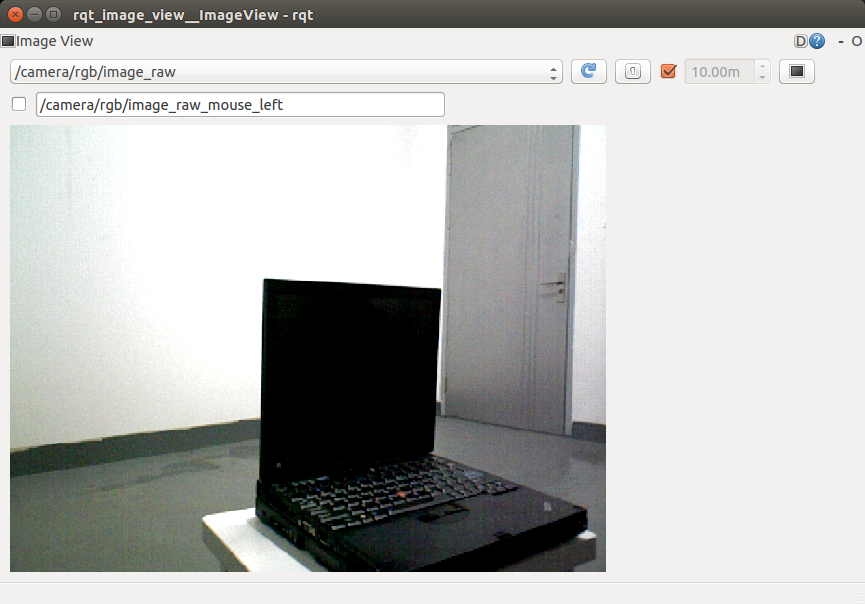

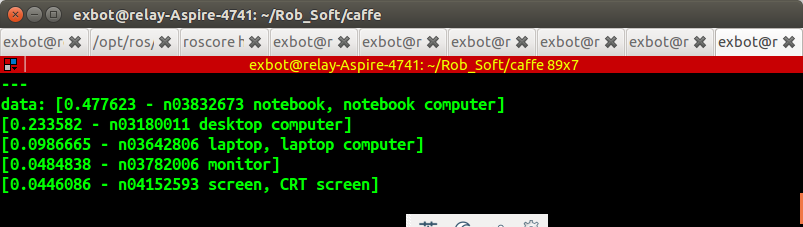

ROS + Caffe: this post uses object recognition as the example. How does a robot know what is in its environment?

[0.0567392 - n03376595 folding chair]

[0.0566773 - n04099969 rocking chair, rocker]

[0.236507 - n04239074 sliding door]

[0.477623 - n03832673 notebook, notebook computer]

[0.233582 - n03180011 desktop computer]

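Caffe runs inside ROS and publishes its inferences on a topic as probabilities per object class, for example chairs, a door, and a notebook computer.

How is this implemented?

First, install Caffe from the Berkeley Vision and Learning Center (BVLC). There are plenty of tutorials online for Ubuntu 14.04 and 16.04, so only the core steps are listed here.

Most importantly, read the official documentation: http://caffe.berkeleyvision.org/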

$ sudo apt-get install libatlas-dev libatlas-base-dev

$ sudo apt-get install libprotobuf-dev libleveldb-dev libsnappy-dev libopencv-dev libhdf5-serial-dev protobuf-compiler

$ sudo apt-get install --no-install-recommends libboost-all-dev

$ sudo apt-get install libboost-all-dev

$ sudo apt-get install libgflags-dev libgoogle-glog-dev liblmdb-dev

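Download the latest Caffe from GitHub: https://github.com/BVLC/caffe

Make sure the libraries are configured correctly! Caffe can be built against either OpenCV 2 or OpenCV 3, but what happens if the version used at build time differs from the one picked up at run time?

Short answer: Segmentation fault (core dumped)! Build with OpenCV 3 and run with OpenCV 2, and it is bound to crash. The same care applies to CUDA, ATLAS, OpenCV, and the other dependencies.

The build itself is fairly simple, and the tests usually pass without trouble.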

cp Makefile.config.example Makefile.config

# Adjust Makefile.config (for example, if using Anaconda Python, or if cuDNN is desired)

make all

make test

make runtest

Note: for a CPU-only build, edit Makefile.config as follows:

cd [CATKIN_WS]/src/ros_caffe/caffe

cp Makefile.config.example Makefile.config

$ vi Makefile.config

For CPU-only Caffe, uncomment CPU_ONLY := 1 in Makefile.config.

$ make all ; make install

After the build finishes, test it ;-) make runtest

ros_caffe example: https://github.com/tzutalin/ros_caffe

Mind where the Caffe library lives: set Caffe's include and lib paths in CMakeLists.txt.

set(CAFFE_INCLUDEDIR caffe/include caffe/install/include)

set(CAFFE_LINK_LIBRARAY caffe/lib)

$ cd [CATKIN_WS]

$ catkin_make

$ source ./devel/setup.bash

If the build fails, track down the problem from the error messages. OK, that completes the build.

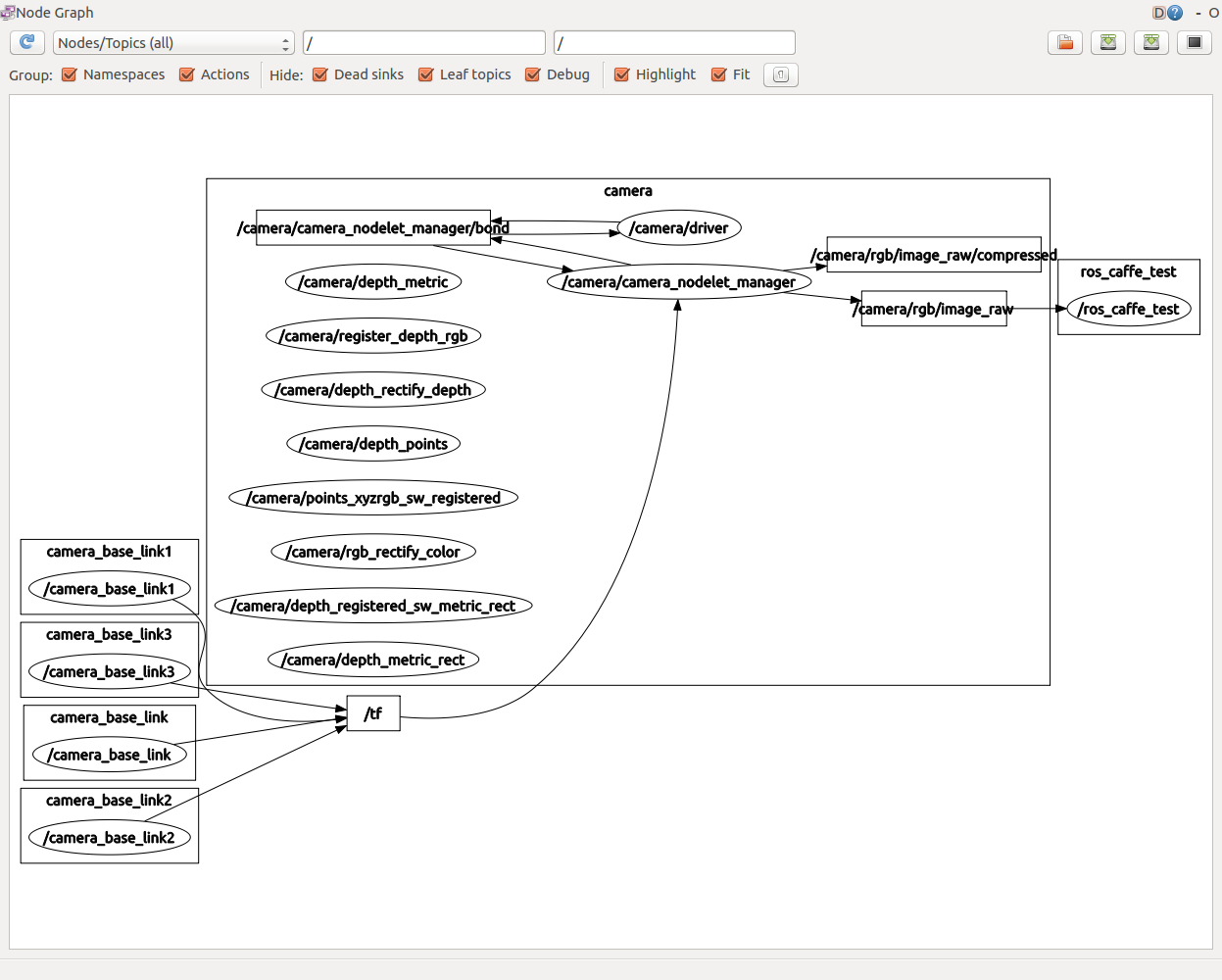

After downloading the model, run each of the following in its own terminal:

$ roscore

$ roslaunch turtlebot_bringup 3dsensor.launch

or

$ roslaunch astra_launch astra.launch

$ rosrun ros_caffe ros_caffe_test

If you see output like the following, everything is working: the node is running and producing recognition results.

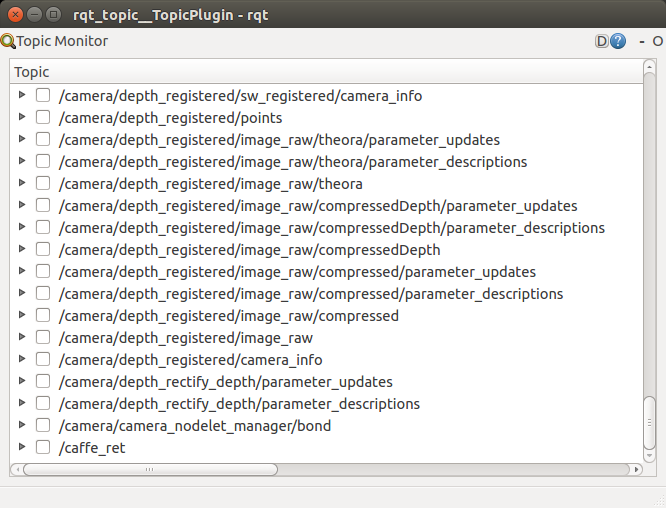

ROS Topics

Publishes the classification result on:

/caffe_ret

Receives images from:

/camera/rgb/image_raw
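The string published on /caffe_ret is a series of lines of the form `[confidence - label]`, as in the sample output at the top of this post. A consumer has to split those lines back into pairs. The following is a minimal sketch of that step using only the standard library; the function name `ParseCaffeRet` is hypothetical and not part of ros_caffe, and the code assumes the exact `[value - label]` layout produced by `publishRet`.

```cpp
#include <sstream>
#include <string>
#include <utility>
#include <vector>

// (label, confidence), mirroring the Prediction typedef in Classifier.h.
typedef std::pair<std::string, float> Prediction;

// Hypothetical helper: parse a payload made of lines such as
// "[0.477623 - n03832673 notebook, notebook computer]".
// Lines that do not match this layout are skipped.
std::vector<Prediction> ParseCaffeRet(const std::string& payload) {
  std::vector<Prediction> out;
  std::istringstream lines(payload);
  std::string line;
  const std::string sep = " - ";
  while (std::getline(lines, line)) {
    // Require the surrounding brackets.
    if (line.size() < 2 || line[0] != '[' || line[line.size() - 1] != ']')
      continue;
    const std::string body = line.substr(1, line.size() - 2);
    // The first " - " separates the confidence from the label.
    const std::string::size_type pos = body.find(sep);
    if (pos == std::string::npos) continue;
    std::istringstream num(body.substr(0, pos));
    float confidence = 0.0f;
    if (!(num >> confidence)) continue;
    out.push_back(std::make_pair(body.substr(pos + sep.size()), confidence));
  }
  return out;
}
```

In a subscriber callback for /caffe_ret, the `data` field of the received `std_msgs::String` would be passed to this function. A dedicated message type, as the TODO in ros_caffe_test.cpp suggests, would make this parsing unnecessary.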

Classifier.h

#ifndef CLASSIFIER_H

#define CLASSIFIER_H

#include <algorithm>

#include <fstream>

#include <iostream>

#include <sstream>

#include <string>

#include <utility>

#include <vector>

#include <caffe/caffe.hpp>

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/imgproc/imgproc.hpp>

using namespace caffe;

using std::string;

/* Pair (label, confidence) representing a prediction. */

typedef std::pair<string, float> Prediction;

class Classifier {

public:

Classifier(const string& model_file,

const string& trained_file,

const string& mean_file,

const string& label_file);

std::vector<Prediction> Classify(const cv::Mat& img, int N = 5);

private:

void SetMean(const string& mean_file);

std::vector<float> Predict(const cv::Mat& img);

void WrapInputLayer(std::vector<cv::Mat>* input_channels);

void Preprocess(const cv::Mat& img,

std::vector<cv::Mat>* input_channels);

private:

shared_ptr<Net<float> > net_;

cv::Size input_geometry_;

int num_channels_;

cv::Mat mean_;

std::vector<string> labels_;

};

Classifier::Classifier(const string& model_file,

const string& trained_file,

const string& mean_file,

const string& label_file) {

#ifdef CPU_ONLY

Caffe::set_mode(Caffe::CPU);

#else

Caffe::set_mode(Caffe::GPU);

#endif

/* Load the network. */

net_.reset(new Net<float>(model_file, TEST));

net_->CopyTrainedLayersFrom(trained_file);

Blob<float>* input_layer = net_->input_blobs()[0];

num_channels_ = input_layer->channels();

input_geometry_ = cv::Size(input_layer->width(), input_layer->height());

/* Load the binaryproto mean file. */

SetMean(mean_file);

/* Load labels. */

std::ifstream labels(label_file.c_str());

string line;

while (std::getline(labels, line))

labels_.push_back(string(line));

Blob<float>* output_layer = net_->output_blobs()[0];

}

static bool PairCompare(const std::pair<float, int>& lhs,

const std::pair<float, int>& rhs) {

return lhs.first > rhs.first;

}

/* Return the indices of the top N values of vector v. */

static std::vector<int> Argmax(const std::vector<float>& v, int N) {

std::vector<std::pair<float, int> > pairs;

for (size_t i = 0; i < v.size(); ++i)

pairs.push_back(std::make_pair(v[i], i));

std::partial_sort(pairs.begin(), pairs.begin() + N, pairs.end(), PairCompare);

std::vector<int> result;

for (int i = 0; i < N; ++i)

result.push_back(pairs[i].second);

return result;

}

/* Return the top N predictions. */

std::vector<Prediction> Classifier::Classify(const cv::Mat& img, int N) {

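std::vector<float> output = Predict(img);

/* Clamp N so partial_sort in Argmax never runs past the end of the output. */

N = std::min<int>(output.size(), N);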

std::vector<int> maxN = Argmax(output, N);

std::vector<Prediction> predictions;

for (int i = 0; i < N; ++i) {

int idx = maxN[i];

predictions.push_back(std::make_pair(labels_[idx], output[idx]));

}

return predictions;

}

/* Load the mean file in binaryproto format. */

void Classifier::SetMean(const string& mean_file) {

BlobProto blob_proto;

ReadProtoFromBinaryFileOrDie(mean_file.c_str(), &blob_proto);

/* Convert from BlobProto to Blob<float> */

Blob<float> mean_blob;

mean_blob.FromProto(blob_proto);

/* The format of the mean file is planar 32-bit float BGR or grayscale. */

std::vector<cv::Mat> channels;

float* data = mean_blob.mutable_cpu_data();

for (int i = 0; i < num_channels_; ++i) {

/* Extract an individual channel. */

cv::Mat channel(mean_blob.height(), mean_blob.width(), CV_32FC1, data);

channels.push_back(channel);

data += mean_blob.height() * mean_blob.width();

}

/* Merge the separate channels into a single image. */

cv::Mat mean;

cv::merge(channels, mean);

/* Compute the global mean pixel value and create a mean image

* filled with this value. */

cv::Scalar channel_mean = cv::mean(mean);

mean_ = cv::Mat(input_geometry_, mean.type(), channel_mean);

}

std::vector<float> Classifier::Predict(const cv::Mat& img) {

Blob<float>* input_layer = net_->input_blobs()[0];

input_layer->Reshape(1, num_channels_,

input_geometry_.height, input_geometry_.width);

/* Forward dimension change to all layers. */

net_->Reshape();

std::vector<cv::Mat> input_channels;

WrapInputLayer(&input_channels);

Preprocess(img, &input_channels);

net_->ForwardPrefilled();

/* Copy the output layer to a std::vector */

Blob<float>* output_layer = net_->output_blobs()[0];

const float* begin = output_layer->cpu_data();

const float* end = begin + output_layer->channels();

return std::vector<float>(begin, end);

}

/* Wrap the input layer of the network in separate cv::Mat objects

* (one per channel). This way we save one memcpy operation and we

* don't need to rely on cudaMemcpy2D. The last preprocessing

* operation will write the separate channels directly to the input

* layer. */

void Classifier::WrapInputLayer(std::vector<cv::Mat>* input_channels) {

Blob<float>* input_layer = net_->input_blobs()[0];

int width = input_layer->width();

int height = input_layer->height();

float* input_data = input_layer->mutable_cpu_data();

for (int i = 0; i < input_layer->channels(); ++i) {

cv::Mat channel(height, width, CV_32FC1, input_data);

input_channels->push_back(channel);

input_data += width * height;

}

}

void Classifier::Preprocess(const cv::Mat& img,

std::vector<cv::Mat>* input_channels) {

/* Convert the input image to the input image format of the network. */

cv::Mat sample;

if (img.channels() == 3 && num_channels_ == 1)

cv::cvtColor(img, sample, CV_BGR2GRAY);

else if (img.channels() == 4 && num_channels_ == 1)

cv::cvtColor(img, sample, CV_BGRA2GRAY);

else if (img.channels() == 4 && num_channels_ == 3)

cv::cvtColor(img, sample, CV_BGRA2BGR);

else if (img.channels() == 1 && num_channels_ == 3)

cv::cvtColor(img, sample, CV_GRAY2BGR);

else

sample = img;

cv::Mat sample_resized;

if (sample.size() != input_geometry_)

cv::resize(sample, sample_resized, input_geometry_);

else

sample_resized = sample;

cv::Mat sample_float;

if (num_channels_ == 3)

sample_resized.convertTo(sample_float, CV_32FC3);

else

sample_resized.convertTo(sample_float, CV_32FC1);

cv::Mat sample_normalized;

cv::subtract(sample_float, mean_, sample_normalized);

/* This operation will write the separate BGR planes directly to the

* input layer of the network because it is wrapped by the cv::Mat

* objects in input_channels. */

cv::split(sample_normalized, *input_channels);

}

#endif
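The `Argmax` helper in the header picks the top N scores with `std::partial_sort`, which is only valid when N does not exceed the number of scores. The sketch below isolates that technique with the bound checked explicitly. It is a standalone illustration that does not depend on Caffe or OpenCV, and the names `TopIndices` and `ScoreGreater` are hypothetical.

```cpp
#include <algorithm>
#include <cstddef>
#include <utility>
#include <vector>

// Order (score, index) pairs by descending score.
static bool ScoreGreater(const std::pair<float, int>& lhs,
                         const std::pair<float, int>& rhs) {
  return lhs.first > rhs.first;
}

// Return the indices of the n highest scores, best first.
// n is clamped to the range [0, scores.size()].
std::vector<int> TopIndices(const std::vector<float>& scores, int n) {
  std::vector<std::pair<float, int> > pairs;
  pairs.reserve(scores.size());
  for (std::size_t i = 0; i < scores.size(); ++i)
    pairs.push_back(std::make_pair(scores[i], static_cast<int>(i)));

  const int count =
      std::max(0, std::min(n, static_cast<int>(pairs.size())));
  // Only the first `count` elements end up sorted; the rest stay unordered.
  std::partial_sort(pairs.begin(), pairs.begin() + count, pairs.end(),
                    ScoreGreater);

  std::vector<int> result;
  for (int i = 0; i < count; ++i) result.push_back(pairs[i].second);
  return result;
}
```

Partial sorting avoids ordering all 1000 ImageNet scores when only the best five are reported.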

ros_caffe_test.cpp

#include <ros/ros.h>

#include <ros/package.h>

#include <std_msgs/String.h>

#include <image_transport/image_transport.h>

#include <cv_bridge/cv_bridge.h>

#include <iomanip>

#include "Classifier.h"

const std::string RECEIVE_IMG_TOPIC_NAME = "camera/rgb/image_raw";

const std::string PUBLISH_RET_TOPIC_NAME = "/caffe_ret";

Classifier* classifier;

std::string model_path;

std::string weights_path;

std::string mean_file;

std::string label_file;

std::string image_path;

ros::Publisher gPublisher;

void publishRet(const std::vector<Prediction>& predictions);

void imageCallback(const sensor_msgs::ImageConstPtr& msg) {

try {

cv_bridge::CvImagePtr cv_ptr = cv_bridge::toCvCopy(msg, "bgr8");

//cv::imwrite("rgb.png", cv_ptr->image);

cv::Mat img = cv_ptr->image;

std::vector<Prediction> predictions = classifier->Classify(img);

publishRet(predictions);

} catch (cv_bridge::Exception& e) {

ROS_ERROR("Could not convert from '%s' to 'bgr8'.", msg->encoding.c_str());

}

}

// TODO: Define a msg or create a service

// Try to receive : $rostopic echo /caffe_ret

void publishRet(const std::vector<Prediction>& predictions) {

std_msgs::String msg;

std::stringstream ss;

for (size_t i = 0; i < predictions.size(); ++i) {

Prediction p = predictions[i];

ss << "[" << p.second << " - " << p.first << "]" << std::endl;

}

msg.data = ss.str();

gPublisher.publish(msg);

}

int main(int argc, char **argv) {

ros::init(argc, argv, "ros_caffe_test");

ros::NodeHandle nh;

image_transport::ImageTransport it(nh);

// Subscribe to incoming images on RECEIVE_IMG_TOPIC_NAME

image_transport::Subscriber sub = it.subscribe(RECEIVE_IMG_TOPIC_NAME, 1, imageCallback);

gPublisher = nh.advertise<std_msgs::String>(PUBLISH_RET_TOPIC_NAME, 100);

const std::string ROOT_SAMPLE = ros::package::getPath("ros_caffe");

model_path = ROOT_SAMPLE + "/data/deploy.prototxt";

weights_path = ROOT_SAMPLE + "/data/bvlc_reference_caffenet.caffemodel";

mean_file = ROOT_SAMPLE + "/data/imagenet_mean.binaryproto";

label_file = ROOT_SAMPLE + "/data/synset_words.txt";

image_path = ROOT_SAMPLE + "/data/cat.jpg";

classifier = new Classifier(model_path, weights_path, mean_file, label_file);

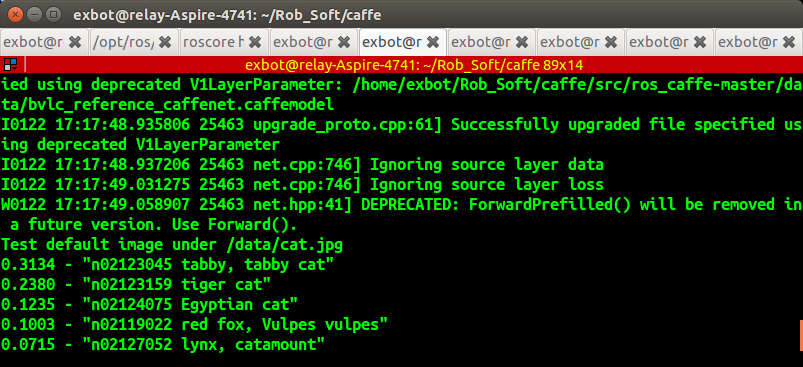

// Test data/cat.jpg

cv::Mat img = cv::imread(image_path, -1);

std::vector<Prediction> predictions = classifier->Classify(img);

/* Print the top N predictions. */

std::cout << "Test default image under /data/cat.jpg" << std::endl;

for (size_t i = 0; i < predictions.size(); ++i) {

Prediction p = predictions[i];

std::cout << std::fixed << std::setprecision(4) << p.second << " - \"" << p.first << "\"" << std::endl;

}

publishRet(predictions);

ros::spin();

delete classifier;

ros::shutdown();

return 0;

}

Troubleshooting: things rarely go that smoothly.

I0122 16:50:22.870820 20921 upgrade_proto.cpp:44] Attempting to upgrade input file specified using deprecated transformation parameters: /home/exbot/Rob_Soft/caffe/src/ros_caffe-master/data/bvlc_reference_caffenet.caffemodel

I0122 16:50:22.870877 20921 upgrade_proto.cpp:47] Successfully upgraded file specified using deprecated data transformation parameters.

W0122 16:50:22.870887 20921 upgrade_proto.cpp:49] Note that future Caffe releases will only support transform_param messages for transformation fields.

I0122 16:50:22.870893 20921 upgrade_proto.cpp:53] Attempting to upgrade input file specified using deprecated V1LayerParameter: /home/exbot/Rob_Soft/caffe/src/ros_caffe-master/data/bvlc_reference_caffenet.caffemodel

I0122 16:50:23.219712 20921 upgrade_proto.cpp:61] Successfully upgraded file specified using deprecated V1LayerParameter

I0122 16:50:23.221462 20921 net.cpp:746] Ignoring source layer data

I0122 16:50:23.316156 20921 net.cpp:746] Ignoring source layer loss

Segmentation fault (core dumped)

If this happens, the ROS node needs to be debugged with gdb. First enable core dumps:

$ ulimit -a

$ ulimit -c unlimited

$ echo 1 | sudo tee /proc/sys/kernel/core_uses_pid
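The `ulimit -c` setting above applies to the shell that launches the node. As an alternative, a process can raise its own core-file limit at startup through the POSIX `getrlimit`/`setrlimit` calls. The sketch below is an assumption about how one might do this and is not part of ros_caffe; the function name `EnableCoreDumps` is hypothetical. Unlike `ulimit -c unlimited`, it only raises the soft limit up to the existing hard limit, which needs no extra privileges.

```cpp
#include <sys/resource.h>

// Hypothetical helper: raise the soft core-file size limit of the current
// process to its hard limit. Returns true on success.
bool EnableCoreDumps() {
  struct rlimit lim;
  if (getrlimit(RLIMIT_CORE, &lim) != 0) return false;
  lim.rlim_cur = lim.rlim_max;  // soft limit := hard limit
  return setrlimit(RLIMIT_CORE, &lim) == 0;
}
```

Calling this at the top of `main`, before `ros::init`, would let the node write a core file even when it is started from a shell where `ulimit -c` is 0, provided the hard limit allows it.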

Then inspect the crash from the core file:

$ gdb ros_caffe_test core.20921

GNU gdb (Ubuntu 7.7.1-0ubuntu5~14.04.2) 7.7.1

Copyright (C) 2014 Free Software Foundation, Inc.

License GPLv3+: GNU GPL version 3 or later <http://gnu.org/licenses/gpl.html>

This is free software: you are free to change and redistribute it.

There is NO WARRANTY, to the extent permitted by law. Type "show copying"

and "show warranty" for details.

This GDB was configured as "x86_64-linux-gnu".

Type "show configuration" for configuration details.

For bug reporting instructions, please see:

<http://www.gnu.org/software/gdb/bugs/>.

Find the GDB manual and other documentation resources online at:

<http://www.gnu.org/software/gdb/documentation/>.

For help, type "help".

Type "apropos word" to search for commands related to "word"...

Reading symbols from ros_caffe_test...(no debugging symbols found)...done.

[New LWP 20921]

[New LWP 20934]

[New LWP 20933]

[New LWP 20939]

[New LWP 20932]

[New LWP 20930]

[Thread debugging using libthread_db enabled]

Using host libthread_db library "/lib/x86_64-linux-gnu/libthread_db.so.1".

Core was generated by `/home/exbot/Rob_Soft/caffe/devel/lib/ros_caffe/ros_caffe_test'.

Program terminated with signal SIGSEGV, Segmentation fault.

#0 0x00007fc51da27e0c in cv::merge(cv::_InputArray const&, cv::_OutputArray const&) ()

from /usr/lib/x86_64-linux-gnu/libopencv_core.so.2.4

(gdb)

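The likely conclusion: the OpenCV libraries are mixed up (the crash is inside cv::merge in libopencv_core.so.2.4). Adjust the environment variables so that the intended OpenCV version is the one loaded at run time.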
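One way to confirm which OpenCV shared objects a process actually loaded is to read its memory map. The sketch below scans text in the Linux `/proc/<pid>/maps` format for paths containing a given substring. It is a Linux-only diagnostic under stated assumptions and is not part of ros_caffe; the names `MappedLibraries` and `LoadedOpenCvLibraries` are hypothetical.

```cpp
#include <fstream>
#include <set>
#include <sstream>
#include <string>

// Collect the distinct file paths in /proc/<pid>/maps-style text whose path
// contains `needle` (for example "libopencv").
std::set<std::string> MappedLibraries(const std::string& maps_text,
                                      const std::string& needle) {
  std::set<std::string> libs;
  std::istringstream maps(maps_text);
  std::string line;
  while (std::getline(maps, line)) {
    // The pathname is the last field; for mapped files it starts with '/'.
    // The earlier fields (address range, permissions, offset, device,
    // inode) never contain a slash.
    const std::string::size_type slash = line.find('/');
    if (slash == std::string::npos) continue;
    const std::string path = line.substr(slash);
    if (path.find(needle) != std::string::npos) libs.insert(path);
  }
  return libs;
}

// Convenience wrapper for the current process (Linux only).
std::set<std::string> LoadedOpenCvLibraries() {
  std::ifstream file("/proc/self/maps");
  std::stringstream buffer;
  buffer << file.rdbuf();
  return MappedLibraries(buffer.str(), "libopencv");
}
```

If the returned set contains both `.so.2.4` and `.so.3.x` variants of the same library, or a version other than the one Caffe was built against, that matches the mixed-library diagnosis above.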
