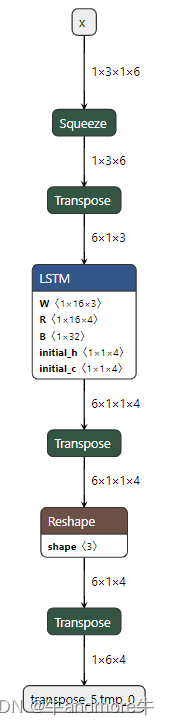

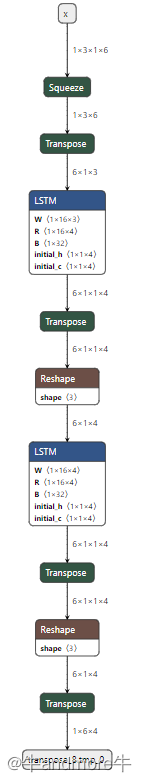

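The whole process consists of defining the model, exporting it, converting it to ONNX, and optimizing the ONNX model. The last ONNX file produced (`*_sim_opt.onnx`) is the one we actually need, and you can open it to inspect its graph. This part covers generating the model in Paddle and converting it to ONNX; for more on the full workflow, please refer to the blog post.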

```python
import os
import sys

import numpy as np
import onnx
import onnxoptimizer
import onnxruntime
import paddle
from onnxsim import simplify
from paddle import nn
```


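With `time_major=False` the LSTM expects batch-major input `[batch, time, features]`, which is the same behavior as PyTorch's `batch_first=True`.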
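The models turn a 4-D input `[batch, channels, height, width]` into an LSTM sequence by dropping the height axis (size 1) and using the width as the time axis. A NumPy sketch of the same reshaping, where `np.squeeze`/`np.transpose` stand in for `paddle.squeeze`/`paddle.transpose` (it illustrates the shapes only and is not Paddle code):

```python
import numpy as np

# Dummy input with the same shape as infer_shape: [batch, channels, height, width]
x = np.random.random((1, 3, 1, 6)).astype(np.float32)

# Drop the height axis (size 1): [batch, channels, width]
x2 = np.squeeze(x, axis=2)

# Batch-major layout for time_major=False: [batch, time, features]
x_batch = np.transpose(x2, (0, 2, 1))

# Time-major layout for time_major=True: [time, batch, features]
x_time = np.transpose(x2, (2, 0, 1))

print(x2.shape, x_batch.shape, x_time.shape)  # (1, 3, 6) (1, 6, 3) (6, 1, 3)

# Same values in both layouts; only the batch and time axes are swapped
assert np.array_equal(np.transpose(x_batch, (1, 0, 2)), x_time)
```

The width of 6 becomes 6 time steps, and the 3 channels become the LSTM input size (`in_channels=3`).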

```python
# Single-layer, batch-major LSTM
class One_LSTM_batch(nn.Layer):
    def __init__(self, in_channels=3, out_channels=4):
        super().__init__()
        self.rnn = nn.LSTM(in_channels, out_channels, time_major=False)

    def forward(self, x):
        # b, c, h, w = x.shape
        # x1 = paddle.reshape(x, [b, c, h * w])
        # Initial states: [num_layers * num_directions, batch, hidden]
        h0 = paddle.zeros((1, 1, 4))
        c0 = paddle.zeros((1, 1, 4))
        x2 = paddle.squeeze(x, 2)               # [1, 3, 6]
        x3 = paddle.transpose(x2, (0, 2, 1))    # [1, 6, 3] = [batch, time, features]
        out, _ = self.rnn(x3, (h0, c0))
        return out  # shape [1, 6, 4]


model_path = "paddle/One_LSTM_batch"
model = One_LSTM_batch()
model.eval()
infer_shape = [1, 3, 1, 6]
input_spec = paddle.static.InputSpec(shape=infer_shape, dtype="float32")
# Paddle -> ONNX (writes model_path + ".onnx")
paddle.onnx.export(model, model_path, input_spec=[input_spec],
                   opset_version=11, enable_onnx_checker=True)

# Simplify with onnx-simplifier (writes *_sim.onnx)
model = onnx.load(model_path + ".onnx")
model_sim, check = simplify(model)
assert check, "simplified onnx model could not be validated"
save_path = model_path + "_sim.onnx"
onnx.save(model_sim, save_path)

# Drop initializers from the graph inputs so they are treated as constants
model = onnx.load(save_path)
if model.ir_version < 4:
    print("Model with ir_version below 4 requires initializers to be included in graph inputs")
    sys.exit()
inputs = model.graph.input
name_to_input = {graph_input.name: graph_input for graph_input in inputs}
for initializer in model.graph.initializer:
    if initializer.name in name_to_input:
        inputs.remove(name_to_input[initializer.name])

# Optimize with onnxoptimizer (writes *_sim_opt.onnx)
passes = ["extract_constant_to_initializer", "eliminate_unused_initializer"]
optimized_model = onnxoptimizer.optimize(model, passes)
save_path = model_path + "_sim_opt.onnx"
onnx.save(optimized_model, save_path)
```

```python
# Two-layer, batch-major LSTM
class Two_LSTM_batch(nn.Layer):
    def __init__(self, in_channels=3, out_channels=4):
        super().__init__()
        self.rnn = nn.LSTM(in_channels, out_channels, time_major=False, num_layers=2)

    def forward(self, x):
        # b, c, h, w = x.shape
        # x1 = paddle.reshape(x, [b, c, h * w])
        # Initial states: [num_layers * num_directions, batch, hidden] = [2, 1, 4]
        h0 = paddle.zeros((2, 1, 4))
        c0 = paddle.zeros((2, 1, 4))
        x2 = paddle.squeeze(x, 2)               # [1, 3, 6]
        x3 = paddle.transpose(x2, (0, 2, 1))    # [1, 6, 3] = [batch, time, features]
        out, _ = self.rnn(x3, (h0, c0))
        return out  # shape [1, 6, 4]


model_path = "paddle/Two_LSTM_batch"
model = Two_LSTM_batch()
model.eval()
infer_shape = [1, 3, 1, 6]
input_spec = paddle.static.InputSpec(shape=infer_shape, dtype="float32")
# Paddle -> ONNX (writes model_path + ".onnx")
paddle.onnx.export(model, model_path, input_spec=[input_spec],
                   opset_version=11, enable_onnx_checker=True)

# Simplify with onnx-simplifier (writes *_sim.onnx)
model = onnx.load(model_path + ".onnx")
model_sim, check = simplify(model)
assert check, "simplified onnx model could not be validated"
save_path = model_path + "_sim.onnx"
onnx.save(model_sim, save_path)

# Drop initializers from the graph inputs so they are treated as constants
model = onnx.load(save_path)
if model.ir_version < 4:
    print("Model with ir_version below 4 requires initializers to be included in graph inputs")
    sys.exit()
inputs = model.graph.input
name_to_input = {graph_input.name: graph_input for graph_input in inputs}
for initializer in model.graph.initializer:
    if initializer.name in name_to_input:
        inputs.remove(name_to_input[initializer.name])

# Optimize with onnxoptimizer (writes *_sim_opt.onnx)
passes = ["extract_constant_to_initializer", "eliminate_unused_initializer"]
optimized_model = onnxoptimizer.optimize(model, passes)
save_path = model_path + "_sim_opt.onnx"
onnx.save(optimized_model, save_path)
```

```python
# Two-layer, bidirectional, batch-major LSTM
class Bi_Two_LSTM_batch(nn.Layer):
    def __init__(self, in_channels=3, out_channels=4):
        super().__init__()
        self.rnn = nn.LSTM(in_channels, out_channels, time_major=False,
                           direction="bidirect", num_layers=2)

    def forward(self, x):
        # b, c, h, w = x.shape
        # x1 = paddle.reshape(x, [b, c, h * w])
        # Initial states: [num_layers * num_directions, batch, hidden] = [4, 1, 4]
        h0 = paddle.zeros((4, 1, 4))
        c0 = paddle.zeros((4, 1, 4))
        x2 = paddle.squeeze(x, 2)               # [1, 3, 6]
        x3 = paddle.transpose(x2, (0, 2, 1))    # [1, 6, 3] = [batch, time, features]
        out, _ = self.rnn(x3, (h0, c0))
        return out  # shape [1, 6, 8]: both directions concatenated (2 * 4)


model_path = "paddle/Bi_Two_LSTM_batch"
model = Bi_Two_LSTM_batch()
model.eval()
infer_shape = [1, 3, 1, 6]
input_spec = paddle.static.InputSpec(shape=infer_shape, dtype="float32")
# Paddle -> ONNX (writes model_path + ".onnx")
paddle.onnx.export(model, model_path, input_spec=[input_spec],
                   opset_version=11, enable_onnx_checker=True)

# Simplify with onnx-simplifier (writes *_sim.onnx)
model = onnx.load(model_path + ".onnx")
model_sim, check = simplify(model)
assert check, "simplified onnx model could not be validated"
save_path = model_path + "_sim.onnx"
onnx.save(model_sim, save_path)

# Drop initializers from the graph inputs so they are treated as constants
model = onnx.load(save_path)
if model.ir_version < 4:
    print("Model with ir_version below 4 requires initializers to be included in graph inputs")
    sys.exit()
inputs = model.graph.input
name_to_input = {graph_input.name: graph_input for graph_input in inputs}
for initializer in model.graph.initializer:
    if initializer.name in name_to_input:
        inputs.remove(name_to_input[initializer.name])

# Optimize with onnxoptimizer (writes *_sim_opt.onnx)
passes = ["extract_constant_to_initializer", "eliminate_unused_initializer"]
optimized_model = onnxoptimizer.optimize(model, passes)
save_path = model_path + "_sim_opt.onnx"
onnx.save(optimized_model, save_path)
```

```text
2022-08-03 09:39:41 [INFO] ONNX model generated is valid.
2022-08-03 09:39:41 [INFO] ONNX model saved in paddle/One_LSTM_batch.onnx
2022-08-03 09:39:41 [INFO] ONNX model generated is valid.
2022-08-03 09:39:41 [INFO] ONNX model saved in paddle/Two_LSTM_batch.onnx
2022-08-03 09:39:41 [INFO] ONNX model generated is valid.
2022-08-03 09:39:41 [INFO] ONNX model saved in paddle/Bi_Two_LSTM_batch.onnx
```
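The hard-coded `h0`/`c0` shapes `(1, 1, 4)`, `(2, 1, 4)` and `(4, 1, 4)` follow the initial-state layout documented for Paddle's `nn.LSTM`, `[num_layers * num_directions, batch_size, hidden_size]`. The outputs of the two directions are concatenated on the feature axis, so the bidirectional model returns `[1, 6, 8]` rather than `[1, 6, 4]`. A small helper (hypothetical, not part of Paddle) that computes both shapes:

```python
def lstm_shapes(seq_len, batch, hidden, num_layers=1, bidirectional=False, time_major=False):
    """Return (state_shape, output_shape) for a Paddle-style nn.LSTM.

    state_shape is the shape of each of h0 and c0.
    """
    num_directions = 2 if bidirectional else 1
    # h0 / c0: [num_layers * num_directions, batch, hidden]
    state_shape = (num_layers * num_directions, batch, hidden)
    # Both directions are concatenated on the last (feature) axis
    features = num_directions * hidden
    if time_major:
        output_shape = (seq_len, batch, features)
    else:
        output_shape = (batch, seq_len, features)
    return state_shape, output_shape


# The three batch-major models above: 6 time steps, batch 1, hidden size 4
for name, layers, bidi in [("One", 1, False), ("Two", 2, False), ("Bi_Two", 2, True)]:
    print(name, lstm_shapes(6, 1, 4, num_layers=layers, bidirectional=bidi))
# One ((1, 1, 4), (1, 6, 4))
# Two ((2, 1, 4), (1, 6, 4))
# Bi_Two ((4, 1, 4), (1, 6, 8))
```

Passing `time_major=True` gives the shapes for the time-major models that follow, for example `(6, 1, 8)` for the bidirectional one.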


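With `time_major=True` the LSTM expects time-major input `[time, batch, features]`, which is the same as PyTorch's `batch_first=False`.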
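Only the axis that the recurrence walks along changes between the two layouts. A minimal single-layer LSTM sketched in NumPy (assumptions: gate order `i, f, g, o` and a single bias vector; the random weights are not Paddle's parameters) shows that feeding the same data batch-major or time-major yields the same outputs with the first two axes swapped:

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x_t, h, c, w_ih, w_hh, b):
    """One LSTM step; gates are stacked as i, f, g, o (assumed order)."""
    gates = x_t @ w_ih.T + h @ w_hh.T + b
    i, f, g, o = np.split(gates, 4, axis=-1)
    c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)
    h = sigmoid(o) * np.tanh(c)
    return h, c


def run_time_major(x, h0, c0, *params):
    """x: [time, batch, input] -> outputs [time, batch, hidden]."""
    h, c, outs = h0, c0, []
    for t in range(x.shape[0]):
        h, c = lstm_step(x[t], h, c, *params)
        outs.append(h)
    return np.stack(outs, axis=0)


def run_batch_major(x, h0, c0, *params):
    """x: [batch, time, input] -> outputs [batch, time, hidden]."""
    h, c, outs = h0, c0, []
    for t in range(x.shape[1]):
        h, c = lstm_step(x[:, t], h, c, *params)
        outs.append(h)
    return np.stack(outs, axis=1)


rng = np.random.default_rng(0)
T, B, I, H = 6, 2, 3, 4  # time steps, batch, input size, hidden size
params = (rng.standard_normal((4 * H, I)),  # w_ih
          rng.standard_normal((4 * H, H)),  # w_hh
          rng.standard_normal(4 * H))       # b
h0 = np.zeros((B, H))
c0 = np.zeros((B, H))

x_time = rng.standard_normal((T, B, I))
x_batch = np.transpose(x_time, (1, 0, 2))  # same data, batch-major

out_time = run_time_major(x_time, h0, c0, *params)
out_batch = run_batch_major(x_batch, h0, c0, *params)
print(out_time.shape, out_batch.shape)  # (6, 2, 4) (2, 6, 4)
assert np.allclose(np.transpose(out_batch, (1, 0, 2)), out_time)
```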

```python
# Single-layer, time-major LSTM
class One_LSTM_time(nn.Layer):
    def __init__(self, in_channels=3, out_channels=4):
        super().__init__()
        self.rnn = nn.LSTM(in_channels, out_channels, time_major=True)

    def forward(self, x):
        # b, c, h, w = x.shape
        # x1 = paddle.reshape(x, [b, c, h * w])
        # Initial states: [num_layers * num_directions, batch, hidden]
        h0 = paddle.zeros((1, 1, 4))
        c0 = paddle.zeros((1, 1, 4))
        x2 = paddle.squeeze(x, 2)               # [1, 3, 6]
        x3 = paddle.transpose(x2, (2, 0, 1))    # [6, 1, 3] = [time, batch, features]
        out, _ = self.rnn(x3, (h0, c0))
        return out  # shape [6, 1, 4]


model_path = "paddle/One_LSTM_time"
model = One_LSTM_time()
model.eval()
infer_shape = [1, 3, 1, 6]
input_spec = paddle.static.InputSpec(shape=infer_shape, dtype="float32")
# Paddle -> ONNX (writes model_path + ".onnx")
paddle.onnx.export(model, model_path, input_spec=[input_spec],
                   opset_version=11, enable_onnx_checker=True)

# Simplify with onnx-simplifier (writes *_sim.onnx)
model = onnx.load(model_path + ".onnx")
model_sim, check = simplify(model)
assert check, "simplified onnx model could not be validated"
save_path = model_path + "_sim.onnx"
onnx.save(model_sim, save_path)

# Drop initializers from the graph inputs so they are treated as constants
model = onnx.load(save_path)
if model.ir_version < 4:
    print("Model with ir_version below 4 requires initializers to be included in graph inputs")
    sys.exit()
inputs = model.graph.input
name_to_input = {graph_input.name: graph_input for graph_input in inputs}
for initializer in model.graph.initializer:
    if initializer.name in name_to_input:
        inputs.remove(name_to_input[initializer.name])

# Optimize with onnxoptimizer (writes *_sim_opt.onnx)
passes = ["extract_constant_to_initializer", "eliminate_unused_initializer"]
optimized_model = onnxoptimizer.optimize(model, passes)
save_path = model_path + "_sim_opt.onnx"
onnx.save(optimized_model, save_path)
```

```python
# Two-layer, time-major LSTM
class Two_LSTM_time(nn.Layer):
    def __init__(self, in_channels=3, out_channels=4):
        super().__init__()
        self.rnn = nn.LSTM(in_channels, out_channels, time_major=True, num_layers=2)

    def forward(self, x):
        # b, c, h, w = x.shape
        # x1 = paddle.reshape(x, [b, c, h * w])
        # Initial states: [num_layers * num_directions, batch, hidden] = [2, 1, 4]
        h0 = paddle.zeros((2, 1, 4))
        c0 = paddle.zeros((2, 1, 4))
        x2 = paddle.squeeze(x, 2)               # [1, 3, 6]
        x3 = paddle.transpose(x2, (2, 0, 1))    # [6, 1, 3] = [time, batch, features]
        out, _ = self.rnn(x3, (h0, c0))
        return out  # shape [6, 1, 4]


model_path = "paddle/Two_LSTM_time"
model = Two_LSTM_time()
model.eval()
infer_shape = [1, 3, 1, 6]
input_spec = paddle.static.InputSpec(shape=infer_shape, dtype="float32")
# Paddle -> ONNX (writes model_path + ".onnx")
paddle.onnx.export(model, model_path, input_spec=[input_spec],
                   opset_version=11, enable_onnx_checker=True)

# Simplify with onnx-simplifier (writes *_sim.onnx)
model = onnx.load(model_path + ".onnx")
model_sim, check = simplify(model)
assert check, "simplified onnx model could not be validated"
save_path = model_path + "_sim.onnx"
onnx.save(model_sim, save_path)

# Drop initializers from the graph inputs so they are treated as constants
model = onnx.load(save_path)
if model.ir_version < 4:
    print("Model with ir_version below 4 requires initializers to be included in graph inputs")
    sys.exit()
inputs = model.graph.input
name_to_input = {graph_input.name: graph_input for graph_input in inputs}
for initializer in model.graph.initializer:
    if initializer.name in name_to_input:
        inputs.remove(name_to_input[initializer.name])

# Optimize with onnxoptimizer (writes *_sim_opt.onnx)
passes = ["extract_constant_to_initializer", "eliminate_unused_initializer"]
optimized_model = onnxoptimizer.optimize(model, passes)
save_path = model_path + "_sim_opt.onnx"
onnx.save(optimized_model, save_path)
```

```python
# Two-layer, bidirectional, time-major LSTM
class Bi_Two_LSTM_time(nn.Layer):
    def __init__(self, in_channels=3, out_channels=4):
        super().__init__()
        self.rnn = nn.LSTM(in_channels, out_channels, time_major=True,
                           direction="bidirect", num_layers=2)

    def forward(self, x):
        # b, c, h, w = x.shape
        # x1 = paddle.reshape(x, [b, c, h * w])
        # Initial states: [num_layers * num_directions, batch, hidden] = [4, 1, 4]
        h0 = paddle.zeros((4, 1, 4))
        c0 = paddle.zeros((4, 1, 4))
        x2 = paddle.squeeze(x, 2)               # [1, 3, 6]
        x3 = paddle.transpose(x2, (2, 0, 1))    # [6, 1, 3] = [time, batch, features]
        out, _ = self.rnn(x3, (h0, c0))
        return out  # shape [6, 1, 8]: both directions concatenated (2 * 4)


model_path = "paddle/Bi_Two_LSTM_time"
model = Bi_Two_LSTM_time()
model.eval()
infer_shape = [1, 3, 1, 6]
input_spec = paddle.static.InputSpec(shape=infer_shape, dtype="float32")
# Paddle -> ONNX (writes model_path + ".onnx")
paddle.onnx.export(model, model_path, input_spec=[input_spec],
                   opset_version=11, enable_onnx_checker=True)

# Simplify with onnx-simplifier (writes *_sim.onnx)
model = onnx.load(model_path + ".onnx")
model_sim, check = simplify(model)
assert check, "simplified onnx model could not be validated"
save_path = model_path + "_sim.onnx"
onnx.save(model_sim, save_path)

# Drop initializers from the graph inputs so they are treated as constants
model = onnx.load(save_path)
if model.ir_version < 4:
    print("Model with ir_version below 4 requires initializers to be included in graph inputs")
    sys.exit()
inputs = model.graph.input
name_to_input = {graph_input.name: graph_input for graph_input in inputs}
for initializer in model.graph.initializer:
    if initializer.name in name_to_input:
        inputs.remove(name_to_input[initializer.name])

# Optimize with onnxoptimizer (writes *_sim_opt.onnx)
passes = ["extract_constant_to_initializer", "eliminate_unused_initializer"]
optimized_model = onnxoptimizer.optimize(model, passes)
save_path = model_path + "_sim_opt.onnx"
onnx.save(optimized_model, save_path)
```

```text
2022-08-03 09:40:26 [INFO] ONNX model generated is valid.
2022-08-03 09:40:26 [INFO] ONNX model saved in paddle/One_LSTM_time.onnx
2022-08-03 09:40:26 [INFO] ONNX model generated is valid.
2022-08-03 09:40:26 [INFO] ONNX model saved in paddle/Two_LSTM_time.onnx
2022-08-03 09:40:26 [INFO] ONNX model generated is valid.
2022-08-03 09:40:26 [INFO] ONNX model saved in paddle/Bi_Two_LSTM_time.onnx
```

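Paddle's LSTM also accepts a `sequence_length` argument (passed as `sequence_lens` in the code), which PyTorch's LSTM does not have. It gives the valid length of each sequence in the batch: time steps whose index is not less than that length are cut off and treated as padding. We try it only on a single-layer LSTM with `time_major=True` as a small experiment.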
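According to Paddle's documentation, when `sequence_length` is given the inputs are treated as padded sequences, and elements whose time-step index is not less than the valid length count as padding. A NumPy sketch of that masking idea, under stated assumptions: a plain tanh RNN stands in for the LSTM cell, padded steps keep the previous state, and padded outputs are zeroed. It illustrates the semantics, not Paddle's kernel. With `lengths=[6]` over 6 time steps, as in the experiment, the mask is all ones, so masking changes nothing:

```python
import numpy as np


def sequence_mask(lengths, max_len):
    """mask[b, t] = 1.0 if t < lengths[b] else 0.0 (0.0 marks padding)."""
    steps = np.arange(max_len)[None, :]
    return (steps < np.asarray(lengths)[:, None]).astype(np.float64)


def masked_rnn(x, w_x, w_h, h0, lengths=None):
    """Time-major tanh RNN over x [time, batch, input] that ignores padded steps.

    A tanh RNN cell stands in for the LSTM cell; the masking is cell-agnostic.
    """
    T, B = x.shape[0], x.shape[1]
    mask = np.ones((T, B)) if lengths is None else sequence_mask(lengths, T).T
    h, outs = h0, []
    for t in range(T):
        h_new = np.tanh(x[t] @ w_x + h @ w_h)
        m = mask[t][:, None]            # [batch, 1]
        h = m * h_new + (1.0 - m) * h   # padded step: keep the previous state
        outs.append(m * h)              # padded step: output zeros (assumption)
    return np.stack(outs), h


rng = np.random.default_rng(0)
T, B, I, H = 6, 1, 3, 4
x = rng.standard_normal((T, B, I))
w_x, w_h = rng.standard_normal((I, H)), rng.standard_normal((H, H))
h0 = np.zeros((B, H))

# lengths == number of time steps, as in Seq_One_LSTM_time: nothing is masked
out_full, h_full = masked_rnn(x, w_x, w_h, h0, lengths=[6])
out_ref, h_ref = masked_rnn(x, w_x, w_h, h0)
assert np.allclose(out_full, out_ref) and np.allclose(h_full, h_ref)

# lengths == 4: steps 4 and 5 are padding
out_4, h_4 = masked_rnn(x, w_x, w_h, h0, lengths=[4])
_, h_first4 = masked_rnn(x[:4], w_x, w_h, h0)
assert np.allclose(h_4, h_first4) and np.allclose(out_4[4:], 0.0)
```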

```python
# Single-layer, time-major LSTM with an explicit sequence_length
class Seq_One_LSTM_time(nn.Layer):
    def __init__(self, in_channels=3, out_channels=4):
        super().__init__()
        self.rnn = nn.LSTM(in_channels, out_channels, time_major=True)

    def forward(self, x):
        # b, c, h, w = x.shape
        # x1 = paddle.reshape(x, [b, c, h * w])
        h0 = paddle.zeros((1, 1, 4))
        c0 = paddle.zeros((1, 1, 4))
        sequence_lens = paddle.to_tensor([6])   # shape [batch]: valid length of each sequence
        x2 = paddle.squeeze(x, 2)               # [1, 3, 6]
        x3 = paddle.transpose(x2, (2, 0, 1))    # [6, 1, 3] = [time, batch, features]
        out, _ = self.rnn(inputs=x3, initial_states=(h0, c0), sequence_length=sequence_lens)
        return out  # shape [6, 1, 4]


model_path = "paddle/Seq_One_LSTM_time"
model = Seq_One_LSTM_time()
model.eval()
infer_shape = [1, 3, 1, 6]
input_spec = paddle.static.InputSpec(shape=infer_shape, dtype="float32")
# Paddle -> ONNX (writes model_path + ".onnx")
paddle.onnx.export(model, model_path, input_spec=[input_spec],
                   opset_version=11, enable_onnx_checker=True)

# Simplify with onnx-simplifier (writes *_sim.onnx)
model = onnx.load(model_path + ".onnx")
model_sim, check = simplify(model)
assert check, "simplified onnx model could not be validated"
save_path = model_path + "_sim.onnx"
onnx.save(model_sim, save_path)

# Drop initializers from the graph inputs so they are treated as constants
model = onnx.load(save_path)
if model.ir_version < 4:
    print("Model with ir_version below 4 requires initializers to be included in graph inputs")
    sys.exit()
inputs = model.graph.input
name_to_input = {graph_input.name: graph_input for graph_input in inputs}
for initializer in model.graph.initializer:
    if initializer.name in name_to_input:
        inputs.remove(name_to_input[initializer.name])

# Optimize with onnxoptimizer (writes *_sim_opt.onnx)
passes = ["extract_constant_to_initializer", "eliminate_unused_initializer"]
optimized_model = onnxoptimizer.optimize(model, passes)
save_path = model_path + "_sim_opt.onnx"
onnx.save(optimized_model, save_path)
```


```text
2022-08-03 15:09:00 [INFO] ONNX model generated is valid.
2022-08-03 15:09:00 [INFO] ONNX model saved in paddle/Seq_One_LSTM_time.onnx
```


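This case covers calling the LSTM without passing the initial states `h0` and `c0` manually; internally they are then initialized to all zeros. PyTorch works the same way, but the resulting ONNX graphs differ: the graph PyTorch exports without initial states matches the graph Paddle exports when the initial states are passed manually. We will come back to this later; comparing all the graphs side by side makes the differences clear.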
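A practical way to compare the graphs is to look at which optional inputs the exported ONNX `LSTM` node wires up. In the ONNX operator specification, `initial_h` and `initial_c` are optional inputs that are assumed to be zero when omitted, so a zero initial state can show up either as missing inputs or as explicit zero tensors feeding the node. A small pure-Python helper (hypothetical name) that labels a node's positional inputs:

```python
# Positional inputs of the ONNX LSTM operator, in spec order.
ONNX_LSTM_INPUTS = ["X", "W", "R", "B", "sequence_lens", "initial_h", "initial_c", "P"]


def describe_lstm_inputs(node_inputs):
    """Map an LSTM node's input names to ONNX slot names.

    An omitted optional input is either left off the end of the list or
    given as an empty string; both are reported as None.
    """
    return {
        slot: (node_inputs[i] if i < len(node_inputs) else "") or None
        for i, slot in enumerate(ONNX_LSTM_INPUTS)
    }


# Hypothetical node input lists, for illustration only
explicit = describe_lstm_inputs(["x", "W", "R", "B", "", "h0", "c0"])
implicit = describe_lstm_inputs(["x", "W", "R", "B"])
print(explicit["initial_h"], implicit["initial_h"])  # h0 None
```

To apply it to an exported file, load it with `onnx.load(...)`, iterate over `model.graph.node`, and call `describe_lstm_inputs(list(node.input))` for nodes whose `op_type` is `"LSTM"`. This assumes the exporter emitted a native ONNX `LSTM` node rather than decomposing it into smaller ops.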

```python
# Single-layer, time-major LSTM without explicit initial states
class Ini_One_LSTM_time(nn.Layer):
    def __init__(self, in_channels=3, out_channels=4):
        super().__init__()
        self.rnn = nn.LSTM(in_channels, out_channels, time_major=True)

    def forward(self, x):
        # b, c, h, w = x.shape
        # x1 = paddle.reshape(x, [b, c, h * w])
        x2 = paddle.squeeze(x, 2)               # [1, 3, 6]
        x3 = paddle.transpose(x2, (2, 0, 1))    # [6, 1, 3] = [time, batch, features]
        out, _ = self.rnn(x3)                   # h0/c0 default to zeros
        return out  # shape [6, 1, 4]


model_path = "paddle/Ini_One_LSTM_time"
model = Ini_One_LSTM_time()
model.eval()
infer_shape = [1, 3, 1, 6]
input_spec = paddle.static.InputSpec(shape=infer_shape, dtype="float32")
# Paddle -> ONNX (writes model_path + ".onnx")
paddle.onnx.export(model, model_path, input_spec=[input_spec],
                   opset_version=11, enable_onnx_checker=True)

# Simplify with onnx-simplifier (writes *_sim.onnx)
model = onnx.load(model_path + ".onnx")
model_sim, check = simplify(model)
assert check, "simplified onnx model could not be validated"
save_path = model_path + "_sim.onnx"
onnx.save(model_sim, save_path)

# Drop initializers from the graph inputs so they are treated as constants
model = onnx.load(save_path)
if model.ir_version < 4:
    print("Model with ir_version below 4 requires initializers to be included in graph inputs")
    sys.exit()
inputs = model.graph.input
name_to_input = {graph_input.name: graph_input for graph_input in inputs}
for initializer in model.graph.initializer:
    if initializer.name in name_to_input:
        inputs.remove(name_to_input[initializer.name])

# Optimize with onnxoptimizer (writes *_sim_opt.onnx)
passes = ["extract_constant_to_initializer", "eliminate_unused_initializer"]
optimized_model = onnxoptimizer.optimize(model, passes)
save_path = model_path + "_sim_opt.onnx"
onnx.save(optimized_model, save_path)
```

```text
2022-08-03 15:06:50 [INFO] ONNX model generated is valid.
2022-08-03 15:06:50 [INFO] ONNX model saved in paddle/Ini_One_LSTM_time.onnx
```

```python
# List the exported models; sorting keeps X.onnx, X_sim.onnx, X_sim_opt.onnx together
paddle_onnx = sorted(os.listdir("paddle"))
paddle_onnx_paths = [os.path.join("paddle", path) for path in paddle_onnx]
print(paddle_onnx)
```

```text
['Bi_Two_LSTM_batch.onnx', 'Bi_Two_LSTM_batch_sim.onnx', 'Bi_Two_LSTM_batch_sim_opt.onnx', 'Bi_Two_LSTM_time.onnx', 'Bi_Two_LSTM_time_sim.onnx', 'Bi_Two_LSTM_time_sim_opt.onnx', 'Ini_One_LSTM_time.onnx', 'Ini_One_LSTM_time_sim.onnx', 'Ini_One_LSTM_time_sim_opt.onnx', 'One_LSTM_batch.onnx', 'One_LSTM_batch_sim.onnx', 'One_LSTM_batch_sim_opt.onnx', 'One_LSTM_time.onnx', 'One_LSTM_time_sim.onnx', 'One_LSTM_time_sim_opt.onnx', 'Seq_One_LSTM_time.onnx', 'Seq_One_LSTM_time_sim.onnx', 'Seq_One_LSTM_time_sim_opt.onnx', 'Two_LSTM_batch.onnx', 'Two_LSTM_batch_sim.onnx', 'Two_LSTM_batch_sim_opt.onnx', 'Two_LSTM_time.onnx', 'Two_LSTM_time_sim.onnx', 'Two_LSTM_time_sim_opt.onnx']
```

```python
# Check the size of each model (IPython/Jupyter shell escape)
!du -sh paddle/*
```

```text
16K  paddle/Bi_Two_LSTM_batch.onnx
8.0K paddle/Bi_Two_LSTM_batch_sim.onnx
8.0K paddle/Bi_Two_LSTM_batch_sim_opt.onnx
16K  paddle/Bi_Two_LSTM_time.onnx
8.0K paddle/Bi_Two_LSTM_time_sim.onnx
8.0K paddle/Bi_Two_LSTM_time_sim_opt.onnx
8.0K paddle/Ini_One_LSTM_time.onnx
4.0K paddle/Ini_One_LSTM_time_sim.onnx
4.0K paddle/Ini_One_LSTM_time_sim_opt.onnx
8.0K paddle/One_LSTM_batch.onnx
4.0K paddle/One_LSTM_batch_sim.onnx
4.0K paddle/One_LSTM_batch_sim_opt.onnx
8.0K paddle/One_LSTM_time.onnx
4.0K paddle/One_LSTM_time_sim.onnx
4.0K paddle/One_LSTM_time_sim_opt.onnx
8.0K paddle/Seq_One_LSTM_time.onnx
4.0K paddle/Seq_One_LSTM_time_sim.onnx
4.0K paddle/Seq_One_LSTM_time_sim_opt.onnx
12K  paddle/Two_LSTM_batch.onnx
4.0K paddle/Two_LSTM_batch_sim.onnx
4.0K paddle/Two_LSTM_batch_sim_opt.onnx
12K  paddle/Two_LSTM_time.onnx
4.0K paddle/Two_LSTM_time_sim.onnx
4.0K paddle/Two_LSTM_time_sim_opt.onnx
```
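`!du -sh` works only inside IPython/Jupyter, and `du` reports disk usage rounded up to whole filesystem blocks (typically 4 KiB), which is why every size above is a multiple of 4.0K. A plain-Python sketch (hypothetical helper name) that reports exact byte counts instead:

```python
import os


def onnx_file_sizes(directory):
    """Return {file_name: size_in_bytes} for every .onnx file in directory."""
    return {
        name: os.path.getsize(os.path.join(directory, name))
        for name in sorted(os.listdir(directory))
        if name.endswith(".onnx")
    }
```

For example, `for name, size in onnx_file_sizes("paddle").items(): print(f"{size:>8} B  {name}")` prints one line per model, which makes the shrinkage from `X.onnx` to `X_sim.onnx` easier to compare than block-rounded sizes.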

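Load each ONNX model, run inference, and compare the results pairwise within each group of three files (original, simplified, and optimized).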
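The comparison loop groups files three at a time with `i % 3 == 2`, which works only because sorting places each model's `X.onnx`, `X_sim.onnx` and `X_sim_opt.onnx` next to each other; a single missing or extra file in `paddle/` would shift every later group. A sketch of grouping by model name instead (hypothetical helper, assuming the `_sim`/`_sim_opt` naming used here):

```python
import re


def group_by_model(file_names):
    """Group X.onnx, X_sim.onnx and X_sim_opt.onnx under the model name X."""
    groups = {}
    for name in sorted(file_names):
        if not name.endswith(".onnx"):
            continue
        stem = re.sub(r"(_sim_opt|_sim)?\.onnx$", "", name)
        groups.setdefault(stem, []).append(name)
    return groups


names = ["One_LSTM_time_sim_opt.onnx", "One_LSTM_time.onnx", "One_LSTM_time_sim.onnx",
         "Two_LSTM_time.onnx", "Two_LSTM_time_sim.onnx"]
for stem, members in group_by_model(names).items():
    print(stem, members)
# One_LSTM_time ['One_LSTM_time.onnx', 'One_LSTM_time_sim.onnx', 'One_LSTM_time_sim_opt.onnx']
# Two_LSTM_time ['Two_LSTM_time.onnx', 'Two_LSTM_time_sim.onnx']
```

Each group's files could then be run through `onnx_infer` and compared with `np.testing.assert_allclose`, and an incomplete group stays visible instead of silently misaligning the rest.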

```python
def onnx_infer(model_path, data):
    """Run one ONNX model with onnxruntime and return its first output.

    Args:
        model_path (str): path to the .onnx file.
        data (np.ndarray): input array fed to the model's first input.
    """
    # onnxruntime >= 1.9 GPU builds require an explicit providers list
    onnx_session = onnxruntime.InferenceSession(model_path, providers=["CPUExecutionProvider"])
    input_name = onnx_session.get_inputs()[0].name
    output_name = onnx_session.get_outputs()[0].name
    result = onnx_session.run([output_name], {input_name: data})
    return result[0]


test_data = np.random.random((1, 3, 1, 6)).astype(np.float32)  # batch, channel, height, width
results = {}
for i, onnx_path in enumerate(paddle_onnx_paths):
    result = onnx_infer(onnx_path, test_data)
    results[os.path.basename(onnx_path)] = result
    # Every three consecutive files (X.onnx, X_sim.onnx, X_sim_opt.onnx) form one group
    if i % 3 == 2:
        try:
            values = list(results.values())
            np.testing.assert_allclose(values[0], values[1], rtol=1e-5)
            np.testing.assert_allclose(values[2], values[1], rtol=1e-5)
            print(f"{list(results.keys())} have same results")
        except AssertionError:
            print(f"{list(results.keys())} have different results")
        finally:
            results = {}
```

ONNX Runtime logs a warning like the following for each initializer that is still listed among the graph inputs (`Concat_4`, `Concat_5`, `Concat_8`, `Slice_12`, `Slice_13`, `Constant_44`, and so on, repeated for each model it loads). Removing initializers from the graph inputs, as the export script does before optimization, is intended to avoid exactly this:

```text
2022-08-03 17:03:35.322915835 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Concat_4 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.
```

2022-08-03 17:03:35.482235089 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Concat_7 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.482240251 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Concat_10 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.482245348 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Constant_44 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.482250361 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Constant_45 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.482255449 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Constant_46 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.482267806 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Constant_47 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.482273280 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Constant_48 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.482278188 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Constant_49 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.482283068 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Constant_50 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.544810887 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Concat_4 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.544836135 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Concat_5 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.544842815 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Concat_8 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.544848451 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Slice_12 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.544853781 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Slice_13 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.544859396 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Constant_44 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

['Bi_Two_LSTM_batch.onnx', 'Bi_Two_LSTM_batch_sim.onnx', 'Bi_Two_LSTM_batch_sim_opt.onnx'] have same results

['Bi_Two_LSTM_time.onnx', 'Bi_Two_LSTM_time_sim.onnx', 'Bi_Two_LSTM_time_sim_opt.onnx'] have same results

['Ini_One_LSTM_time.onnx', 'Ini_One_LSTM_time_sim.onnx', 'Ini_One_LSTM_time_sim_opt.onnx'] have same results

['One_LSTM_batch.onnx', 'One_LSTM_batch_sim.onnx', 'One_LSTM_batch_sim_opt.onnx'] have same results

['One_LSTM_time.onnx', 'One_LSTM_time_sim.onnx', 'One_LSTM_time_sim_opt.onnx'] have same results

['Seq_One_LSTM_time.onnx', 'Seq_One_LSTM_time_sim.onnx', 'Seq_One_LSTM_time_sim_opt.onnx'] have same results

['Two_LSTM_batch.onnx', 'Two_LSTM_batch_sim.onnx', 'Two_LSTM_batch_sim_opt.onnx'] have same results

['Two_LSTM_time.onnx', 'Two_LSTM_time_sim.onnx', 'Two_LSTM_time_sim_opt.onnx'] have same results

2022-08-03 17:03:35.627142152 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Concat_4 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.627166154 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Concat_5 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.627172672 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Concat_8 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.627178399 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Slice_12 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.627184004 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Slice_13 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.627189596 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Constant_44 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.680342507 [W:onnxruntime:, graph.cc:3559 CleanUnusedInitializersAndNodeArgs] Removing initializer 'assign_0.tmp_0'. It is not used by any node and should be removed from the model.

2022-08-03 17:03:35.684640360 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Concat_4 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.684662133 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Concat_5 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.684672193 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Concat_8 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.684680922 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Slice_12 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.684688933 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Slice_13 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.684697379 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Constant_44 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.710238396 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Concat_4 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.710278276 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Concat_5 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.710292209 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Concat_8 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.710303902 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Slice_12 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.710315317 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Slice_13 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.710326920 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Constant_44 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.710337914 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Concat_13 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.710348837 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Concat_14 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.710359584 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Concat_17 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.710370872 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Slice_26 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.710383823 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Slice_27 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.710395467 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Constant_87 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.810340323 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Concat_4 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.810366323 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Concat_5 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.810374143 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Concat_8 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.810380074 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Slice_12 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.810385473 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Slice_13 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.810391336 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Constant_44 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.810396558 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Concat_13 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.810401858 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Concat_14 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.810407006 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Concat_17 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.810412186 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Slice_26 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.810417317 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Slice_27 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

2022-08-03 17:03:35.810424180 [W:onnxruntime:, graph.cc:1271 Graph] Initializer Constant_87 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

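The inference results of the ONNX models above agree within a tolerance of 1e-5 (one part in 100,000), so the results are effectively identical. The many warnings come from the models with the `_sim` suffix; the original models and the ones ending in `_opt` do not trigger them.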
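The "... have same results" lines above come from running every variant of a model on the same input and comparing the outputs. Below is a minimal sketch of such a check. It assumes each model has a single input and compares every output against the first model's output with `np.allclose`. The author's actual comparison code is not shown in this section, and `outputs_match` and `run_onnx` are hypothetical helper names.

```python
import numpy as np


def outputs_match(outputs, rtol=1e-5, atol=1e-5):
    """Return True if every array in `outputs` matches the first one within tolerance.

    `outputs` maps a model name (e.g. an .onnx file name) to that model's output array.
    """
    names = list(outputs)
    ref = outputs[names[0]]
    return all(
        outputs[n].shape == ref.shape and np.allclose(outputs[n], ref, rtol=rtol, atol=atol)
        for n in names[1:]
    )


def run_onnx(path, x):
    """Run a single-input ONNX model on the CPU with onnxruntime and return its first output."""
    import onnxruntime  # imported lazily so outputs_match() works without onnxruntime

    sess = onnxruntime.InferenceSession(path, providers=["CPUExecutionProvider"])
    input_name = sess.get_inputs()[0].name
    return sess.run(None, {input_name: x})[0]
```

Usage would look like `outputs_match({f: run_onnx(f, x) for f in files})`, where `files` lists e.g. `One_LSTM_batch.onnx`, `One_LSTM_batch_sim.onnx` and `One_LSTM_batch_sim_opt.onnx`, and `x` is one float32 input array.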

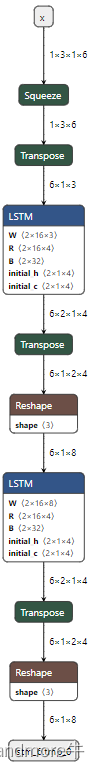

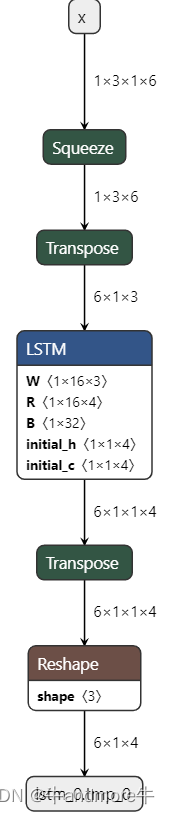

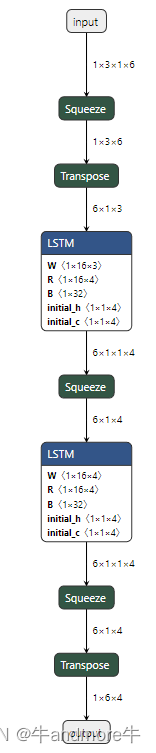

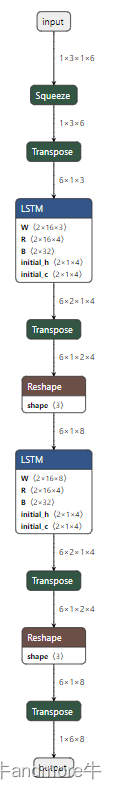

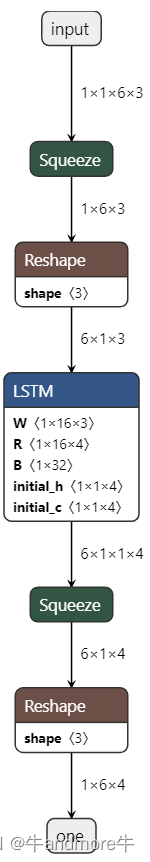

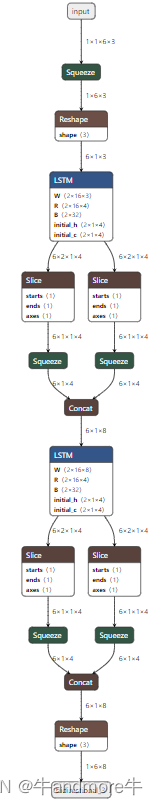

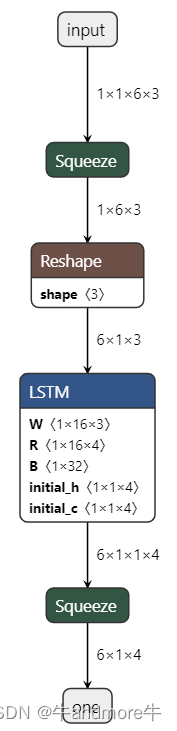

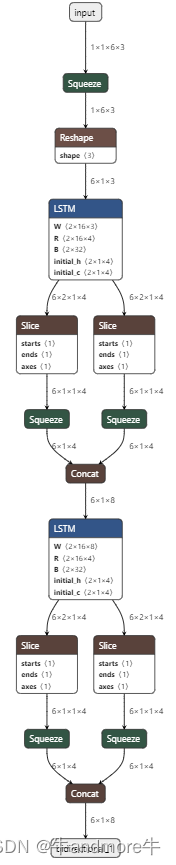

The following are the structure diagrams of the generated ONNX models whose names end in `_opt`:

| Type | Single layer | Two layers | Two layers, bidirectional |
|---|---|---|---|
| time_major=False |  |  |  |
| time_major=True |  |  |  |

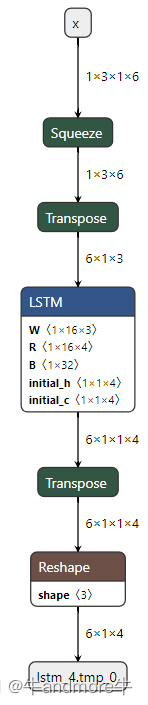

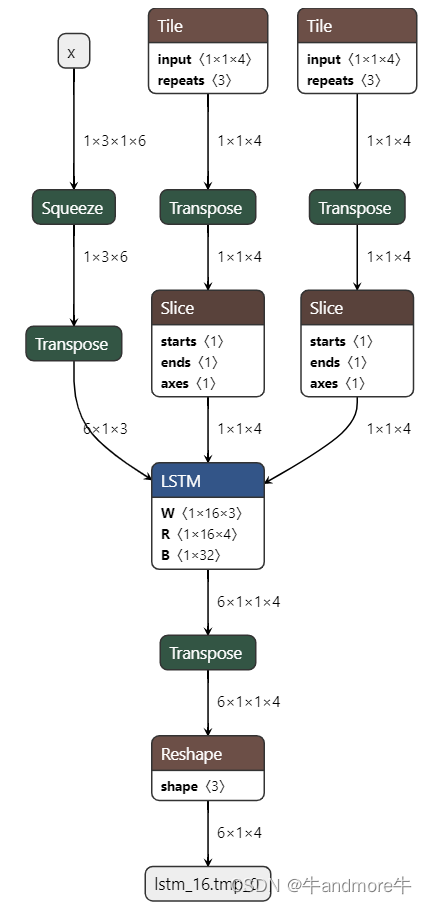

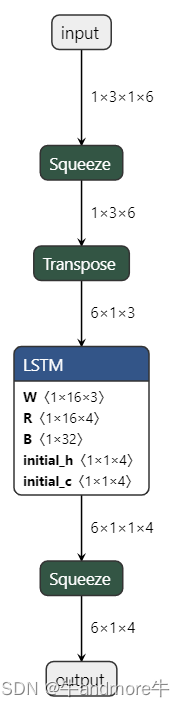

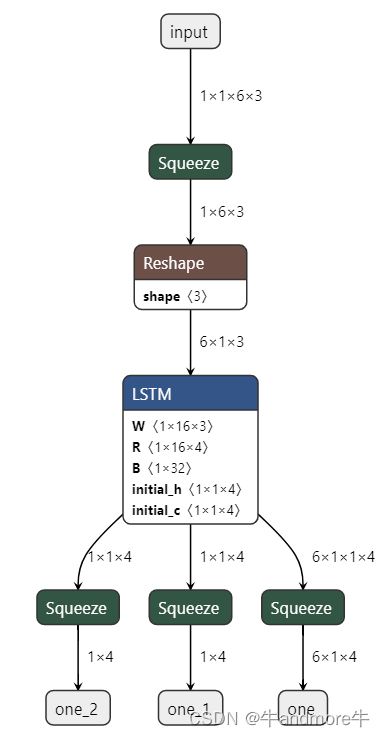

Next is the effect of the `sequence_lens` parameter, shown with a single-layer LSTM only. The resulting graph is:

There is also the graph of a single-layer LSTM without a custom initial state, shown below:

As you can see, extra operators are added to the graph, and they are actually unnecessary.
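The NumPy sketch below shows what `sequence_lens` computes: a single-direction, time-major LSTM that honours a length for each sample. It assumes the padding convention onnxruntime appears to follow. Outputs past a sample's length are zero, and that sample's final state is taken from its last valid step. The function name and the PyTorch-style gate order (i, f, g, o) are choices made for this illustration only. The ONNX LSTM operator packs its gates as i, o, f, c.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_time_major(x, W, R, b, seq_lens, h0=None, c0=None):
    """Single-direction LSTM over time-major input x of shape (T, N, I).

    W: (4H, I), R: (4H, H), b: (4H,), gates stacked as i, f, g, o.
    For steps t >= seq_lens[n], sample n keeps its state and its output row is zero.
    """
    T, N, _ = x.shape
    H = R.shape[1]
    h = np.zeros((N, H)) if h0 is None else h0.copy()
    c = np.zeros((N, H)) if c0 is None else c0.copy()
    Y = np.zeros((T, N, H))
    for t in range(T):
        gates = x[t] @ W.T + h @ R.T + b                  # (N, 4H)
        i, f, g, o = np.split(gates, 4, axis=1)
        c_new = sigmoid(f) * c + sigmoid(i) * np.tanh(g)
        h_new = sigmoid(o) * np.tanh(c_new)
        valid = (t < np.asarray(seq_lens))[:, None]       # (N, 1): samples still inside their length
        c = np.where(valid, c_new, c)                      # padded steps do not touch the state
        h = np.where(valid, h_new, h)
        Y[t] = np.where(valid, h_new, 0.0)                 # padded steps output zeros
    return Y, h, c
```

With `seq_lens=[6, 3]`, the second sample's final hidden state equals its output at step 2, and its rows of `Y` from step 3 onward are zero. An exporter has to express this masking with operators somehow, which is one plausible source of the extra nodes in a graph with `sequence_lens`.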

Because the PyTorch export here is called with `keep_initializers_as_inputs=False`, only the sim (onnxsim) step is needed. Otherwise, as with Paddle, one more step would have to be run.

import os
import sys
sys.path.append('/home/tl/anaconda3/envs/pdle/lib/python3.7/site-packages')  # also search the site-packages of the "pdle" env
import torch
from torch import nn
import numpy as np
from onnxsim import simplify
import onnxoptimizer
import onnx
import onnxruntime

class One_LSTM_batch(nn.Module):
    def __init__(self, in_channels=3, out_channels=4):
        super().__init__()
        self.rnn = nn.LSTM(in_channels, out_channels, batch_first=True)

    def forward(self, x):
        # b,c,h,w =x.shape
        # x1 = torch.reshape(x,[b,c,h*w])
        x2 = torch.squeeze(x, 2)           # (1,3,1,6) -> (1,3,6)
        x3 = torch.permute(x2, (0, 2, 1))  # -> (1,6,3): batch, time, feature
        out, _ = self.rnn(x3)
        return out  # shape 1,6,4

model = One_LSTM_batch()
model.to('cpu')
model.eval()
input = torch.randn(1, 3, 1, 6)
output = model(input)
print("output shape:", output.shape)

input_shapes = [(1, 3, 1, 6)]
onnx_export_path = "torch/One_lstm_batch.onnx"
os.makedirs(os.path.dirname(onnx_export_path), exist_ok=True)  # make sure the output folder exists
dummy_input = []
for ele in input_shapes:
    dummy_input.append(torch.randn(ele))
dummy_input = tuple(dummy_input)

# torch.onnx.export(model, dummy_input, onnx_export_path,export_params=True,verbose=False, opset_version=11,do_constant_folding=True, input_names=["input"], output_names=["output"], dynamic_axes={'input' : {0 : 'batch_size'},'output' : {0 : 'batch_size'}})
torch.onnx.export(model, dummy_input, onnx_export_path, export_params=True, verbose=False,
                  opset_version=11, do_constant_folding=True, keep_initializers_as_inputs=False,
                  input_names=["input"], output_names=["output"])
print("export onnx to:", onnx_export_path)

# Simplify with onnx-simplifier and save next to the original as *_sim.onnx
onnx_model = onnx.load(onnx_export_path)
model_sim, check = simplify(onnx_model)
assert check, "simplified onnx model could not be validated"
save_path = os.path.splitext(onnx_export_path)[0] + "_sim.onnx"
onnx.save(model_sim, save_path)
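The `squeeze`/`permute` pair in `forward()` turns an (N, C, 1, W) feature map into an LSTM sequence. The NumPy sketch below mirrors those two steps for both layouts used in this post: batch-first (`batch_first=True`, the counterpart of Paddle's `time_major=False`) and time-major. `to_sequence` is a hypothetical helper, not part of either framework.

```python
import numpy as np


def to_sequence(feat, time_major=False):
    """Turn an (N, C, 1, W) feature map into an LSTM input sequence.

    batch-first: (N, W, C) -> batch, time, feature
    time-major:  (W, N, C) -> time, batch, feature
    """
    x = np.squeeze(feat, axis=2)                    # (N, C, W)
    axes = (2, 0, 1) if time_major else (0, 2, 1)   # same permutations as in forward()
    return np.transpose(x, axes)
```

In both layouts, each column of the feature map becomes one time step, and the channels become the LSTM's input features.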

class Two_LSTM_batch(nn.Module):
    def __init__(self, in_channels=3, out_channels=4):
        super().__init__()
        self.rnn = nn.LSTM(in_channels, out_channels, batch_first=True, num_layers=2)

    def forward(self, x):
        # b,c,h,w =x.shape
        # x1 = torch.reshape(x,[b,c,h*w])
        x2 = torch.squeeze(x, 2)
        x3 = torch.permute(x2, (0, 2, 1))
        out, _ = self.rnn(x3)
        return out  # shape 1,6,4

model = Two_LSTM_batch()
model.to('cpu')
model.eval()
input = torch.randn(1, 3, 1, 6)
output = model(input)
print("output shape:", output.shape)

input_shapes = [(1, 3, 1, 6)]
onnx_export_path = "torch/Two_lstm_batch.onnx"
dummy_input = []
for ele in input_shapes:
    dummy_input.append(torch.randn(ele))
dummy_input = tuple(dummy_input)

# torch.onnx.export(model, dummy_input, onnx_export_path,export_params=True,verbose=False, opset_version=11,do_constant_folding=True, input_names=["input"], output_names=["output"], dynamic_axes={'input' : {0 : 'batch_size'},'output' : {0 : 'batch_size'}})
torch.onnx.export(model, dummy_input, onnx_export_path, export_params=True, verbose=False,
                  opset_version=11, do_constant_folding=True, keep_initializers_as_inputs=False,
                  input_names=["input"], output_names=["output"])
print("export onnx to:", onnx_export_path)

onnx_model = onnx.load(onnx_export_path)
model_sim, check = simplify(onnx_model)
assert check, "simplified onnx model could not be validated"
save_path = os.path.splitext(onnx_export_path)[0] + "_sim.onnx"
onnx.save(model_sim, save_path)

class Bi_Two_LSTM_batch(nn.Module):
    def __init__(self, in_channels=3, out_channels=4):
        super().__init__()
        self.rnn = nn.LSTM(in_channels, out_channels, batch_first=True, num_layers=2, bidirectional=True)

    def forward(self, x):
        # b,c,h,w =x.shape
        # x1 = torch.reshape(x,[b,c,h*w])
        x2 = torch.squeeze(x, 2)
        x3 = torch.permute(x2, (0, 2, 1))
        out, _ = self.rnn(x3)
        return out  # shape 1,6,8: both directions are concatenated (2 x 4)

model = Bi_Two_LSTM_batch()
model.to('cpu')
model.eval()
input = torch.randn(1, 3, 1, 6)
output = model(input)
print("output shape:", output.shape)

input_shapes = [(1, 3, 1, 6)]
onnx_export_path = "torch/Bi_Two_lstm_batch.onnx"
dummy_input = []
for ele in input_shapes:
    dummy_input.append(torch.randn(ele))
dummy_input = tuple(dummy_input)

# torch.onnx.export(model, dummy_input, onnx_export_path,export_params=True,verbose=False, opset_version=11,do_constant_folding=True, input_names=["input"], output_names=["output"], dynamic_axes={'input' : {0 : 'batch_size'},'output' : {0 : 'batch_size'}})
torch.onnx.export(model, dummy_input, onnx_export_path, export_params=True, verbose=False,
                  opset_version=11, do_constant_folding=True, keep_initializers_as_inputs=False,
                  input_names=["input"], output_names=["output"])
print("export onnx to:", onnx_export_path)

onnx_model = onnx.load(onnx_export_path)
model_sim, check = simplify(onnx_model)
assert check, "simplified onnx model could not be validated"
save_path = os.path.splitext(onnx_export_path)[0] + "_sim.onnx"
onnx.save(model_sim, save_path)

output shape: torch.Size([1, 6, 4])

export onnx to: torch/One_lstm_batch.onnx

output shape: torch.Size([1, 6, 4])

export onnx to: torch/Two_lstm_batch.onnx

/home/tl/anaconda3/envs/ptch/lib/python3.7/site-packages/torch/onnx/symbolic_opset9.py:2192: UserWarning: Exporting a model to ONNX with a batch_size other than 1, with a variable length with LSTM can cause an error when running the ONNX model with a different batch size. Make sure to save the model with a batch size of 1, or define the initial states (h0/c0) as inputs of the model.

"or define the initial states (h0/c0) as inputs of the model. ")

WARNING: The shape inference of prim::Constant type is missing, so it may result in wrong shape inference for the exported graph. Please consider adding it in symbolic function.

WARNING: The shape inference of prim::Constant type is missing, so it may result in wrong shape inference for the exported graph. Please consider adding it in symbolic function.

WARNING: The shape inference of prim::Constant type is missing, so it may result in wrong shape inference for the exported graph. Please consider adding it in symbolic function.

WARNING: The shape inference of prim::Constant type is missing, so it may result in wrong shape inference for the exported graph. Please consider adding it in symbolic function.

WARNING: The shape inference of prim::Constant type is missing, so it may result in wrong shape inference for the exported graph. Please consider adding it in symbolic function.

WARNING: The shape inference of prim::Constant type is missing, so it may result in wrong shape inference for the exported graph. Please consider adding it in symbolic function.

output shape: torch.Size([1, 6, 8])

export onnx to: torch/Bi_Two_lstm_batch.onnx

WARNING: The shape inference of prim::Constant type is missing, so it may result in wrong shape inference for the exported graph. Please consider adding it in symbolic function.

WARNING: The shape inference of prim::Constant type is missing, so it may result in wrong shape inference for the exported graph. Please consider adding it in symbolic function.

WARNING: The shape inference of prim::Constant type is missing, so it may result in wrong shape inference for the exported graph. Please consider adding it in symbolic function.

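Some warnings are emitted, so it is better to pass these arguments in manually as well. The LSTM warning itself recommends defining the initial states (h0/c0) as inputs of the model.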
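For `torch.nn.LSTM`, h0 and c0 both have shape `(num_layers * num_directions, batch, hidden_size)`, and this does not depend on `batch_first`. Below is a minimal numpy sketch of the zero states that the three models in this post would take. The helper `zero_initial_states` is hypothetical and not part of any library. In an actual export, these states would become extra `forward` arguments passed as `self.rnn(x3, (h0, c0))`. They would also be appended to the dummy-input tuple and named in `input_names`.

```python
import numpy as np

def zero_initial_states(batch, hidden_size, num_layers=1, bidirectional=False):
    """Zero (h0, c0) shaped like torch.nn.LSTM expects: (layers * directions, batch, hidden)."""
    num_directions = 2 if bidirectional else 1
    shape = (num_layers * num_directions, batch, hidden_size)
    h0 = np.zeros(shape, dtype=np.float32)
    c0 = np.zeros(shape, dtype=np.float32)
    return h0, c0

# States for the three model variants in this post (hidden_size=4, batch=1)
for name, layers, bi in [("One", 1, False), ("Two", 2, False), ("Bi_Two", 2, True)]:
    h0, c0 = zero_initial_states(batch=1, hidden_size=4, num_layers=layers, bidirectional=bi)
    print(name, h0.shape, c0.shape)
```

Because the batch dimension is explicit here, a model exported with h0/c0 as inputs is no longer tied to the batch size baked into constant initial states.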

class One_LSTM_time(nn.Module):
    def __init__(self,in_channels=3,out_channels=4):
        super().__init__()
        self.rnn = nn.LSTM(in_channels,out_channels,batch_first=False)

    def forward(self,x):
        # b,c,h,w =x.shape
        # x1 = torch.reshape(x,[b,c,h*w])
        x2 = torch.squeeze(x,2)            # (1,3,1,6) -> (1,3,6)
        x3 = torch.permute(x2,(2,0,1))     # -> (seq_len=6, batch=1, features=3)
        out,_ = self.rnn(x3)
        return out # shape 6,1,4 (seq_len, batch, hidden)

model = One_LSTM_time()

model.to('cpu')

model.eval()

input = torch.randn(1,3,1,6)

output = model(input)

print("output shape:",output.shape)

input_shapes=[(1,3,1,6)]

onnx_export_path = "torch/One_lstm_time.onnx"

dummy_input=[]

for ele in input_shapes:
    dummy_input.append(torch.randn(ele))

dummy_input=tuple(dummy_input)

# torch.onnx.export(model, dummy_input, onnx_export_path,export_params=True,verbose=False, opset_version=11,do_constant_folding=True, input_names=["input"], output_names=["output"], dynamic_axes={'input' : {0 : 'batch_size'},'output' : {0 : 'batch_size'}})

torch.onnx.export(model, dummy_input, onnx_export_path,export_params=True,verbose=False, opset_version=11,do_constant_folding=True, input_names=["input"], output_names=["output"])

print("export onnx to:",onnx_export_path)

onnx_model = onnx.load(onnx_export_path)

model_sim ,check = simplify(onnx_model)

assert check,"simplified onnx model could not be validated"

save_path = os.path.splitext(onnx_export_path)[0]+"_sim.onnx"

onnx.save(model_sim,save_path)
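`np.squeeze` and `np.transpose` use the same axis semantics as `torch.squeeze` and `torch.permute`. This means the reshaping in `One_LSTM_time.forward` can be checked with numpy alone. Here is a small sketch that uses a random stand-in tensor:

```python
import numpy as np

x = np.random.rand(1, 3, 1, 6).astype(np.float32)   # (batch, channels, height, width)
x2 = np.squeeze(x, axis=2)                           # (batch, channels, width) = (1, 3, 6)
x3 = np.transpose(x2, (2, 0, 1))                     # (width, batch, channels) = (6, 1, 3)

# With batch_first=False, nn.LSTM reads x3 as (seq_len, batch, input_size)
seq_len, batch, input_size = x3.shape
print(seq_len, batch, input_size)                    # 6 1 3

# Time step t of sample 0 is the channel vector at column t of the image
assert np.array_equal(x3[4, 0], x[0, :, 0, 4])
```

The width axis of the image becomes the sequence axis, and the channels become the per-step features. This is why the time-major models print an output shape of `(6, 1, 4)` instead of `(1, 6, 4)`.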

class Two_LSTM_time(nn.Module):
    def __init__(self,in_channels=3,out_channels=4):
        super().__init__()
        self.rnn = nn.LSTM(in_channels,out_channels,batch_first=False,num_layers=2)

    def forward(self,x):
        # b,c,h,w =x.shape
        # x1 = torch.reshape(x,[b,c,h*w])
        x2 = torch.squeeze(x,2)            # (1,3,1,6) -> (1,3,6)
        x3 = torch.permute(x2,(2,0,1))     # -> (seq_len=6, batch=1, features=3)
        out,_ = self.rnn(x3)
        return out # shape 6,1,4 (seq_len, batch, hidden)

model = Two_LSTM_time()

model.to('cpu')

model.eval()

input = torch.randn(1,3,1,6)

output = model(input)

print("output shape:",output.shape)

input_shapes=[(1,3,1,6)]

onnx_export_path = "torch/Two_lstm_time.onnx"

dummy_input=[]

for ele in input_shapes:
    dummy_input.append(torch.randn(ele))

dummy_input=tuple(dummy_input)

# torch.onnx.export(model, dummy_input, onnx_export_path,export_params=True,verbose=False, opset_version=11,do_constant_folding=True, input_names=["input"], output_names=["output"], dynamic_axes={'input' : {0 : 'batch_size'},'output' : {0 : 'batch_size'}})

torch.onnx.export(model, dummy_input, onnx_export_path,export_params=True,verbose=False, opset_version=11,do_constant_folding=True, input_names=["input"], output_names=["output"])

print("export onnx to:",onnx_export_path)

onnx_model = onnx.load(onnx_export_path)

model_sim ,check = simplify(onnx_model)

assert check,"simplified onnx model could not be validated"

save_path = os.path.splitext(onnx_export_path)[0]+"_sim.onnx"

onnx.save(model_sim,save_path)

class Bi_Two_LSTM_time(nn.Module):
    def __init__(self,in_channels=3,out_channels=4):
        super().__init__()
        self.rnn = nn.LSTM(in_channels,out_channels,batch_first=False,num_layers=2,bidirectional=True)

    def forward(self,x):
        # b,c,h,w =x.shape
        # x1 = torch.reshape(x,[b,c,h*w])
        x2 = torch.squeeze(x,2)            # (1,3,1,6) -> (1,3,6)
        x3 = torch.permute(x2,(2,0,1))     # -> (seq_len=6, batch=1, features=3)
        out,_ = self.rnn(x3)
        return out # shape 6,1,8 (seq_len, batch, 2*hidden)

model = Bi_Two_LSTM_time()

model.to('cpu')

model.eval()

input = torch.randn(1,3,1,6)

output = model(input)

print("output shape:",output.shape)

input_shapes=[(1,3,1,6)]

onnx_export_path = "torch/Bi_Two_lstm_time.onnx"

dummy_input=[]

for ele in input_shapes:
    dummy_input.append(torch.randn(ele))

dummy_input=tuple(dummy_input)

# torch.onnx.export(model, dummy_input, onnx_export_path,export_params=True,verbose=False, opset_version=11,do_constant_folding=True, input_names=["input"], output_names=["output"], dynamic_axes={'input' : {0 : 'batch_size'},'output' : {0 : 'batch_size'}})

torch.onnx.export(model, dummy_input, onnx_export_path,export_params=True,verbose=False, opset_version=11,do_constant_folding=True, input_names=["input"], output_names=["output"])

print("export onnx to:",onnx_export_path)

onnx_model = onnx.load(onnx_export_path)

model_sim ,check = simplify(onnx_model)

assert check,"simplified onnx model could not be validated"

save_path = os.path.splitext(onnx_export_path)[0]+"_sim.onnx"

onnx.save(model_sim,save_path)

output shape: torch.Size([6, 1, 4])

export onnx to: torch/One_lstm_time.onnx

output shape: torch.Size([6, 1, 4])

export onnx to: torch/Two_lstm_time.onnx

/home/tl/anaconda3/envs/ptch/lib/python3.7/site-packages/torch/onnx/symbolic_opset9.py:2192: UserWarning: Exporting a model to ONNX with a batch_size other than 1, with a variable length with LSTM can cause an error when running the ONNX model with a different batch size. Make sure to save the model with a batch size of 1, or define the initial states (h0/c0) as inputs of the model.

"or define the initial states (h0/c0) as inputs of the model. ")

WARNING: The shape inference of prim::Constant type is missing, so it may result in wrong shape inference for the exported graph. Please consider adding it in symbolic function.

WARNING: The shape inference of prim::Constant type is missing, so it may result in wrong shape inference for the exported graph. Please consider adding it in symbolic function.

WARNING: The shape inference of prim::Constant type is missing, so it may result in wrong shape inference for the exported graph. Please consider adding it in symbolic function.

WARNING: The shape inference of prim::Constant type is missing, so it may result in wrong shape inference for the exported graph. Please consider adding it in symbolic function.

WARNING: The shape inference of prim::Constant type is missing, so it may result in wrong shape inference for the exported graph. Please consider adding it in symbolic function.

WARNING: The shape inference of prim::Constant type is missing, so it may result in wrong shape inference for the exported graph. Please consider adding it in symbolic function.

output shape: torch.Size([6, 1, 8])

export onnx to: torch/Bi_Two_lstm_time.onnx

WARNING: The shape inference of prim::Constant type is missing, so it may result in wrong shape inference for the exported graph. Please consider adding it in symbolic function.

WARNING: The shape inference of prim::Constant type is missing, so it may result in wrong shape inference for the exported graph. Please consider adding it in symbolic function.

WARNING: The shape inference of prim::Constant type is missing, so it may result in wrong shape inference for the exported graph. Please consider adding it in symbolic function.
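The time-major outputs have the layout `(seq_len, batch, num_directions * hidden_size)`, which gives `(6, 1, 8)` for the bidirectional model. The numpy sketch below uses a random array as a stand-in for that output. It shows how the two directions are laid out (forward first, per the PyTorch `nn.LSTM` documentation) and how to swap axes into the batch-first layout. Note that the `*_batch` and `*_time` models are initialized separately, so only their layouts can be compared, not their values.

```python
import numpy as np

hidden_size = 4
seq_len, batch = 6, 1

# Stand-in for the Bi_Two_LSTM_time output: (seq_len, batch, num_directions * hidden_size)
out_time = np.random.rand(seq_len, batch, 2 * hidden_size).astype(np.float32)

# Split into directions, following PyTorch's documented layout:
# out.view(seq_len, batch, num_directions, hidden_size), direction 0 = forward
per_dir = out_time.reshape(seq_len, batch, 2, hidden_size)
forward, backward = per_dir[:, :, 0], per_dir[:, :, 1]
print(forward.shape, backward.shape)    # (6, 1, 4) (6, 1, 4)

# Swap the first two axes to get the batch-first layout of the *_batch models
out_batch = np.transpose(out_time, (1, 0, 2))
print(out_batch.shape)                  # (1, 6, 8)
```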

pytorch_onnx = sorted(os.listdir('torch'))

pytorch_onnx_paths = sorted([os.path.join('torch',path) for path in pytorch_onnx])

print(pytorch_onnx)

['Bi_Two_lstm_batch.onnx', 'Bi_Two_lstm_batch_sim.onnx', 'Bi_Two_lstm_time.onnx', 'Bi_Two_lstm_time_sim.onnx', 'One_lstm_batch.onnx', 'One_lstm_batch_sim.onnx', 'One_lstm_time.onnx', 'One_lstm_time_sim.onnx', 'Two_lstm_batch.onnx', 'Two_lstm_batch_sim.onnx', 'Two_lstm_time.onnx', 'Two_lstm_time_sim.onnx']

! du -sh torch/*

8.0K torch/Bi_Two_lstm_batch.onnx

8.0K torch/Bi_Two_lstm_batch_sim.onnx

8.0K torch/Bi_Two_lstm_time.onnx

8.0K torch/Bi_Two_lstm_time_sim.onnx

4.0K torch/One_lstm_batch.onnx

4.0K torch/One_lstm_batch_sim.onnx

4.0K torch/One_lstm_time.onnx

4.0K torch/One_lstm_time_sim.onnx

8.0K torch/Two_lstm_batch.onnx

4.0K torch/Two_lstm_batch_sim.onnx

8.0K torch/Two_lstm_time.onnx

4.0K torch/Two_lstm_time_sim.onnx

def onnx_infer(model_path,data):
    """Run a single-input ONNX model with onnxruntime and return its first output.

    Args:
        model_path (str): path to the .onnx file
        data (np.ndarray): input array, fed to the model's first input
    """
    onnx_session=onnxruntime.InferenceSession(model_path, providers=["CPUExecutionProvider"])
    input_name = onnx_session.get_inputs()[0].name
    output_name = onnx_session.get_outputs()[0].name
    result = onnx_session.run([output_name],{input_name:data})
    return result[0]
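`onnx_infer` only feeds the first graph input. A model re-exported with h0/c0 as inputs would need every input fed by name. Below is a minimal sketch of building that feed dict. The function `build_feed` and the input names `"h0"`/`"c0"` are hypothetical, and the sketch assumes all inputs are float32.

```python
import numpy as np

def build_feed(input_names, arrays):
    """Pair every graph input name with an array, in declaration order."""
    if len(input_names) != len(arrays):
        raise ValueError(f"model expects {len(input_names)} inputs, got {len(arrays)}")
    # Assumes every input is float32, as with the LSTM models in this post
    return {name: np.ascontiguousarray(arr, dtype=np.float32)
            for name, arr in zip(input_names, arrays)}

# With onnxruntime the names would come from the session, e.g.
#   names = [node.name for node in onnx_session.get_inputs()]
#   outputs = onnx_session.run(None, build_feed(names, [x, h0, c0]))  # None = all outputs
names = ["input", "h0", "c0"]   # hypothetical names for a model exported with h0/c0 inputs
feed = build_feed(names, [np.zeros((1, 3, 1, 6)), np.zeros((1, 1, 4)), np.zeros((1, 1, 4))])
print({k: v.shape for k, v in feed.items()})
```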

test_data = np.random.random((1,3,1,6)).astype(np.float32) # batch,channel,height,width

results={}

for i,onnx_path in enumerate(pytorch_onnx_paths):
    result = onnx_infer(onnx_path,test_data)
    results[os.path.basename(onnx_path)]=result
    # sorted() puts each X.onnx right before X_sim.onnx, so every second file closes a pair
    if i%2 ==1:
        try:
            values = list(results.values())
            np.testing.assert_allclose(values[0],values[1],rtol=1e-7)
            print(f"{list(results.keys())} have same results")
        except AssertionError:
            print(f"{list(results.keys())} have different results")
        finally:
            results={}

['Bi_Two_lstm_batch.onnx', 'Bi_Two_lstm_batch_sim.onnx'] have same results

['Bi_Two_lstm_time.onnx', 'Bi_Two_lstm_time_sim.onnx'] have same results

['One_lstm_batch.onnx', 'One_lstm_batch_sim.onnx'] have same results

['One_lstm_time.onnx', 'One_lstm_time_sim.onnx'] have same results

['Two_lstm_batch.onnx', 'Two_lstm_batch_sim.onnx'] have same results

['Two_lstm_time.onnx', 'Two_lstm_time_sim.onnx'] have same results
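The comparison loop above depends on `sorted()` placing each `X.onnx` directly before `X_sim.onnx`, and on `i%2` to close each pair. A missing or extra file would therefore silently shift every following pair. The sketch below pairs files by name instead (`pair_with_simplified` is a hypothetical helper). Each returned pair could then go through `onnx_infer` and `np.testing.assert_allclose` as before.

```python
import os

def pair_with_simplified(paths):
    """Map each 'X.onnx' to its 'X_sim.onnx' counterpart by file name, not list position."""
    by_name = {os.path.basename(p): p for p in paths}
    pairs = []
    for name, path in sorted(by_name.items()):
        stem, ext = os.path.splitext(name)
        if stem.endswith("_sim"):
            continue  # simplified files are picked up via their original
        sim_name = stem + "_sim" + ext
        if sim_name in by_name:
            pairs.append((path, by_name[sim_name]))
    return pairs

paths = ["torch/One_lstm_time_sim.onnx", "torch/One_lstm_time.onnx", "torch/Two_lstm_batch.onnx"]
for original, simplified in pair_with_simplified(paths):
    print(original, "<->", simplified)
```

Files without a simplified counterpart (here `Two_lstm_batch.onnx`) are skipped instead of being paired with the wrong file.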

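It looks like the PyTorch-exported ONNX models and their simplified versions give the same results at rtol=1e-7. This is somewhat tighter agreement than with Paddle.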
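`np.testing.assert_allclose(actual, desired, rtol=1e-7)` checks each element against `|actual - desired| <= atol + rtol * |desired|`, with `atol=0` by default. The float32 machine epsilon is about 1.19e-7, so on float32 outputs rtol=1e-7 amounts to agreement within roughly one unit in the last place. Here is a small numpy sketch of that criterion (`within_tolerance` is a hypothetical name):

```python
import numpy as np

def within_tolerance(actual, desired, rtol=1e-7, atol=0.0):
    # Same elementwise criterion numpy.testing.assert_allclose uses:
    # |actual - desired| <= atol + rtol * |desired|
    actual = np.asarray(actual, dtype=np.float64)
    desired = np.asarray(desired, dtype=np.float64)
    return bool(np.all(np.abs(actual - desired) <= atol + rtol * np.abs(desired)))

desired = np.array([0.5, -1.25, 2.0])
print(within_tolerance(desired * (1 + 5e-8), desired))  # True
print(within_tolerance(desired * (1 + 5e-7), desired))  # False

# float32 machine epsilon: relative spacing of float32 values near 1.0
print(np.finfo(np.float32).eps)
```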

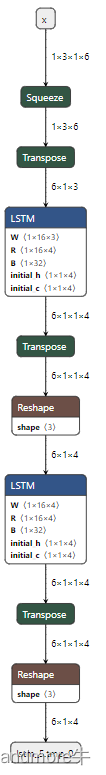

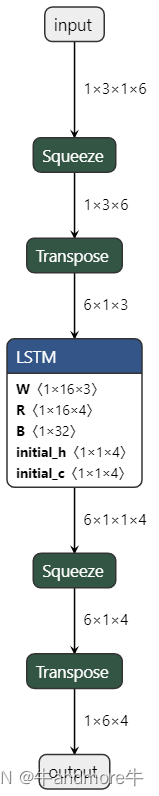

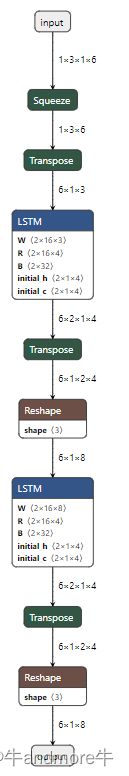

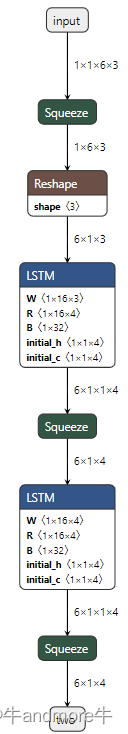

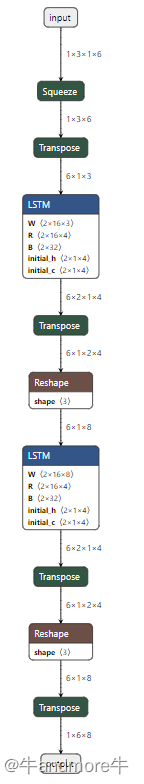

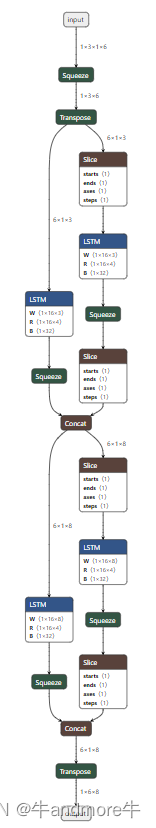

A look at the ONNX graphs:

| Type | One layer | Two layers | Two layers, bidirectional |
|---|---|---|---|
| batch_first=True |  |  |  |
| batch_first=False |  |  |  |

Here I am using TensorFlow 2.8.

import os

import tensorflow as tf

import onnx

import tf2onnx

from onnxsim import simplify

import onnxruntime

import numpy as np

from tensorflow.keras import layers as nn

#only use cpu

devices = tf.config.list_physical_devices("CPU")

tf.config.set_visible_devices(devices)

2022-08-09 16:10:42.419611: I tensorflow/core/util/util.cc:169] oneDNN custom operations are on. You may see slightly different numerical results due to floating-point round-off errors from different computation orders. To turn them off, set the environment variable `TF_ENABLE_ONEDNN_OPTS=0`.

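Paddle and PyTorch return the output of every time step by default. TensorFlow instead lets you choose between returning only the last step or all steps, controlled by `return_sequences`. To keep the outputs consistent, it is set to True.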
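With `return_sequences=True`, a Keras LSTM returns one output per time step, with shape `(batch, timesteps, units)`. With `False` (the Keras default), it returns only the last step. For a unidirectional LSTM without masking, that last step equals the final slice of the full sequence. Here is a numpy sketch with a stand-in array:

```python
import numpy as np

batch, timesteps, units = 1, 6, 4
# Stand-in for an LSTM output with return_sequences=True: one vector per time step
all_steps = np.random.rand(batch, timesteps, units).astype(np.float32)

# return_sequences=False would keep only the final step's output
last_step = all_steps[:, -1, :]
print(all_steps.shape, last_step.shape)   # (1, 6, 4) (1, 4)
```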

In TensorFlow the input layout is B, H, W, C (channels last), and the models below are built on that basis.
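The random test tensor used for the PyTorch models is NCHW, `(1, 3, 1, 6)`. The Keras models here take `(batch, height, width, channel)`, which is `(1, 1, 6, 3)`. Below is a numpy sketch of moving the axes, followed by the `squeeze(axis=1)` that the models apply to get `(batch, time, features)`:

```python
import numpy as np

# Same kind of test tensor as in the PyTorch section: (batch, channel, height, width)
test_data = np.random.random((1, 3, 1, 6)).astype(np.float32)

# The Keras models take channels-last input: (batch, height, width, channel)
nhwc = np.transpose(test_data, (0, 2, 3, 1))
print(nhwc.shape)       # (1, 1, 6, 3)

# Squeezing the height axis (axis=1, as the models do) leaves (batch, time, features)
seq = np.squeeze(nhwc, axis=1)
print(seq.shape)        # (1, 6, 3)
```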

def One_LSTM_batch():
    input = nn.Input(shape=[1,6,3],batch_size=1,name="input")   # (1,1,6,3): B,H,W,C
    middle = tf.squeeze(input,axis=1)                            # -> (1,6,3): batch, time, features
    output = nn.LSTM(4,time_major=False,return_sequences=True,name='one')(middle)
    model = tf.keras.models.Model(input,output,name="One_LSTM_batch")
    return model

model = One_LSTM_batch()

#tf.keras.utils.plot_model(model,to_file=f'tensorflow/{model.name}.png',show_shapes=True,show_layer_names=True,show_dtype=True)

model.save("tensorflow/One_LSTM_batch")

spec = (tf.TensorSpec((1,1,6,3),tf.float32,name="input"),)

output_path="tensorflow/"+model.name+'.onnx'

model_proto,_=tf2onnx.convert.from_keras(model,input_signature=spec,opset=11,output_path=output_path)

output_names=[n.name for n in model_proto.graph.output]

model = onnx.load(output_path)

model_sim ,check = simplify(model)

assert check,"simplified onnx model could not be validated"

save_path = os.path.splitext(output_path)[0]+"_sim.onnx"

onnx.save(model_sim,save_path)
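`str.split('.')[0]` and `os.path.splitext(...)[0]` only give the same stem when the path contains a single dot. Here is a quick comparison using a path that starts with `./`:

```python
import os

output_path = "./tensorflow/One_LSTM_batch.onnx"   # a relative path with a leading "./"

naive = output_path.split(".")[0] + "_sim.onnx"          # cuts at the first dot anywhere
robust = os.path.splitext(output_path)[0] + "_sim.onnx"  # strips only the final extension

print(repr(naive))    # '_sim.onnx'
print(repr(robust))   # './tensorflow/One_LSTM_batch_sim.onnx'
```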

def Two_LSTM_batch():