1. The code

```python
import tensorflow as tf  # TensorFlow 1.x API
from tensorflow.examples.tutorials.mnist import input_data

# Load the data.
# one_hot=True encodes each label as a 10-dimensional one-hot vector
# (e.g. digit 3 -> [0, 0, 0, 1, 0, 0, 0, 0, 0, 0]).
mnist = input_data.read_data_sets("MNIST_data", one_hot=True)

# Batch size (number of training images fed per step)
batch_size = 100
# Number of batches per epoch
num_batch = mnist.train.num_examples // batch_size


def variable_summaries(var):
    """Attach mean/stddev/max/min scalars and a histogram of `var` for TensorBoard."""
    with tf.name_scope('summaries'):
        mean = tf.reduce_mean(var)
        tf.summary.scalar('mean', mean)  # mean
        with tf.name_scope('stddev'):
            stddev = tf.sqrt(tf.reduce_mean(tf.square(var - mean)))
        tf.summary.scalar('stddev', stddev)  # standard deviation
        tf.summary.scalar('max', tf.reduce_max(var))
        tf.summary.scalar('min', tf.reduce_min(var))
        tf.summary.histogram('histogram', var)  # histogram


with tf.name_scope('input'):
    # Placeholders for the flattened 28x28 images and the one-hot labels
    x = tf.placeholder(tf.float32, [None, 784], name='x-input')
    y = tf.placeholder(tf.float32, [None, 10], name='y-input')

with tf.name_scope('keep_prob_And_learning_rate'):
    keep_prob = tf.placeholder(tf.float32, name='keep_prob')
    lr = tf.Variable(0.001, dtype=tf.float32, name='learning_rate')
    # Build the assign op once; calling tf.assign() inside the training loop
    # would add a new op to the graph on every epoch.
    new_lr = tf.placeholder(tf.float32, name='new_learning_rate')
    update_lr = tf.assign(lr, new_lr)
    tf.summary.scalar('learning_rate', lr)

with tf.name_scope('layer'):
    with tf.name_scope('net-one'):
        with tf.name_scope('weights-one'):
            w1 = tf.Variable(tf.truncated_normal([784, 1000], stddev=0.1), name='w1')
            variable_summaries(w1)
        with tf.name_scope('biases-one'):
            b1 = tf.Variable(tf.zeros([1000]) + 0.1, name='b1')
            variable_summaries(b1)
        with tf.name_scope('drop-one'):
            L1 = tf.nn.tanh(tf.matmul(x, w1) + b1)
            L1_drop = tf.nn.dropout(L1, keep_prob)

    with tf.name_scope('net-two'):
        with tf.name_scope('weights-two'):
            w2 = tf.Variable(tf.truncated_normal([1000, 500], stddev=0.1), name='w2')
            variable_summaries(w2)
        with tf.name_scope('biases-two'):
            b2 = tf.Variable(tf.zeros([500]) + 0.1, name='b2')
            variable_summaries(b2)
        with tf.name_scope('drop-two'):
            L2 = tf.nn.tanh(tf.matmul(L1_drop, w2) + b2)
            L2_drop = tf.nn.dropout(L2, keep_prob)

    with tf.name_scope('net-three'):
        with tf.name_scope('weights-three'):
            w3 = tf.Variable(tf.truncated_normal([500, 100], stddev=0.1), name='w3')
            variable_summaries(w3)
        with tf.name_scope('biases-three'):
            b3 = tf.Variable(tf.zeros([100]) + 0.1, name='b3')
            variable_summaries(b3)
        with tf.name_scope('drop-three'):
            L3 = tf.nn.tanh(tf.matmul(L2_drop, w3) + b3)
            L3_drop = tf.nn.dropout(L3, keep_prob)

    with tf.name_scope('net-four-output'):
        with tf.name_scope('weights-four-final'):
            w4 = tf.Variable(tf.truncated_normal([100, 10], stddev=0.1), name='w4')
            variable_summaries(w4)
        with tf.name_scope('biases-four-final'):
            b4 = tf.Variable(tf.zeros([10]) + 0.1, name='b4')
            variable_summaries(b4)
        with tf.name_scope('prediction'):
            # Keep the raw logits for the loss; softmax() turns them into probabilities
            logits = tf.matmul(L3_drop, w4) + b4
            prediction = tf.nn.softmax(logits)

with tf.name_scope('loss'):
    # loss = tf.reduce_mean(tf.square(y - prediction))  # quadratic cost
    # Cross-entropy cost. This op applies softmax internally, so it must be fed
    # the raw logits, not the softmax output (otherwise softmax is applied twice).
    loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits_v2(labels=y, logits=logits))
    tf.summary.scalar('loss', loss)

with tf.name_scope('train'):
    # train_step = tf.train.GradientDescentOptimizer(0.2).minimize(loss)
    # train_step = tf.train.AdadeltaOptimizer(learning_rate=0.2, rho=0.95).minimize(loss)
    train_step = tf.train.AdamOptimizer(lr).minimize(loss)

init = tf.global_variables_initializer()

with tf.name_scope("accuracy-total"):
    with tf.name_scope("correct_prediction"):
        # argmax() returns the index of the largest value along axis 1
        correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(prediction, 1))
    # Accuracy: cast() converts the booleans to float32 (True -> 1.0, False -> 0.0)
    with tf.name_scope("accuracy"):
        accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))
        tf.summary.scalar('accuracy', accuracy)

# Merge all summaries into a single op
merged = tf.summary.merge_all()

with tf.Session() as sess:
    sess.run(init)
    writer = tf.summary.FileWriter('logs/', sess.graph)
    for epoch in range(51):
        # Exponential decay lr = 0.001 * 0.95^epoch (tf.assign() is an assignment op)
        sess.run(update_lr, feed_dict={new_lr: 0.001 * (0.95 ** epoch)})
        for batch in range(num_batch):
            batch_xs, batch_ys = mnist.train.next_batch(batch_size)
            summary, _ = sess.run([merged, train_step],
                                  feed_dict={x: batch_xs, y: batch_ys, keep_prob: 0.9})
        # Log the summaries from the last batch of this epoch
        writer.add_summary(summary, epoch)
        test_acc = sess.run(accuracy, feed_dict={x: mnist.test.images, y: mnist.test.labels, keep_prob: 1.0})
        train_acc = sess.run(accuracy, feed_dict={x: mnist.train.images, y: mnist.train.labels, keep_prob: 1.0})
        learning_rate = sess.run(lr)
        print('iter ' + str(epoch) + ', testing accuracy: ' + str(test_acc)
              + ', training accuracy: ' + str(train_acc) + ', learning rate: ' + str(learning_rate))
    writer.close()
```
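`tf.nn.softmax_cross_entropy_with_logits_v2` applies softmax to its `logits` argument internally, so it expects raw scores; passing the output of `tf.nn.softmax` applies softmax twice. The pure-Python sketch below (no TensorFlow; the helper names `softmax` and `cross_entropy_with_logits` are hypothetical) mirrors the per-example computation to show the effect: with double softmax, a confident and correct prediction still gets a large loss, and with 10 classes the loss can never fall below log(e + 9) - 1, roughly 1.46.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)  # subtract the max so exp() cannot overflow
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy_with_logits(labels, logits):
    """Per-example loss: -sum(labels * log(softmax(logits)))."""
    probs = softmax(logits)
    return -sum(t * math.log(p) for t, p in zip(labels, probs))


labels = [0.0, 0.0, 1.0]
logits = [1.0, 2.0, 8.0]  # hypothetical raw scores, confidently correct

correct = cross_entropy_with_logits(labels, logits)           # softmax applied once
doubled = cross_entropy_with_logits(labels, softmax(logits))  # softmax applied twice
print(correct, doubled)  # the doubled loss stays large despite a correct prediction

# With 10 classes, even a perfect one-hot probability vector fed in as "logits"
# cannot push the double-softmax loss below log(e + 9) - 1.
one_hot = [0.0] * 9 + [1.0]
floor = cross_entropy_with_logits(one_hot, one_hot)
print(floor)
```

The floor explains why feeding probabilities as logits tends to slow training: the loss saturates well above zero and its gradients shrink.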

2. Understanding how dropout works

Reference: https://blog.csdn.net/program_developer/article/details/80737724
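As a minimal sketch of what `tf.nn.dropout(x, keep_prob)` does in TF 1.x ("inverted dropout"): each element is kept with probability `keep_prob` and scaled up by `1 / keep_prob`, otherwise set to 0, so the expected activation is unchanged and feeding `keep_prob: 1.0` at test time needs no extra rescaling. This is plain Python on a list, not TensorFlow's implementation; the function name `inverted_dropout` is hypothetical.

```python
import random


def inverted_dropout(activations, keep_prob, rng=random):
    """Inverted dropout on a list of activations (hypothetical helper).

    Each element is kept with probability keep_prob and scaled by 1 / keep_prob;
    otherwise it is set to 0.0, so the expected value of every element is unchanged.
    """
    if not 0.0 < keep_prob <= 1.0:
        raise ValueError("keep_prob must be in (0, 1]")
    if keep_prob == 1.0:
        return list(activations)  # e.g. at test time with keep_prob = 1.0: identity
    return [a / keep_prob if rng.random() < keep_prob else 0.0 for a in activations]


rng = random.Random(0)  # seeded so the run is reproducible
acts = [1.0] * 100_000
dropped = inverted_dropout(acts, 0.9, rng)

kept_fraction = sum(1 for v in dropped if v != 0.0) / len(dropped)
print(kept_fraction)                # close to 0.9
print(sum(dropped) / len(dropped))  # close to 1.0: the mean activation is preserved
```

Scaling at training time (rather than multiplying by `keep_prob` at test time) is what lets the script above evaluate with `keep_prob: 1.0` and no other change.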

3. Visualizing with TensorBoard

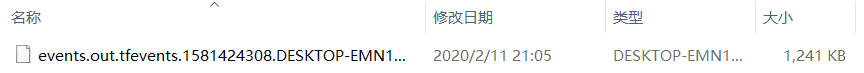

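The line `writer = tf.summary.FileWriter('logs/', sess.graph)` sets the directory where the logs are written. Under that directory you will find an event file whose name starts with `events.out.tfevents`.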

Open a terminal, change into that directory, and run `tensorboard --logdir=.\` (in my case the files are under `D:\PycharmProject\StudyDemo\logs`).

Open the address printed on the last line of the output (http:// … …) in Chrome to see the visualized summaries and the network structure.