| No. | Host | OS | Configuration | Notes |
|---|---|---|---|---|
| 1 | controller: 192.168.3.121 | Ubuntu 22.04 | Disk: 100G | |
| 2 | compute1: 192.168.3.122 | Ubuntu 22.04 | Disk: 100G | |
| 3 | compute2: 192.168.3.123 | Ubuntu 22.04 | Disk: 100G | |
| 4 | block1: 192.168.3.124 | Ubuntu 22.04 | sda: 50G, sdb: 20G, sdc: 20G | |
| 5 | object1: 192.168.3.126 | Ubuntu 22.04 | sda: 50G, sdb: 20G, sdc: 20G | |
| 6 | object2: 192.168.3.127 | Ubuntu 22.04 | sda: 50G, sdb: 20G, sdc: 20G | |

I. Base environment (all six hosts)

1. Disable the firewall and set kernel parameters

sudo -i

ufw disable

echo 'net.bridge.bridge-nf-call-ip6tables = 1' >> /etc/sysctl.d/99-sysctl.conf

echo 'net.bridge.bridge-nf-call-iptables = 1' >> /etc/sysctl.d/99-sysctl.conf

modprobe br_netfilter

sysctl --system
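Two caveats with the commands above: `>>` appends duplicate lines every time the step is re-run, and `modprobe br_netfilter` does not survive a reboot. A minimal sketch of an idempotent alternative to the two echo lines, assuming a dedicated drop-in file: `persist_bridge_netfilter`, `99-openstack-bridge.conf` and `br_netfilter.conf` are hypothetical names chosen here, and `SYSCTL_DIR` / `MODULES_DIR` exist only so the target directories can be overridden for testing.

```shell
# Hypothetical helper: persist the bridge netfilter settings idempotently.
# SYSCTL_DIR / MODULES_DIR default to the real system paths.
persist_bridge_netfilter() {
  sysctl_dir=${SYSCTL_DIR:-/etc/sysctl.d}
  modules_dir=${MODULES_DIR:-/etc/modules-load.d}
  mkdir -p "$sysctl_dir" "$modules_dir"
  # '>' overwrites, so running this twice leaves exactly one copy of each key.
  cat > "$sysctl_dir/99-openstack-bridge.conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
  # Ask systemd-modules-load to load br_netfilter again on every boot.
  echo br_netfilter > "$modules_dir/br_netfilter.conf"
}

# Usage (as root): persist_bridge_netfilter && modprobe br_netfilter && sysctl --system
```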

2. Edit /etc/hosts

echo '192.168.3.121 controller' >> /etc/hosts

echo '192.168.3.122 compute1' >> /etc/hosts

echo '192.168.3.123 compute2' >> /etc/hosts

echo '192.168.3.124 block1' >> /etc/hosts

echo '192.168.3.126 object1' >> /etc/hosts

echo '192.168.3.127 object2' >> /etc/hosts
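Running the echo lines twice leaves duplicate entries in /etc/hosts. A minimal sketch of an idempotent version, assuming entries are written exactly as "IP NAME" on one line (an existing entry written with tabs or extra aliases would not be detected); `add_host`, `add_all_hosts` and `HOSTS_FILE` are hypothetical names.

```shell
# Hypothetical helper: append "IP NAME" to a hosts file only if that exact
# line is not already present, so the step can be re-run safely.
add_host() {
  hosts_file=${HOSTS_FILE:-/etc/hosts}
  line="$1 $2"
  grep -qxF "$line" "$hosts_file" 2>/dev/null || echo "$line" >> "$hosts_file"
}

# The six nodes from the table at the top of this guide.
add_all_hosts() {
  add_host 192.168.3.121 controller
  add_host 192.168.3.122 compute1
  add_host 192.168.3.123 compute2
  add_host 192.168.3.124 block1
  add_host 192.168.3.126 object1
  add_host 192.168.3.127 object2
}

# Usage (as root, on each of the six hosts): add_all_hosts
```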

3. Install packages

apt update

apt install -y net-tools bash-completion python3-openstackclient wget chrony

II. OpenStack installation (run on controller unless another host is named)

1. Install the database

apt install mariadb-server python3-pymysql -y

cat > /etc/mysql/mariadb.conf.d/99-openstack.cnf << EOF

[mysqld]

bind-address = 192.168.3.121

default-storage-engine = innodb

innodb_file_per_table = on

max_connections = 4096

collation-server = utf8_general_ci

character-set-server = utf8

EOF

service mysql restart

mysql_secure_installation

netstat -antup | grep mariadb

2. Install RabbitMQ

apt install rabbitmq-server -y

rabbitmqctl add_user openstack password

rabbitmqctl set_user_tags openstack administrator

rabbitmqctl set_permissions openstack ".*" ".*" ".*"

rabbitmqctl list_user_permissions openstack

rabbitmq-plugins enable rabbitmq_management

netstat -antup | grep 5672

RabbitMQ management UI: http://192.168.3.121:15672 (log in as openstack / password)

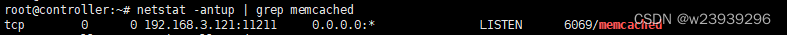

3. Install memcached

apt install memcached python3-memcache -y

sed -i 's/-l 127.0.0.1/-l 192.168.3.121/g' /etc/memcached.conf

service memcached restart

netstat -antup | grep memcached

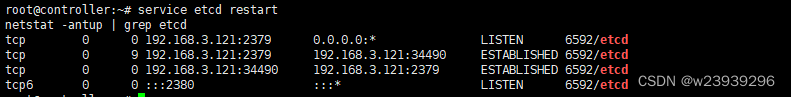

4. Install etcd

apt install etcd -y

cat > /etc/default/etcd << EOF

ETCD_NAME="controller"

ETCD_DATA_DIR="/var/lib/etcd"

ETCD_INITIAL_CLUSTER_STATE="new"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster-01"

ETCD_INITIAL_CLUSTER="controller=http://192.168.3.121:2380"

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.3.121:2380"

ETCD_ADVERTISE_CLIENT_URLS="http://192.168.3.121:2379"

ETCD_LISTEN_PEER_URLS="http://0.0.0.0:2380"

ETCD_LISTEN_CLIENT_URLS="http://192.168.3.121:2379"

EOF

service etcd restart

netstat -antup | grep etcd

5. Install keystone

mysql

CREATE DATABASE keystone;

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' IDENTIFIED BY 'password';

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' IDENTIFIED BY 'password';

FLUSH PRIVILEGES;

exit
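The same CREATE DATABASE / GRANT pattern repeats below for glance, placement, nova, neutron and cinder. A minimal sketch that prints those statements so they can be piped into `mysql` (run as root, as in this guide); `gen_db_sql` is a hypothetical helper, `IF NOT EXISTS` is added so a re-run does not fail on an existing database, and a password containing a single quote would need escaping.

```shell
# Hypothetical helper: print the CREATE DATABASE / GRANT statements used for
# each service in this guide. Usage: gen_db_sql USER PASSWORD DB [DB...]
gen_db_sql() {
  user=$1 pass=$2
  shift 2
  for db in "$@"; do
    echo "CREATE DATABASE IF NOT EXISTS $db;"
    echo "GRANT ALL PRIVILEGES ON $db.* TO '$user'@'localhost' IDENTIFIED BY '$pass';"
    echo "GRANT ALL PRIVILEGES ON $db.* TO '$user'@'%' IDENTIFIED BY '$pass';"
  done
  echo "FLUSH PRIVILEGES;"
}

# Usage:
#   gen_db_sql keystone password keystone | mysql
#   gen_db_sql nova password nova_api nova nova_cell0 | mysql
```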

apt install keystone -y

vim /etc/keystone/keystone.conf

Modify as follows:

[database]

connection = mysql+pymysql://keystone:password@controller/keystone

[token]

provider = fernet

su -s /bin/sh -c "keystone-manage db_sync" keystone

keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

keystone-manage bootstrap --bootstrap-password password \
--bootstrap-admin-url http://controller:5000/v3/ \
--bootstrap-internal-url http://controller:5000/v3/ \
--bootstrap-public-url http://controller:5000/v3/ \
--bootstrap-region-id RegionOne

echo 'ServerName controller' >> /etc/apache2/apache2.conf

service apache2 restart

export OS_USERNAME=admin

export OS_PASSWORD=password

export OS_PROJECT_NAME=admin

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_DOMAIN_NAME=Default

export OS_AUTH_URL=http://controller:5000/v3

export OS_IDENTITY_API_VERSION=3

openstack domain create --description "An Example Domain" example

openstack project create --domain default --description "Service Project" service

openstack project create --domain default --description "Demo Project" myproject

openstack user create --domain default --password-prompt myuser

openstack role create myrole

openstack role add --project myproject --user myuser myrole

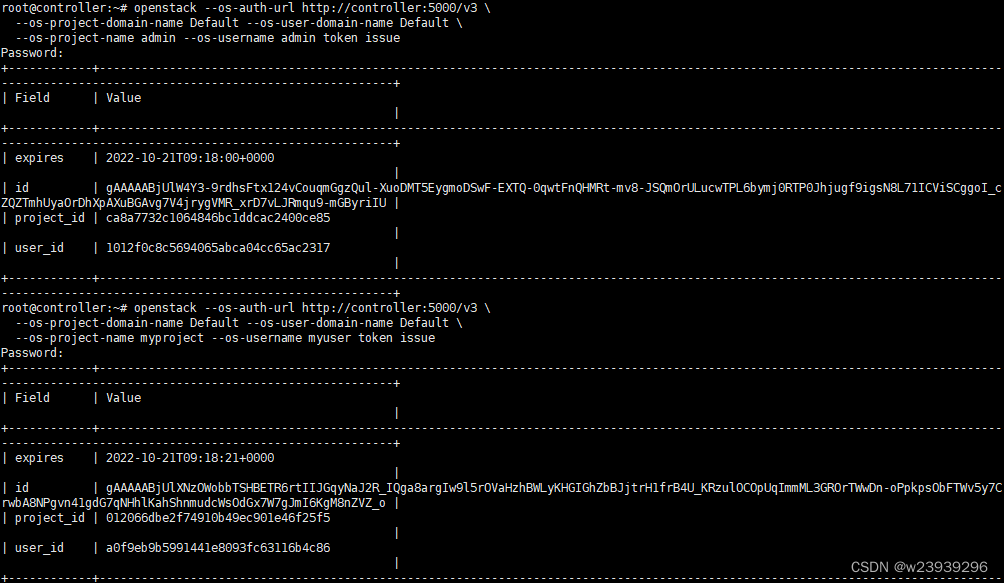

Verify

unset OS_AUTH_URL OS_PASSWORD

openstack --os-auth-url http://controller:5000/v3 \
--os-project-domain-name Default --os-user-domain-name Default \
--os-project-name admin --os-username admin token issue

openstack --os-auth-url http://controller:5000/v3 \
--os-project-domain-name Default --os-user-domain-name Default \
--os-project-name myproject --os-username myuser token issue

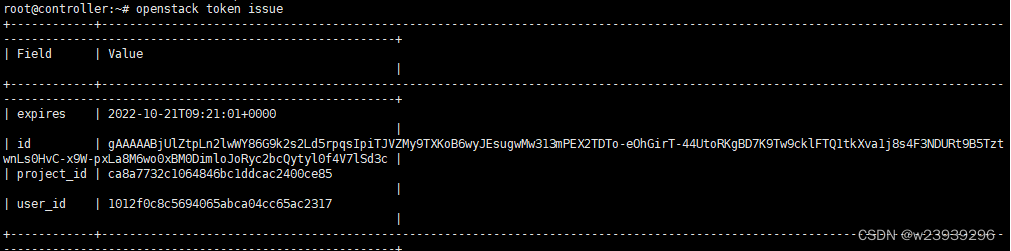

cat > admin-openrc << EOF

export OS_PROJECT_DOMAIN_NAME=Default

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_NAME=admin

export OS_USERNAME=admin

export OS_PASSWORD=password

export OS_AUTH_URL=http://controller:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

EOF

cat > myuser-openrc << EOF

export OS_PROJECT_DOMAIN_NAME=Default

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_NAME=myproject

export OS_USERNAME=myuser

export OS_PASSWORD=password

export OS_AUTH_URL=http://controller:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

EOF
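The two openrc files above differ only in project, user name and password. A minimal sketch that renders either one from arguments, assuming the same controller URL and Default domain as above; `make_openrc` is a hypothetical helper. Because the files hold plaintext passwords, restricting them with `chmod 600` is a sensible extra step.

```shell
# Hypothetical helper: print an openrc file for USER in PROJECT.
# Usage: make_openrc USER PROJECT PASSWORD > FILE
make_openrc() {
  cat <<EOF
export OS_PROJECT_DOMAIN_NAME=Default
export OS_USER_DOMAIN_NAME=Default
export OS_PROJECT_NAME=$2
export OS_USERNAME=$1
export OS_PASSWORD=$3
export OS_AUTH_URL=http://controller:5000/v3
export OS_IDENTITY_API_VERSION=3
export OS_IMAGE_API_VERSION=2
EOF
}

# Usage:
#   make_openrc admin admin password > admin-openrc
#   make_openrc myuser myproject password > myuser-openrc
#   chmod 600 admin-openrc myuser-openrc
```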

source admin-openrc

openstack token issue

6. Install glance

mysql

CREATE DATABASE glance;

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' IDENTIFIED BY 'password';

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY 'password';

FLUSH PRIVILEGES;

exit

. admin-openrc

openstack user create --domain default --password-prompt glance

openstack role add --project service --user glance admin

openstack service create --name glance --description "OpenStack Image" image

openstack endpoint create --region RegionOne image public http://controller:9292

openstack endpoint create --region RegionOne image internal http://controller:9292

openstack endpoint create --region RegionOne image admin http://controller:9292
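Image, placement, compute and network each get the same three endpoints (public, internal, admin) with one URL. A minimal sketch of a loop for services whose three endpoints share a URL (it does not fit swift, whose admin URL differs); `create_endpoints` is a hypothetical helper, and setting `OPENSTACK=echo` prints the commands instead of running them.

```shell
# Hypothetical helper: create the public, internal and admin endpoints of a
# service in RegionOne with one URL. Set OPENSTACK=echo for a dry run.
create_endpoints() {
  service_type=$1 url=$2
  for iface in public internal admin; do
    ${OPENSTACK:-openstack} endpoint create --region RegionOne \
      "$service_type" "$iface" "$url" || return 1
  done
}

# Usage (after ". admin-openrc"):
#   create_endpoints image http://controller:9292
#   create_endpoints placement http://controller:8778
#   create_endpoints compute http://controller:8774/v2.1
#   create_endpoints network http://controller:9696
```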

apt install glance -y

vim /etc/glance/glance-api.conf

Modify as follows:

[database]

connection = mysql+pymysql://glance:password@controller/glance

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = glance

password = password

[paste_deploy]

flavor = keystone

[glance_store]

stores = file,http

default_store = file

filesystem_store_datadir = /var/lib/glance/images/

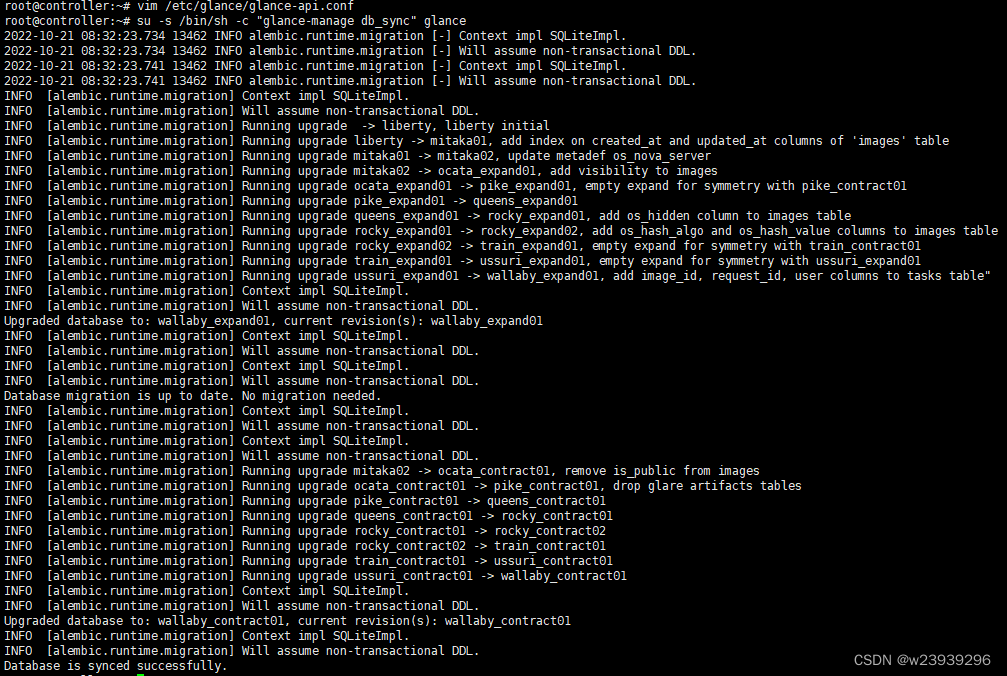
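A typo in these INI files usually only surfaces later as a service error. A small read-only check, assuming only `awk` is available, prints the value of a key in a given section so you can confirm an edit took effect; `ini_get` is a hypothetical helper, it skips `#`/`;` comment lines, and if a section or key appears more than once the last value wins.

```shell
# Hypothetical helper: print the value of KEY in [SECTION] of an INI FILE.
# Exits 1 if the key is not found. Usage: ini_get FILE SECTION KEY
ini_get() {
  awk -v sec="$2" -v key="$3" '
    { line = $0; sub(/^[ \t]+/, "", line); sub(/[ \t\r]+$/, "", line) }
    substr(line, 1, 1) == "[" {
      # Section header: take the text between "[" and "]".
      cur = substr(line, 2, index(line, "]") - 2)
      next
    }
    cur == sec && line !~ /^[#;]/ && index(line, "=") > 0 {
      n = index(line, "=")
      k = substr(line, 1, n - 1); sub(/[ \t]+$/, "", k)
      if (k == key) {
        v = substr(line, n + 1); sub(/^[ \t]+/, "", v)
        val = v; found = 1
      }
    }
    END { if (found) print val; else exit 1 }
  ' "$1"
}

# Usage: ini_get /etc/glance/glance-api.conf glance_store default_store
```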

su -s /bin/sh -c "glance-manage db_sync" glance

service glance-api restart

Verify

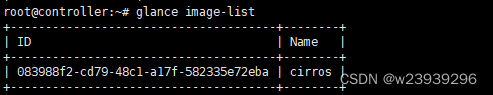

. admin-openrc

wget http://download.cirros-cloud.net/0.4.0/cirros-0.4.0-x86_64-disk.img

glance image-create --name "cirros" --file cirros-0.4.0-x86_64-disk.img \
--disk-format qcow2 --container-format bare --visibility=public

glance image-list

7. Install placement

mysql

CREATE DATABASE placement;

GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'localhost' IDENTIFIED BY 'password';

GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'%' IDENTIFIED BY 'password';

FLUSH PRIVILEGES;

exit

. admin-openrc

openstack user create --domain default --password-prompt placement

openstack role add --project service --user placement admin

openstack service create --name placement --description "Placement API" placement

openstack endpoint create --region RegionOne placement public http://controller:8778

openstack endpoint create --region RegionOne placement internal http://controller:8778

openstack endpoint create --region RegionOne placement admin http://controller:8778

apt install placement-api -y

vim /etc/placement/placement.conf

Add the following:

[placement_database]

connection = mysql+pymysql://placement:password@controller/placement

[api]

auth_strategy = keystone

[keystone_authtoken]

auth_url = http://controller:5000/v3

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = placement

password = password

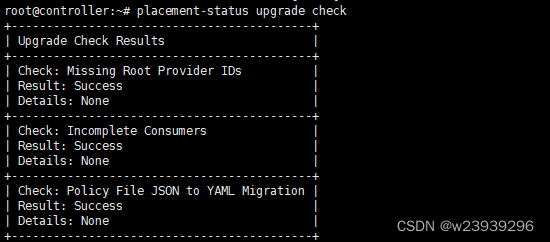

su -s /bin/sh -c "placement-manage db sync" placement

service apache2 restart

Verify

. admin-openrc

placement-status upgrade check

8. Install nova

controller

mysql

CREATE DATABASE nova_api;

CREATE DATABASE nova;

CREATE DATABASE nova_cell0;

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' IDENTIFIED BY 'password';

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY 'password';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' IDENTIFIED BY 'password';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY 'password';

GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' IDENTIFIED BY 'password';

GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY 'password';

FLUSH PRIVILEGES;

exit

. admin-openrc

openstack user create --domain default --password-prompt nova

openstack role add --project service --user nova admin

openstack service create --name nova --description "OpenStack Compute" compute

openstack endpoint create --region RegionOne compute public http://controller:8774/v2.1

openstack endpoint create --region RegionOne compute internal http://controller:8774/v2.1

openstack endpoint create --region RegionOne compute admin http://controller:8774/v2.1

apt install nova-api nova-conductor nova-novncproxy nova-scheduler -y

vim /etc/nova/nova.conf

Add the following:

[DEFAULT]

transport_url = rabbit://openstack:password@controller:5672/

my_ip = 192.168.3.121

[api_database]

connection = mysql+pymysql://nova:password@controller/nova_api

[database]

connection = mysql+pymysql://nova:password@controller/nova

[api]

auth_strategy = keystone

[keystone_authtoken]

www_authenticate_uri = http://controller:5000/

auth_url = http://controller:5000/

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = password

[vnc]

enabled = true

server_listen = $my_ip

server_proxyclient_address = $my_ip

[glance]

api_servers = http://controller:9292

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

[placement]

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:5000/v3

username = placement

password = password

[scheduler]

discover_hosts_in_cells_interval = 300

su -s /bin/sh -c "nova-manage api_db sync" nova

su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova

su -s /bin/sh -c "nova-manage db sync" nova

su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova

service nova-api restart

service nova-scheduler restart

service nova-conductor restart

service nova-novncproxy restart

compute1

apt install nova-compute -y

vim /etc/nova/nova.conf

[DEFAULT]

transport_url = rabbit://openstack:password@controller

my_ip = 192.168.3.122

[api]

auth_strategy = keystone

[keystone_authtoken]

www_authenticate_uri = http://controller:5000/

auth_url = http://controller:5000/

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = password

[vnc]

enabled = true

server_listen = 0.0.0.0

server_proxyclient_address = $my_ip

novncproxy_base_url = http://controller:6080/vnc_auto.html

[glance]

api_servers = http://controller:9292

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

[placement]

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:5000/v3

username = placement

password = password

service nova-compute restart

compute2

apt install nova-compute -y

vim /etc/nova/nova.conf

Add the following:

[DEFAULT]

transport_url = rabbit://openstack:password@controller

my_ip = 192.168.3.123

[api]

auth_strategy = keystone

[keystone_authtoken]

www_authenticate_uri = http://controller:5000/

auth_url = http://controller:5000/

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = password

[vnc]

enabled = true

server_listen = 0.0.0.0

server_proxyclient_address = $my_ip

novncproxy_base_url = http://controller:6080/vnc_auto.html

[glance]

api_servers = http://controller:9292

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

[placement]

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:5000/v3

username = placement

password = password

service nova-compute restart
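The steps above do not set `[libvirt] virt_type`. If compute1/compute2 are themselves VMs without nested virtualization, the upstream install guide recommends checking `egrep -c '(vmx|svm)' /proc/cpuinfo`; if it returns 0, set `virt_type = qemu` in the `[libvirt]` section of /etc/nova/nova-compute.conf and restart nova-compute. A minimal sketch of that check; `suggest_virt_type` is a hypothetical helper that only prints a suggestion and changes nothing.

```shell
# Suggest a libvirt virt_type from the CPU flags: without a vmx (Intel VT-x)
# or svm (AMD-V) flag, KVM acceleration is unavailable and qemu is needed.
suggest_virt_type() {
  cpuinfo=${1:-/proc/cpuinfo}
  if grep -Eq 'vmx|svm' "$cpuinfo"; then
    echo kvm
  else
    echo qemu
  fi
}

# Usage (on compute1 and compute2): suggest_virt_type
```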

controller

. admin-openrc

openstack compute service list --service nova-compute

su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

Verify

openstack compute service list

openstack catalog list

openstack image list

nova-status upgrade check

9. Install neutron

controller

mysql

CREATE DATABASE neutron;

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY 'password';

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY 'password';

FLUSH PRIVILEGES;

exit

. admin-openrc

openstack user create --domain default --password-prompt neutron

openstack role add --project service --user neutron admin

openstack service create --name neutron --description "OpenStack Networking" network

openstack endpoint create --region RegionOne network public http://controller:9696

openstack endpoint create --region RegionOne network internal http://controller:9696

openstack endpoint create --region RegionOne network admin http://controller:9696

apt install neutron-server neutron-plugin-ml2 \
neutron-linuxbridge-agent neutron-l3-agent neutron-dhcp-agent \
neutron-metadata-agent -y

vim /etc/neutron/neutron.conf

[DEFAULT]

core_plugin = ml2

service_plugins = router

allow_overlapping_ips = true

transport_url = rabbit://openstack:password@controller

auth_strategy = keystone

notify_nova_on_port_status_changes = true

notify_nova_on_port_data_changes = true

[database]

connection = mysql+pymysql://neutron:password@controller/neutron

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = password

[nova]

auth_url = http://controller:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = nova

password = password

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

vim /etc/neutron/plugins/ml2/ml2_conf.ini

Add the following:

[ml2]

type_drivers = flat,vlan,vxlan

tenant_network_types = vxlan

mechanism_drivers = linuxbridge,l2population

extension_drivers = port_security

[ml2_type_flat]

flat_networks = provider

[ml2_type_vxlan]

vni_ranges = 1:1000

[securitygroup]

enable_ipset = true

vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini

Add the following:

[linux_bridge]

physical_interface_mappings = provider:ens33

[vxlan]

enable_vxlan = true

local_ip = 192.168.3.121

l2_population = true

[securitygroup]

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver
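The linuxbridge_agent.ini sections are repeated on controller, compute1 and compute2, and differ only in the VXLAN local_ip (ens33 is the author's NIC name; `ip -br link` shows the real one on each host). A minimal sketch that prints those sections for one node, so they can be compared with or merged into the packaged file rather than overwriting it blindly; `render_linuxbridge_ini` is a hypothetical helper.

```shell
# Hypothetical helper: print the linuxbridge_agent.ini sections used in this
# guide. Only the provider interface and the VXLAN local_ip differ per node.
# Usage: render_linuxbridge_ini ens33 192.168.3.122
render_linuxbridge_ini() {
  cat <<EOF
[linux_bridge]
physical_interface_mappings = provider:$1

[vxlan]
enable_vxlan = true
local_ip = $2
l2_population = true

[securitygroup]
enable_security_group = true
firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver
EOF
}
```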

vim /etc/neutron/l3_agent.ini

Add the following:

[DEFAULT]

interface_driver = linuxbridge

vim /etc/neutron/dhcp_agent.ini

Add the following:

[DEFAULT]

interface_driver = linuxbridge

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

enable_isolated_metadata = true

vim /etc/neutron/metadata_agent.ini

Add the following:

[DEFAULT]

nova_metadata_host = controller

metadata_proxy_shared_secret = password

vim /etc/nova/nova.conf

Add the following:

[neutron]

auth_url = http://controller:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = password

service_metadata_proxy = true

metadata_proxy_shared_secret = password

su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf \
--config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

service nova-api restart

service neutron-server restart

service neutron-linuxbridge-agent restart

service neutron-dhcp-agent restart

service neutron-metadata-agent restart

service neutron-l3-agent restart

compute1

apt install neutron-linuxbridge-agent -y

vim /etc/neutron/neutron.conf

Add the following:

[DEFAULT]

transport_url = rabbit://openstack:password@controller

auth_strategy = keystone

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = password

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini

Add the following:

[linux_bridge]

physical_interface_mappings = provider:ens33

[vxlan]

enable_vxlan = true

local_ip = 192.168.3.122

l2_population = true

[securitygroup]

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

vim /etc/nova/nova.conf

Add the following:

[neutron]

auth_url = http://controller:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = password

service nova-compute restart

service neutron-linuxbridge-agent restart

compute2

apt install neutron-linuxbridge-agent -y

vim /etc/neutron/neutron.conf

Add the following:

[DEFAULT]

transport_url = rabbit://openstack:password@controller

auth_strategy = keystone

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = password

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini

Add the following:

[linux_bridge]

physical_interface_mappings = provider:ens33

[vxlan]

enable_vxlan = true

local_ip = 192.168.3.123

l2_population = true

[securitygroup]

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

vim /etc/nova/nova.conf

Add the following:

[neutron]

auth_url = http://controller:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = password

service nova-compute restart

service neutron-linuxbridge-agent restart

Verify

controller

. admin-openrc

openstack network agent list

10. Install the dashboard

apt install openstack-dashboard -y

vim /etc/openstack-dashboard/local_settings.py

Change OPENSTACK_HOST = "127.0.0.1" to OPENSTACK_HOST = "192.168.3.121"

Change

CACHES = {
    'default': {
        'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',
        'LOCATION': '127.0.0.1:11211',
    }
}

to

CACHES = {
    'default': {
        'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',
        'LOCATION': 'controller:11211',
    }
}

Change TIME_ZONE = "TIME_ZONE" to TIME_ZONE = "Asia/Shanghai"

Add or set the following:

OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True

OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = "Default"

OPENSTACK_KEYSTONE_DEFAULT_ROLE = "user"

SESSION_ENGINE = 'django.contrib.sessions.backends.cache'

OPENSTACK_API_VERSIONS = {

"identity": 3,

"image": 2,

"volume": 3,

}

systemctl reload apache2.service

service apache2 restart

http://192.168.3.121/horizon

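Note: if horizon reports an error, edit

vim /etc/openstack-dashboard/local_settings.py

and change OPENSTACK_KEYSTONE_URL = “http://%s:5000” % OPENSTACK_HOST (with curly quotes) to

OPENSTACK_KEYSTONE_URL = "http://%s:5000" % OPENSTACK_HOST

using plain ASCII double quotes; curly quotes are not valid Python syntax.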

11. Create an instance

source admin-openrc

openstack flavor create --id 0 --vcpus 1 --ram 64 --disk 1 m1.nano

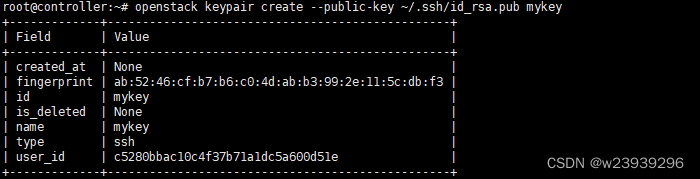

. myuser-openrc

ssh-keygen -q -N ""

openstack keypair create --public-key ~/.ssh/id_rsa.pub mykey

source admin-openrc

openstack network create --share --external \
--provider-physical-network provider \
--provider-network-type flat provider

openstack subnet create --network provider \
--allocation-pool start=192.168.3.230,end=192.168.3.248 \
--dns-nameserver 192.168.3.1 --gateway 192.168.3.1 \
--subnet-range 192.168.3.0/24 provider

source myuser-openrc

openstack security group rule create --proto icmp default

openstack security group rule create --proto tcp --dst-port 22 default

openstack network create selfservice

openstack subnet create --network selfservice \
--dns-nameserver 192.168.3.1 --gateway 192.168.10.1 \
--subnet-range 192.168.10.0/24 selfservice

openstack router create router

openstack router add subnet router selfservice

openstack router set router --external-gateway provider

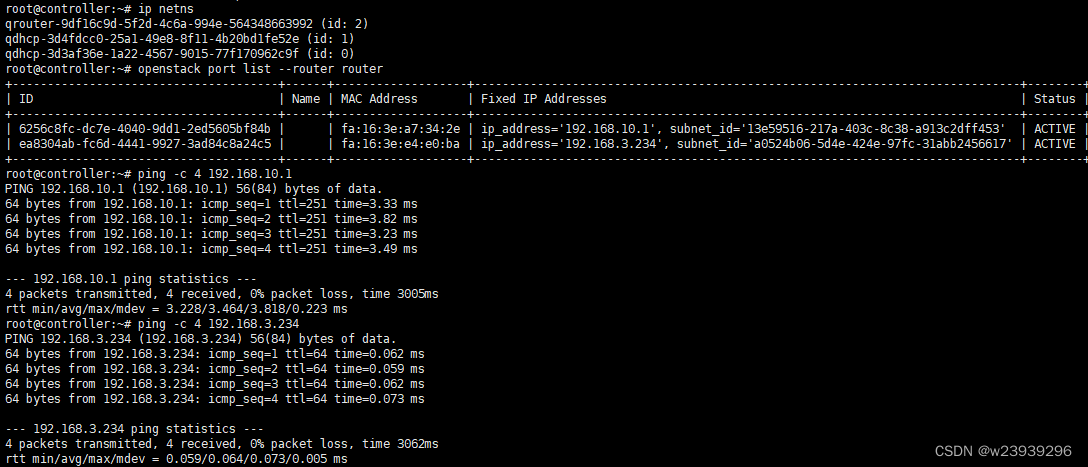

Check:

source admin-openrc

ip netns

openstack port list --router router

source myuser-openrc

openstack flavor list

openstack image list

openstack network list

openstack security group list

openstack keypair list

Create the instance (net-id is the ID of the selfservice network, as shown by openstack network list):

openstack server create --flavor m1.nano --image cirros \
--nic net-id=3d4fdcc0-25a1-49e8-8f11-4b20bd1fe52e --security-group default \
--key-name mykey selfservice-instance

openstack server list
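The net-id UUID above belongs to the author's deployment and will differ on yours. A minimal sketch that looks it up by name with `openstack network show selfservice -f value -c id` before creating the server, assuming the selfservice network name is unique in myproject; `launch_selfservice_instance` is a hypothetical helper, run after `. myuser-openrc`.

```shell
# Look up the selfservice network ID by name instead of pasting the UUID.
launch_selfservice_instance() {
  net_id=$(openstack network show selfservice -f value -c id) || return 1
  openstack server create --flavor m1.nano --image cirros \
    --nic net-id="$net_id" --security-group default \
    --key-name mykey selfservice-instance
}
```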

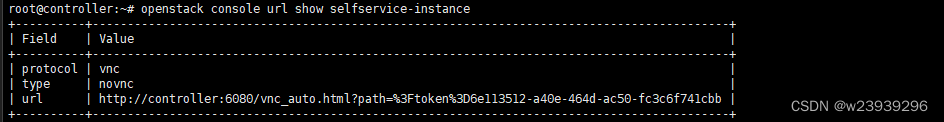

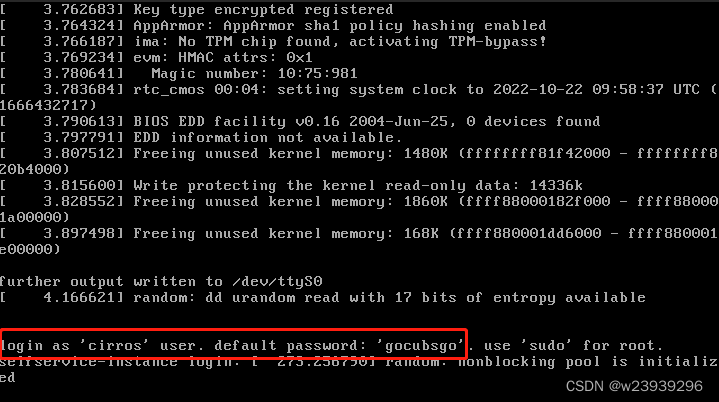

Check the cirros login password on the instance console:

openstack console url show selfservice-instance

Example URL returned (the token differs on every run):

http://192.168.3.121:6080/vnc_auto.html?path=%3Ftoken%3D6e113512-a40e-464d-ac50-fc3c6f741cbb

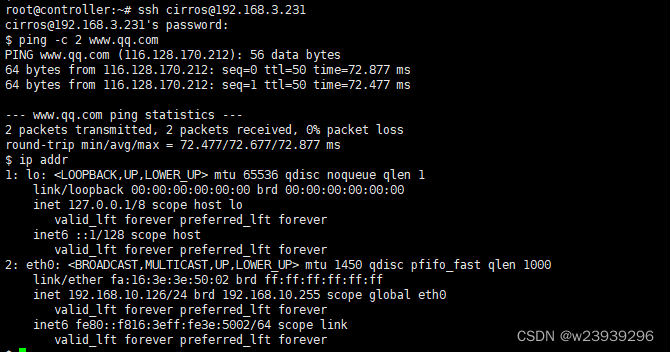

openstack floating ip create provider

openstack server add floating ip selfservice-instance 192.168.3.231

ssh cirros@192.168.3.231

http://192.168.3.121/horizon

Log in as myuser (domain: Default).

12. Install cinder

controller

mysql

CREATE DATABASE cinder;

GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'localhost' IDENTIFIED BY 'password';

GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'%' IDENTIFIED BY 'password';

FLUSH PRIVILEGES;

exit

. admin-openrc

openstack user create --domain default --password-prompt cinder

openstack role add --project service --user cinder admin

openstack service create --name cinderv3 --description "OpenStack Block Storage" volumev3

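openstack endpoint create --region RegionOne \
volumev3 public http://controller:8776/v3/%\(project_id\)s

openstack endpoint create --region RegionOne \
volumev3 internal http://controller:8776/v3/%\(project_id\)s

openstack endpoint create --region RegionOne \
volumev3 admin http://controller:8776/v3/%\(project_id\)s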

apt install cinder-api cinder-scheduler -y

vim /etc/cinder/cinder.conf

Add the following:

[database]

connection = mysql+pymysql://cinder:password@controller/cinder

[DEFAULT]

transport_url = rabbit://openstack:password@controller

auth_strategy = keystone

my_ip = 192.168.3.121

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = cinder

password = password

[oslo_concurrency]

lock_path = /var/lib/cinder/tmp

vim /etc/nova/nova.conf

Add the following:

[cinder]

os_region_name = RegionOne

su -s /bin/sh -c "cinder-manage db sync" cinder

service nova-api restart

service cinder-scheduler restart

service apache2 restart

block1

apt install lvm2 thin-provisioning-tools -y

pvcreate /dev/sdb

vgcreate cinder-volumes /dev/sdb

apt install cinder-volume tgt -y

vim /etc/cinder/cinder.conf

Add the following:

[database]

connection = mysql+pymysql://cinder:password@controller/cinder

[DEFAULT]

transport_url = rabbit://openstack:password@controller

auth_strategy = keystone

my_ip = 192.168.3.124

enabled_backends = lvm

glance_api_servers = http://controller:9292

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = cinder

password = password

[lvm]

volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver

volume_group = cinder-volumes

target_protocol = iscsi

target_helper = tgtadm

[oslo_concurrency]

lock_path = /var/lib/cinder/tmp

vim /etc/lvm/lvm.conf

Add the following:

devices {

filter = [ "a/sda/", "a/sdb/", "r/.*/"]

}
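The filter accepts ("a/.../") only the listed devices and rejects ("r/.*/") everything else; each entry is a regular expression matched against device paths. Per the upstream guide, sdb is needed because it backs cinder-volumes, and the OS disk (sda) only needs to be listed if it also uses LVM. A minimal sketch that builds the same line for any device list; `lvm_filter` is a hypothetical helper.

```shell
# Hypothetical helper: build the lvm.conf filter line that accepts only the
# listed devices and rejects every other block device.
lvm_filter() {
  line='filter = ['
  for dev in "$@"; do
    line="$line \"a/$dev/\","
  done
  echo "$line \"r/.*/\"]"
}

# Usage: lvm_filter sda sdb   # same line as in the devices { } block above
```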

service tgt restart

service cinder-volume restart

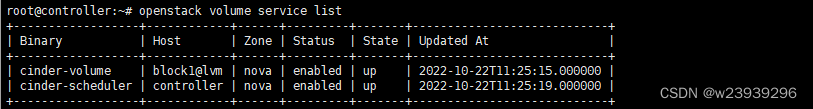

Verify:

. admin-openrc

openstack volume service list

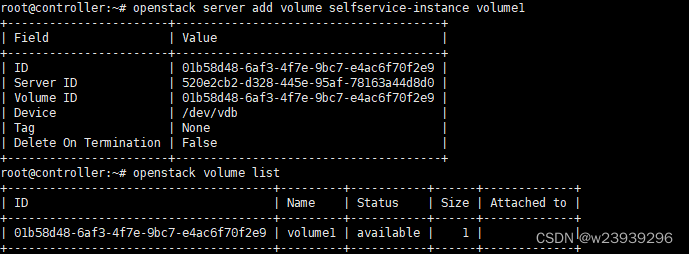

. myuser-openrc

openstack volume create --size 1 volume1

openstack server add volume selfservice-instance volume1

openstack volume list

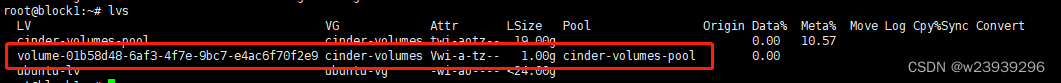

block1

lvs

13. Install swift

source admin-openrc

openstack user create --domain default --password-prompt swift

openstack role add --project service --user swift admin

openstack service create --name swift \
--description "OpenStack Object Storage" object-store

openstack endpoint create --region RegionOne \
object-store public http://controller:8080/v1/AUTH_%\(project_id\)s

openstack endpoint create --region RegionOne \
object-store internal http://controller:8080/v1/AUTH_%\(project_id\)s

openstack endpoint create --region RegionOne \
object-store admin http://controller:8080/v1

apt-get install swift swift-proxy python3-swiftclient \
python3-keystoneclient python3-keystonemiddleware memcached -y

curl -o /etc/swift/proxy-server.conf https://opendev.org/openstack/swift/raw/branch/master/etc/proxy-server.conf-sample

vim /etc/swift/proxy-server.conf

Modify the following:

[DEFAULT]

bind_port = 8080

user = swift

swift_dir = /etc/swift

[pipeline:main]

pipeline = catch_errors gatekeeper healthcheck proxy-logging cache container_sync bulk ratelimit authtoken keystoneauth container-quotas account-quotas slo dlo versioned_writes proxy-logging proxy-server

[app:proxy-server]

use = egg:swift#proxy

account_autocreate = True

[filter:keystoneauth]

use = egg:swift#keystoneauth

operator_roles = admin,user

[filter:authtoken]

paste.filter_factory = keystonemiddleware.auth_token:filter_factory

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_id = default

user_domain_id = default

project_name = service

username = swift

password = password

delay_auth_decision = True

[filter:cache]

use = egg:swift#memcache

memcache_servers = controller:11211

object1 object2

apt-get install xfsprogs rsync -y

mkfs.xfs /dev/sdb

mkfs.xfs /dev/sdc

mkdir -p /srv/node/sdb

mkdir -p /srv/node/sdc

blkid

echo 'UUID="0330faea-35a7-4eac-8284-134e98f0e4a1" /srv/node/sdb xfs noatime 0 2' >> /etc/fstab

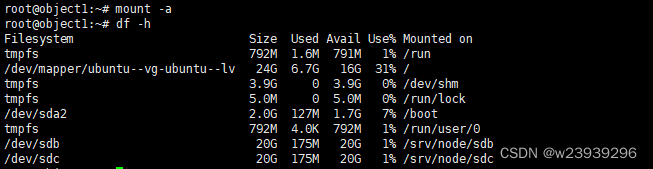

echo 'UUID="d5d99fb1-eb35-4d76-9efb-bb74c4249ba6" /srv/node/sdc xfs noatime 0 2' >> /etc/fstab

mount -a

df -h
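The UUIDs in the fstab lines above come from the author's blkid output and must be replaced with your own. A minimal sketch that looks each UUID up with `blkid -s UUID -o value` and appends the entry only if it is not already there; `add_swift_fstab_entry` and the `FSTAB` override are hypothetical names.

```shell
# Append an fstab entry for /srv/node/DEV by UUID, looked up with blkid.
# Skips the append if the exact line is already present.
add_swift_fstab_entry() {
  dev=$1
  fstab=${FSTAB:-/etc/fstab}
  uuid=$(blkid -s UUID -o value "/dev/$dev") || return 1
  [ -n "$uuid" ] || return 1
  line="UUID=\"$uuid\" /srv/node/$dev xfs noatime 0 2"
  grep -qxF "$line" "$fstab" || echo "$line" >> "$fstab"
}

# Usage (on object1 and object2, after mkfs.xfs):
#   for d in sdb sdc; do add_swift_fstab_entry "$d"; done; mount -a
```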

object1

cat > /etc/rsyncd.conf << EOF

uid = swift

gid = swift

log file = /var/log/rsyncd.log

pid file = /var/run/rsyncd.pid

address = 192.168.3.126

[account]

max connections = 2

path = /srv/node/

read only = False

lock file = /var/lock/account.lock

[container]

max connections = 2

path = /srv/node/

read only = False

lock file = /var/lock/container.lock

[object]

max connections = 2

path = /srv/node/

read only = False

lock file = /var/lock/object.lock

EOF

sed -i 's/RSYNC_ENABLE=false/RSYNC_ENABLE=true/g' /etc/default/rsync

service rsync start

service rsync status

object2

cat > /etc/rsyncd.conf << EOF

uid = swift

gid = swift

log file = /var/log/rsyncd.log

pid file = /var/run/rsyncd.pid

address = 192.168.3.127

[account]

max connections = 2

path = /srv/node/

read only = False

lock file = /var/lock/account.lock

[container]

max connections = 2

path = /srv/node/

read only = False

lock file = /var/lock/container.lock

[object]

max connections = 2

path = /srv/node/

read only = False

lock file = /var/lock/object.lock

EOF

sed -i 's/RSYNC_ENABLE=false/RSYNC_ENABLE=true/g' /etc/default/rsync

service rsync start

service rsync status
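The rsyncd.conf files on object1 and object2 differ only in the address line. A minimal sketch that renders the file for one node, with the three modules generated from one template; `render_rsyncd_conf` is a hypothetical helper.

```shell
# Hypothetical helper: print the rsyncd.conf used on object1/object2; only
# the listen address differs.
# Usage: render_rsyncd_conf 192.168.3.126 > /etc/rsyncd.conf
render_rsyncd_conf() {
  cat <<EOF
uid = swift
gid = swift
log file = /var/log/rsyncd.log
pid file = /var/run/rsyncd.pid
address = $1
EOF
  # One module per swift ring, each with its own lock file.
  for module in account container object; do
    cat <<EOF

[$module]
max connections = 2
path = /srv/node/
read only = False
lock file = /var/lock/$module.lock
EOF
  done
}
```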

object1 object2

apt-get install swift swift-account swift-container swift-object -y

curl -o /etc/swift/account-server.conf https://opendev.org/openstack/swift/raw/branch/master/etc/account-server.conf-sample

curl -o /etc/swift/container-server.conf https://opendev.org/openstack/swift/raw/branch/master/etc/container-server.conf-sample

curl -o /etc/swift/object-server.conf https://opendev.org/openstack/swift/raw/branch/master/etc/object-server.conf-sample

object1

vim /etc/swift/account-server.conf

Add the following:

[DEFAULT]

bind_ip = 192.168.3.126

bind_port = 6202

user = swift

swift_dir = /etc/swift

devices = /srv/node

mount_check = True

[pipeline:main]

pipeline = healthcheck recon account-server

[filter:recon]

use = egg:swift#recon

recon_cache_path = /var/cache/swift

vim /etc/swift/container-server.conf

Add the following:

[DEFAULT]

bind_ip = 192.168.3.126

bind_port = 6201

user = swift

swift_dir = /etc/swift

devices = /srv/node

mount_check = True

[pipeline:main]

pipeline = healthcheck recon container-server

[filter:recon]

use = egg:swift#recon

recon_cache_path = /var/cache/swift

vim /etc/swift/object-server.conf

Add the following:

[DEFAULT]

bind_ip = 192.168.3.126

bind_port = 6200

user = swift

swift_dir = /etc/swift

devices = /srv/node

mount_check = True

[pipeline:main]

pipeline = healthcheck recon object-server

[filter:recon]

use = egg:swift#recon

recon_cache_path = /var/cache/swift

recon_lock_path = /var/lock

chown -R swift:swift /srv/node

mkdir -p /var/cache/swift

chown -R root:swift /var/cache/swift

chmod -R 775 /var/cache/swift

object2

vim /etc/swift/account-server.conf

Add the following:

[DEFAULT]

bind_ip = 192.168.3.127

bind_port = 6202

user = swift

swift_dir = /etc/swift

devices = /srv/node

mount_check = True

[pipeline:main]

pipeline = healthcheck recon account-server

[filter:recon]

use = egg:swift#recon

recon_cache_path = /var/cache/swift

vim /etc/swift/container-server.conf

Add the following:

[DEFAULT]

bind_ip = 192.168.3.127

bind_port = 6201

user = swift

swift_dir = /etc/swift

devices = /srv/node

mount_check = True

[pipeline:main]

pipeline = healthcheck recon container-server

[filter:recon]

use = egg:swift#recon

recon_cache_path = /var/cache/swift

vim /etc/swift/object-server.conf

Add the following:

[DEFAULT]

bind_ip = 192.168.3.127

bind_port = 6200

user = swift

swift_dir = /etc/swift

devices = /srv/node

mount_check = True

[pipeline:main]

pipeline = healthcheck recon object-server

[filter:recon]

use = egg:swift#recon

recon_cache_path = /var/cache/swift

recon_lock_path = /var/lock

chown -R swift:swift /srv/node

mkdir -p /var/cache/swift

chown -R root:swift /var/cache/swift

chmod -R 775 /var/cache/swift

controller

cd /etc/swift

swift-ring-builder account.builder create 10 3 1

swift-ring-builder account.builder add \
--region 1 --zone 1 --ip 192.168.3.126 --port 6202 --device sdb --weight 100

swift-ring-builder account.builder add \
--region 1 --zone 1 --ip 192.168.3.126 --port 6202 --device sdc --weight 100

swift-ring-builder account.builder add \
--region 1 --zone 2 --ip 192.168.3.127 --port 6202 --device sdb --weight 100

swift-ring-builder account.builder add \
--region 1 --zone 2 --ip 192.168.3.127 --port 6202 --device sdc --weight 100

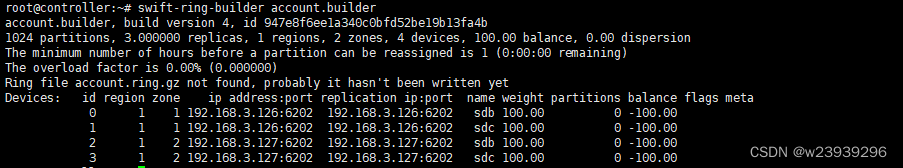

swift-ring-builder account.builder

swift-ring-builder account.builder rebalance

cd /etc/swift

swift-ring-builder container.builder create 10 3 1

swift-ring-builder container.builder add \
--region 1 --zone 1 --ip 192.168.3.126 --port 6201 --device sdb --weight 100

swift-ring-builder container.builder add \
--region 1 --zone 1 --ip 192.168.3.126 --port 6201 --device sdc --weight 100

swift-ring-builder container.builder add \
--region 1 --zone 2 --ip 192.168.3.127 --port 6201 --device sdb --weight 100

swift-ring-builder container.builder add \
--region 1 --zone 2 --ip 192.168.3.127 --port 6201 --device sdc --weight 100

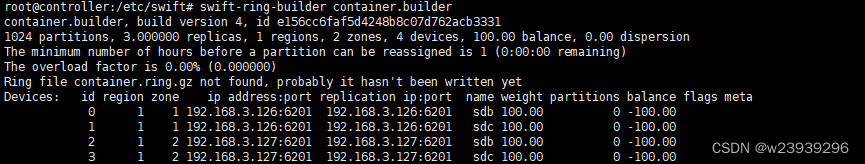

swift-ring-builder container.builder

swift-ring-builder container.builder rebalance

cd /etc/swift

swift-ring-builder object.builder create 10 3 1

swift-ring-builder object.builder add \
--region 1 --zone 1 --ip 192.168.3.126 --port 6200 --device sdb --weight 100

swift-ring-builder object.builder add \
--region 1 --zone 1 --ip 192.168.3.126 --port 6200 --device sdc --weight 100

swift-ring-builder object.builder add \
--region 1 --zone 2 --ip 192.168.3.127 --port 6200 --device sdb --weight 100

swift-ring-builder object.builder add \
--region 1 --zone 2 --ip 192.168.3.127 --port 6200 --device sdc --weight 100

swift-ring-builder object.builder

swift-ring-builder object.builder rebalance
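The twelve `add` commands above differ only in ring name, port, zone/IP and device. The dry-run sketch below prints all of them from one nested loop, assuming the same layout as this guide (zone 1 = object1 at 192.168.3.126, zone 2 = object2 at 192.168.3.127, devices sdb and sdc, weight 100). `print_ring_add_commands` is a hypothetical helper name.

```shell
#!/bin/sh
# Dry-run sketch: print the swift-ring-builder "add" commands for all
# three rings. Nothing is executed; the output is plain text.
# Assumed layout (from this guide): object1 = zone 1, object2 = zone 2,
# devices sdb and sdc, weight 100.
print_ring_add_commands() {
    # ring:port pairs used above: account=6202, container=6201, object=6200
    for pair in account:6202 container:6201 object:6200; do
        ring=${pair%%:*}
        port=${pair##*:}
        zone=1
        for ip in 192.168.3.126 192.168.3.127; do
            for dev in sdb sdc; do
                echo "swift-ring-builder $ring.builder add --region 1 --zone $zone --ip $ip --port $port --device $dev --weight 100"
            done
            zone=$((zone + 1))   # next storage node goes into the next zone
        done
    done
}

print_ring_add_commands
```

After the three `create` commands, the output could be reviewed and then piped to `sh` from /etc/swift on the controller.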

scp account.ring.gz container.ring.gz object.ring.gz object1:/etc/swift

scp account.ring.gz container.ring.gz object.ring.gz object2:/etc/swift

curl -o /etc/swift/swift.conf \
https://opendev.org/openstack/swift/raw/branch/master/etc/swift.conf-sample

vim /etc/swift/swift.conf

Modify as follows:

[swift-hash]

...

swift_hash_path_suffix = password

swift_hash_path_prefix = password
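The guide sets both hash values to `password`. The comments in `swift.conf-sample` describe them as secret values that must not change once the cluster is deployed, and they must be identical on every node (which is why swift.conf is copied to object1 and object2 next). A minimal sketch for generating random values instead, assuming `/dev/urandom`, `head -c` and `od` are available (they are on Ubuntu 22.04):

```shell
#!/bin/sh
# Sketch: generate random values for swift_hash_path_prefix/_suffix.
# Reads 16 random bytes and prints them as 32 lowercase hex characters.
random_hex() {
    head -c 16 /dev/urandom | od -An -tx1 | tr -d ' \n'
}

prefix=$(random_hex)
suffix=$(random_hex)

# Paste these two lines into the [swift-hash] section of swift.conf.
echo "swift_hash_path_prefix = $prefix"
echo "swift_hash_path_suffix = $suffix"
```

Generate the values once on the controller and copy the resulting swift.conf to the storage nodes; do not regenerate them later.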

scp /etc/swift/swift.conf object1:/etc/swift

scp /etc/swift/swift.conf object2:/etc/swift

chown -R root:swift /etc/swift

service memcached restart

service swift-proxy restart

object1 object2

curl -o /etc/swift/internal-client.conf https://opendev.org/openstack/swift/raw/branch/master/etc/internal-client.conf-sample

chown -R root:swift /etc/swift

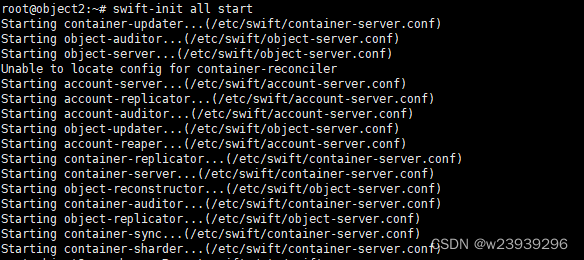

swift-init all start

service swift-account start

service swift-account-auditor start

service swift-account-reaper start

service swift-account-replicator start

service swift-container start

service swift-container-auditor start

service swift-container-reconciler start

service swift-container-replicator start

service swift-container-sharder start

service swift-container-sync start

service swift-container-updater start

service swift-object start

service swift-object-auditor start

service swift-object-reconstructor start

service swift-object-replicator start

service swift-object-updater start
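The sixteen `service ... start` lines above can be driven from a single list. A sketch, assuming the same service names as above; `start_swift_services` and the `DRY_RUN` switch are hypothetical additions, and by default the loop only prints what it would run:

```shell
#!/bin/sh
# Sketch: start every Swift storage service from one list.
# DRY_RUN=1 (default) prints the commands; DRY_RUN=0 runs them.
DRY_RUN=${DRY_RUN:-1}

SWIFT_SERVICES="account account-auditor account-reaper account-replicator
container container-auditor container-reconciler container-replicator
container-sharder container-sync container-updater
object object-auditor object-reconstructor object-replicator object-updater"

start_swift_services() {
    for svc in $SWIFT_SERVICES; do   # word splitting on spaces/newlines
        if [ "$DRY_RUN" = 1 ]; then
            echo "service swift-$svc start"
        else
            service "swift-$svc" start
        fi
    done
}

start_swift_services
```

On object1 and object2, running it as `DRY_RUN=0 sh script.sh` would perform the real starts.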

Verify

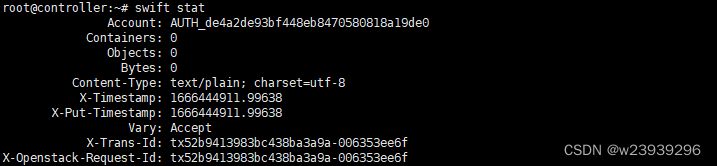

source admin-openrc

swift stat

Upload and download a file

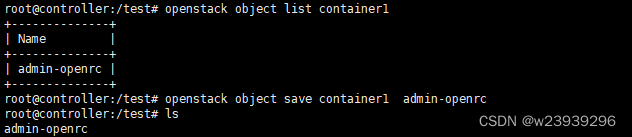

openstack container create container1

openstack object create container1 admin-openrc

mkdir /test

cd /test/

openstack object list container1

openstack object save container1 admin-openrc

ls
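To confirm that the object saved into /test is byte-for-byte identical to the uploaded file, a small comparison helper can be used. `same_file` is a hypothetical name, and the usage below assumes admin-openrc was uploaded from /root (the directory `sudo -i` lands in); adjust the path if it was uploaded from elsewhere.

```shell
#!/bin/sh
# Sketch: compare the uploaded file with the downloaded copy.
# cmp -s is silent and exits 0 when the files are identical.
same_file() {
    if cmp -s "$1" "$2"; then
        echo "OK: $2 matches $1"
        return 0
    else
        echo "MISMATCH: $2 differs from $1"
        return 1
    fi
}
```

Usage: `same_file /root/admin-openrc /test/admin-openrc`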

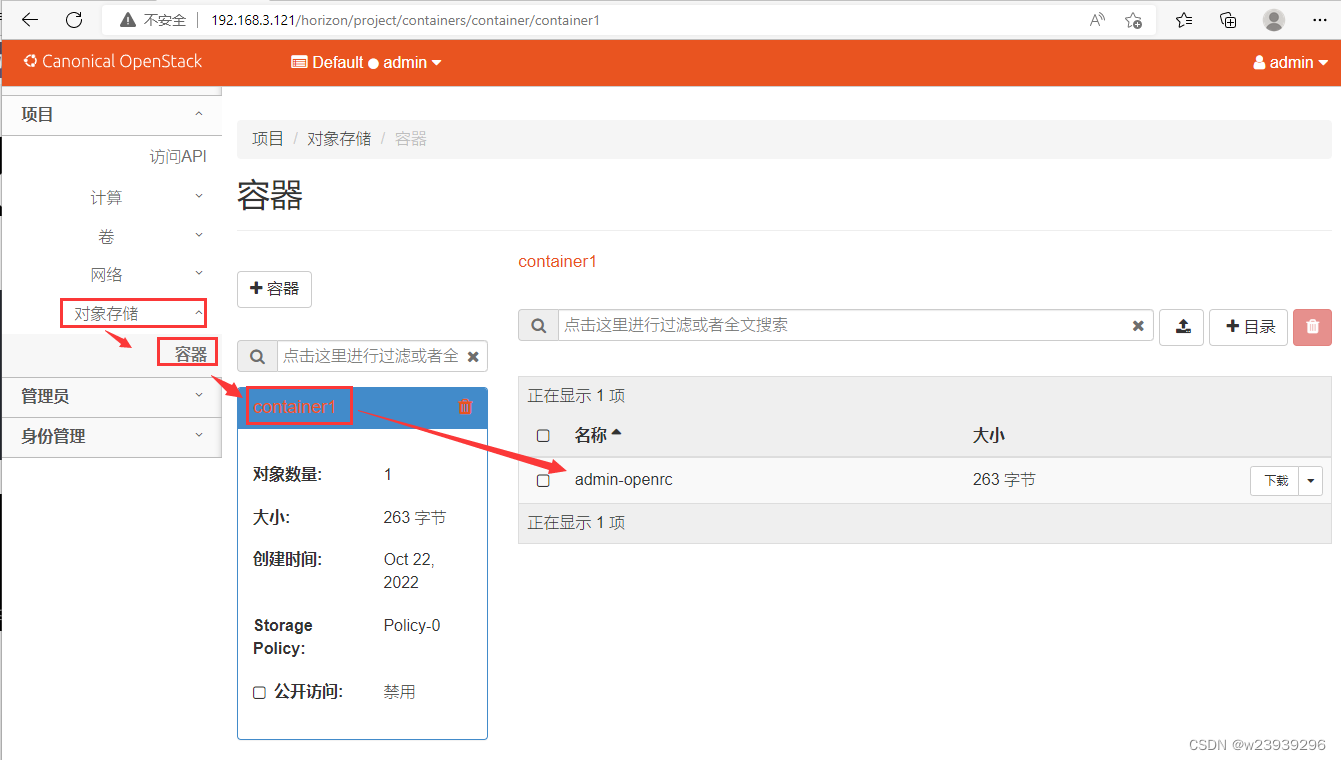

http://192.168.3.121/horizon

Log in as admin and check the container.

14. Install Heat

mysql

CREATE DATABASE heat;

GRANT ALL PRIVILEGES ON heat.* TO 'heat'@'localhost' IDENTIFIED BY 'password';

GRANT ALL PRIVILEGES ON heat.* TO 'heat'@'%' IDENTIFIED BY 'password';

FLUSH PRIVILEGES;

exit
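The same SQL statements are repeated for heat, manila and gnocchi. A sketch of a helper that prints them for any service name; `service_db_sql` is a hypothetical name, and `IF NOT EXISTS` is an addition so that re-running it does not fail on an existing database:

```shell
#!/bin/sh
# Sketch: print the database and grant statements for one OpenStack
# service. Usage: service_db_sql <name> <password> | mysql
service_db_sql() {
    name=$1
    pass=$2
    cat <<EOF
CREATE DATABASE IF NOT EXISTS $name;
GRANT ALL PRIVILEGES ON $name.* TO '$name'@'localhost' IDENTIFIED BY '$pass';
GRANT ALL PRIVILEGES ON $name.* TO '$name'@'%' IDENTIFIED BY '$pass';
FLUSH PRIVILEGES;
EOF
}

service_db_sql heat password
```

On the controller, `service_db_sql heat password | mysql` would replace the interactive session above.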

source admin-openrc

openstack user create --domain default --password-prompt heat

openstack role add --project service --user heat admin

openstack service create --name heat --description "Orchestration" orchestration

openstack service create --name heat-cfn --description "Orchestration" cloudformation

openstack endpoint create --region RegionOne \
orchestration public http://controller:8004/v1/%\(tenant_id\)s

openstack endpoint create --region RegionOne \
orchestration internal http://controller:8004/v1/%\(tenant_id\)s

openstack endpoint create --region RegionOne \
orchestration admin http://controller:8004/v1/%\(tenant_id\)s

openstack endpoint create --region RegionOne \
cloudformation public http://controller:8000/v1

openstack endpoint create --region RegionOne \
cloudformation internal http://controller:8000/v1

openstack endpoint create --region RegionOne \
cloudformation admin http://controller:8000/v1
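The public, internal and admin endpoints differ only in the interface name, so they can be generated in a loop. A sketch with a hypothetical helper `endpoint_commands`; it only prints the commands, quoting the URL with single quotes so the `\(` escapes are not needed. The output could be piped to `sh` on the controller after `source admin-openrc`.

```shell
#!/bin/sh
# Sketch: print the three "openstack endpoint create" commands for one
# service type and URL. Nothing is executed.
endpoint_commands() {
    svc_type=$1   # e.g. orchestration, cloudformation, sharev2, metric
    url=$2        # emitted inside single quotes
    for iface in public internal admin; do
        echo "openstack endpoint create --region RegionOne $svc_type $iface '$url'"
    done
}

endpoint_commands orchestration 'http://controller:8004/v1/%(tenant_id)s'
endpoint_commands cloudformation 'http://controller:8000/v1'
```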

openstack domain create --description "Stack projects and users" heat

openstack user create --domain heat --password-prompt heat_domain_admin

openstack role add --domain heat --user-domain heat --user heat_domain_admin admin

openstack role create heat_stack_owner

openstack role add --project myproject --user myuser heat_stack_owner

openstack role create heat_stack_user

apt-get install heat-api heat-api-cfn heat-engine -y

vim /etc/heat/heat.conf

Add the following:

[DEFAULT]

transport_url = rabbit://openstack:password@controller

heat_metadata_server_url = http://controller:8000

heat_waitcondition_server_url = http://controller:8000/v1/waitcondition

stack_domain_admin = heat_domain_admin

stack_domain_admin_password = password

stack_user_domain_name = heat

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = heat

password = password

[database]

connection = mysql+pymysql://heat:password@controller/heat

[trustee]

auth_type = password

auth_url = http://controller:5000

username = heat

password = password

user_domain_name = default

[clients_keystone]

auth_uri = http://controller:5000

su -s /bin/sh -c "heat-manage db_sync" heat

service heat-api restart

service heat-api-cfn restart

service heat-engine restart

Verify

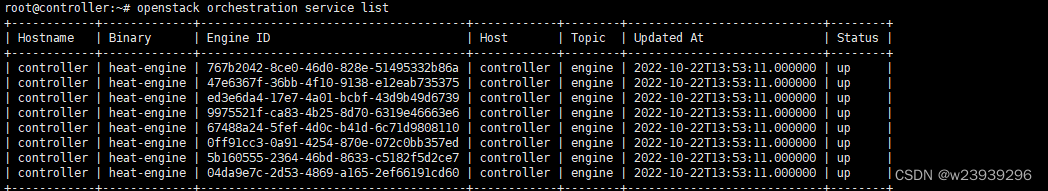

. admin-openrc

openstack orchestration service list

Create an instance from a template with the Orchestration service

cat > mytemp.yml << 'EOF'
heat_template_version: 2021-04-16
description: Launch a basic instance with CirrOS image using the
  ``m1.nano`` flavor, ``mykey`` key, and one network.

parameters:
  NetID:
    type: string
    description: Network ID to use for the instance.

resources:
  server:
    type: OS::Nova::Server
    properties:
      image: cirros
      flavor: m1.nano
      key_name: mykey
      networks:
        - network: { get_param: NetID }

outputs:
  instance_name:
    description: Name of the instance.
    value: { get_attr: [ server, name ] }
  instance_ip:
    description: IP address of the instance.
    value: { get_attr: [ server, first_address ] }
EOF

. myuser-openrc

openstack network list

export NET_ID=$(openstack network list | awk '/ selfservice / { print $2 }')
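The awk filter works because the table is split on whitespace: `$1` is the leading `|`, `$2` is the ID and `$4` is the name. The sketch below runs the same filter over a hypothetical, shortened sample of `openstack network list` output; the IDs are placeholders, not real values.

```shell
#!/bin/sh
# Sketch: show what the awk expression extracts. The table is a
# hypothetical sample; real IDs and subnets will differ.
sample_table='+--------------------------------------+-------------+--------------------------------------+
| ID                                   | Name        | Subnets                              |
+--------------------------------------+-------------+--------------------------------------+
| 11111111-1111-1111-1111-111111111111 | provider    | 22222222-2222-2222-2222-222222222222 |
| 33333333-3333-3333-3333-333333333333 | selfservice | 44444444-4444-4444-4444-444444444444 |
+--------------------------------------+-------------+--------------------------------------+'

# " selfservice " (with spaces) only matches the Name column of that row.
NET_ID=$(printf '%s\n' "$sample_table" | awk '/ selfservice / { print $2 }')
echo "$NET_ID"
```

An alternative that avoids parsing the table is `openstack network show selfservice -f value -c id`.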

openstack stack create -t mytemp.yml --parameter "NetID=$NET_ID" stack

openstack stack list

openstack stack output show --all stack

openstack server list

Delete the stack

openstack stack delete --yes stack

15. Install Manila

controller

mysql

CREATE DATABASE manila;

GRANT ALL PRIVILEGES ON manila.* TO 'manila'@'localhost' IDENTIFIED BY 'password';

GRANT ALL PRIVILEGES ON manila.* TO 'manila'@'%' IDENTIFIED BY 'password';

exit

source admin-openrc

openstack user create --domain default --password-prompt manila

openstack role add --project service --user manila admin

openstack service create --name manila \
--description "OpenStack Shared File Systems" share

openstack service create --name manilav2 \
--description "OpenStack Shared File Systems V2" sharev2

openstack endpoint create --region RegionOne \
share public http://controller:8786/v1/%\(tenant_id\)s

openstack endpoint create --region RegionOne \
share internal http://controller:8786/v1/%\(tenant_id\)s

openstack endpoint create --region RegionOne \
share admin http://controller:8786/v1/%\(tenant_id\)s

openstack endpoint create --region RegionOne \
sharev2 public http://controller:8786/v2

openstack endpoint create --region RegionOne \
sharev2 internal http://controller:8786/v2

openstack endpoint create --region RegionOne \
sharev2 admin http://controller:8786/v2

apt-get install manila-api manila-scheduler python3-manilaclient -y

vim /etc/manila/manila.conf

Add the following:

[DEFAULT]

transport_url = rabbit://openstack:password@controller

default_share_type = default_share_type

share_name_template = share-%s

rootwrap_config = /etc/manila/rootwrap.conf

api_paste_config = /etc/manila/api-paste.ini

auth_strategy = keystone

my_ip = 192.168.3.121

[database]

connection = mysql+pymysql://manila:password@controller/manila

[keystone_authtoken]

memcached_servers = controller:11211

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = manila

password = password

[oslo_concurrency]

lock_path = /var/lock/manila

su -s /bin/sh -c "manila-manage db sync" manila

service manila-scheduler restart

service manila-api restart

rm -f /var/lib/manila/manila.sqlite

block1

apt-get install manila-share python3-pymysql -y

vim /etc/manila/manila.conf

Add the following:

[database]

connection = mysql+pymysql://manila:password@controller/manila

[DEFAULT]

transport_url = rabbit://openstack:password@controller

default_share_type = default_share_type

rootwrap_config = /etc/manila/rootwrap.conf

auth_strategy = keystone

my_ip = 192.168.3.124

[keystone_authtoken]

memcached_servers = controller:11211

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = manila

password = password

[oslo_concurrency]

lock_path = /var/lib/manila/tmp

apt-get install lvm2 nfs-kernel-server -y

vim /etc/lvm/lvm.conf

First comment out the existing filter line (otherwise LVM rejects /dev/sdc):

filter = [ "a/sda/", "a/sdb/", "r/.*/"]

pvcreate /dev/sdc

vgcreate manila-volumes /dev/sdc

vim /etc/lvm/lvm.conf

Then change it to:

filter = [ "a/sda/", "a/sdb/", "a/sdc/", "r/.*/"]
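Every accept pattern needs both `/` delimiters, followed by a final reject-everything rule. A sketch of a hypothetical helper `lvm_filter` that assembles the line from a device list; the resulting line belongs inside the `devices { }` section of /etc/lvm/lvm.conf.

```shell
#!/bin/sh
# Sketch: build an lvm.conf filter line that accepts the listed devices
# and rejects everything else.
lvm_filter() {
    line='filter = ['
    for dev in "$@"; do
        line="$line \"a/$dev/\","   # accept pattern with both delimiters
    done
    echo "$line \"r/.*/\" ]"         # reject all other devices
}

lvm_filter sda sdb sdc
```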

vim /etc/manila/manila.conf

Add the following:

[DEFAULT]

enabled_share_backends = lvm

enabled_share_protocols = NFS

[lvm]

share_backend_name = LVM

share_driver = manila.share.drivers.lvm.LVMShareDriver

driver_handles_share_servers = False

lvm_share_volume_group = manila-volumes

lvm_share_export_ips = 192.168.3.124

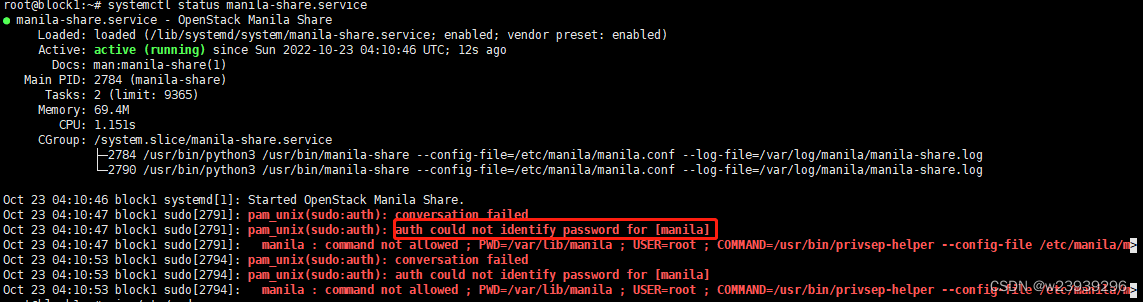

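If manila-share reports an error, edit the sudoers file:

vim /etc/sudoers.d/manila_sudoers

Change it to the following (this gives the manila user passwordless root for every command, so it is a lab workaround):

manila ALL = (root) NOPASSWD:ALL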

service manila-share restart

Verify:

controller

source admin-openrc

manila service-list

16. Install Ceilometer

mysql

CREATE DATABASE gnocchi;

GRANT ALL PRIVILEGES ON gnocchi.* TO 'gnocchi'@'localhost' IDENTIFIED BY 'password';

GRANT ALL PRIVILEGES ON gnocchi.* TO 'gnocchi'@'%' IDENTIFIED BY 'password';

exit

. admin-openrc

openstack user create --domain default --password-prompt ceilometer

openstack role add --project service --user ceilometer admin

openstack service create --name ceilometer --description "Telemetry" metering

openstack user create --domain default --password-prompt gnocchi

openstack service create --name gnocchi --description "Metric Service" metric

openstack role add --project service --user gnocchi admin

openstack endpoint create --region RegionOne \
metric public http://controller:8041

openstack endpoint create --region RegionOne \
metric internal http://controller:8041

openstack endpoint create --region RegionOne \
metric admin http://controller:8041

apt-get install gnocchi-api gnocchi-metricd python3-gnocchiclient -y

apt-get install uwsgi-plugin-python3 uwsgi -y

vim /etc/gnocchi/gnocchi.conf

Add the following:

[api]

auth_mode = keystone

port = 8041

uwsgi_mode = http-socket

[keystone_authtoken]

auth_type = password

auth_url = http://controller:5000/v3

project_domain_name = Default

user_domain_name = Default

project_name = service

username = gnocchi

password = password

interface = internalURL

region_name = RegionOne

[indexer]

url = mysql+pymysql://gnocchi:password@controller/gnocchi

gnocchi-upgrade

service gnocchi-api restart

service gnocchi-metricd restart

apt-get install ceilometer-agent-notification ceilometer-agent-central -y

vim /etc/ceilometer/ceilometer.conf

Add the following:

[DEFAULT]

transport_url = rabbit://openstack:password@controller

[service_credentials]

auth_type = password

auth_url = http://controller:5000/v3

project_domain_id = default

user_domain_id = default

project_name = service

username = ceilometer

password = password

interface = internalURL

region_name = RegionOne

ceilometer-upgrade

service ceilometer-agent-central restart

service ceilometer-agent-notification restart

vim /etc/glance/glance-api.conf

[DEFAULT]

transport_url = rabbit://openstack:password@controller

[oslo_messaging_notifications]

driver = messagingv2

service glance-api restart

vim /etc/heat/heat.conf

[oslo_messaging_notifications]

driver = messagingv2

service heat-api restart

service heat-api-cfn restart

service heat-engine restart

vim /etc/neutron/neutron.conf

[oslo_messaging_notifications]

driver = messagingv2

service neutron-server restart
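After editing glance-api.conf, heat.conf and neutron.conf (and nova.conf on the compute nodes), it is easy to put `driver = messagingv2` under the wrong section. A small awk-based reader can check it; `ini_get` is a hypothetical helper and deliberately simple: comment lines are skipped, the first match wins, and continuation lines are not supported.

```shell
#!/bin/sh
# Hypothetical helper: print the value of KEY inside [SECTION] of an
# INI-style file such as /etc/neutron/neutron.conf.
ini_get() {
    file=$1
    section=$2
    key=$3
    awk -v sec="[$section]" -v want="$key" '
        /^[ \t]*[#;]/ { next }                        # comment line
        /^[ \t]*\[/ { in_sec = ($1 == sec); next }    # section header
        in_sec && index($0, "=") > 0 {
            k = substr($0, 1, index($0, "=") - 1)
            gsub(/[ \t]/, "", k)                      # strip spaces around key
            if (k == want) {
                v = substr($0, index($0, "=") + 1)
                gsub(/^[ \t]+|[ \t]+$/, "", v)        # trim the value
                print v
                exit
            }
        }
    ' "$file"
}
```

Usage: `ini_get /etc/neutron/neutron.conf oslo_messaging_notifications driver` should print `messagingv2`; empty output means the key is missing from that section.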

compute1 compute2

apt-get install ceilometer-agent-compute -y

vim /etc/ceilometer/ceilometer.conf

Add the following:

[DEFAULT]

transport_url = rabbit://openstack:password@controller

[service_credentials]

auth_url = http://controller:5000

project_domain_id = default

user_domain_id = default

auth_type = password

username = ceilometer

project_name = service

password = password

interface = internalURL

region_name = RegionOne

vim /etc/nova/nova.conf

[DEFAULT]

instance_usage_audit = True

instance_usage_audit_period = hour

[notifications]

notify_on_state_change = vm_and_task_state

[oslo_messaging_notifications]

driver = messagingv2

service ceilometer-agent-compute restart

service nova-compute restart

Verify

Log in to http://192.168.3.121/horizon as myuser.

controller

source admin-openrc

gnocchi resource list --type image

gnocchi resource show fdac81ae-0785-41ad-b7df-12cae0db5970
