Data pipeline design

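Following the usual convention, we use Dataset and DataLoader to load data with multiple workers. Dataset returns a dict of data items that correspond to the arguments of the model's forward method. Data in object detection can differ in size from sample to sample (image size, number and size of gt boxes, etc.). To handle this, MMCV introduces a new DataContainer type that helps collect and distribute data of different sizes. See here for more details.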
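The sketch below shows why different-sized data needs extra machinery. It pads a batch of images to the largest height and width before stacking them. The per-image gt boxes stay a plain list, because each image has a different number of boxes. This is a simplified illustration under that assumption, not MMCV's DataContainer or collate code, and pad_and_stack is a hypothetical name.

```python
import numpy as np


def pad_and_stack(images, pad_val=0):
    """Pad a list of HxWxC arrays to a common size and stack them.

    Hypothetical helper illustrating what a size-aware collate has to do;
    it is not MMCV's DataContainer/collate implementation.
    """
    max_h = max(img.shape[0] for img in images)
    max_w = max(img.shape[1] for img in images)
    channels = images[0].shape[2]
    batch = np.full((len(images), max_h, max_w, channels), pad_val,
                    dtype=images[0].dtype)
    for i, img in enumerate(images):
        h, w = img.shape[:2]
        # Copy each image into the top-left corner; the rest stays padded.
        batch[i, :h, :w] = img
    return batch


# Two images of different sizes, with a different number of gt boxes each.
imgs = [np.ones((480, 640, 3), np.float32), np.ones((600, 500, 3), np.float32)]
gt_bboxes = [np.zeros((2, 4), np.float32), np.zeros((5, 4), np.float32)]

batch = pad_and_stack(imgs)
print(batch.shape)  # (2, 600, 640, 3)
# Box arrays of shape (2, 4) and (5, 4) cannot be stacked, so they stay a list.
print([b.shape for b in gt_bboxes])
```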

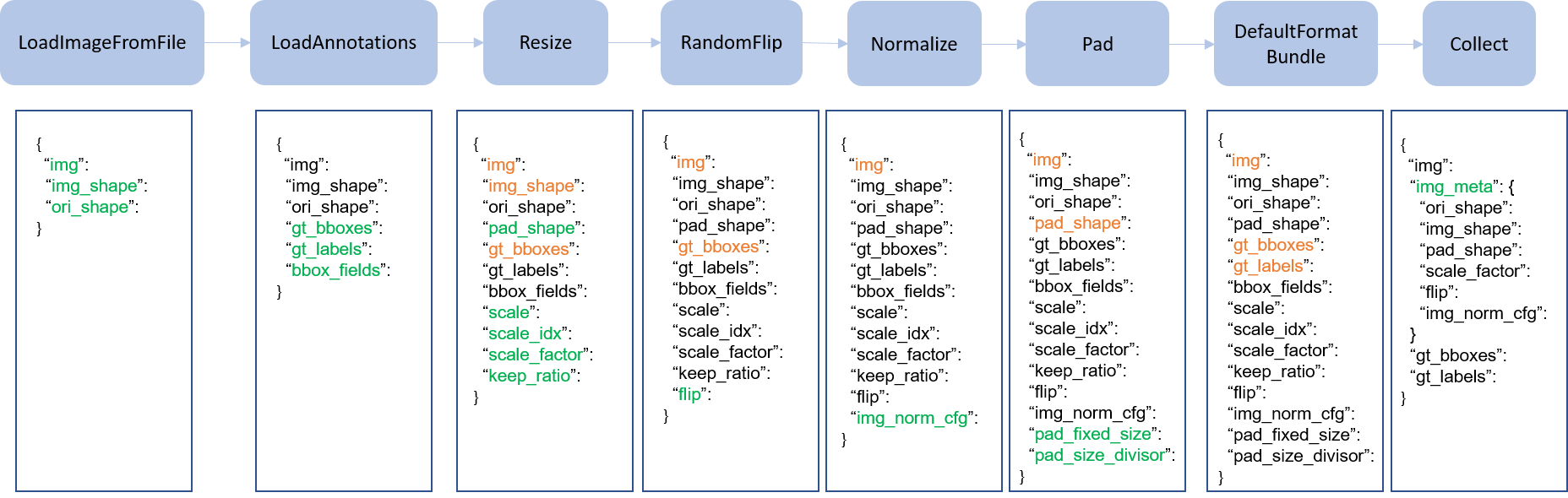

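The data preparation pipeline is decoupled from the dataset. The dataset usually defines how to process the annotations. The data pipeline defines all the steps needed to prepare a data dict. A pipeline consists of a sequence of operations. Each operation takes a dict as input and outputs a dict for the next transform.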

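The figure above shows a classical pipeline. The blue blocks are pipeline operations. As the pipeline runs, each operator can add new keys to the result dict (marked in green) or update existing keys (marked in orange).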
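The dict-in, dict-out contract can be sketched in a few lines of plain Python. The Compose class below chains transforms and stops early if a transform returns None, which is the usual way to say "skip this sample". MMDetection's own Compose follows the same idea. The two toy transforms, load and double_width, are hypothetical stand-ins: one adds keys and the other updates them.

```python
class Compose:
    """Chain dict-in/dict-out transforms (simplified sketch)."""

    def __init__(self, transforms):
        self.transforms = transforms

    def __call__(self, results):
        for t in self.transforms:
            results = t(results)
            # A transform may return None to signal "skip this sample".
            if results is None:
                return None
        return results


def load(results):
    # Adds new keys (green in the figure).
    results['img'] = [[0] * 4 for _ in range(3)]
    results['img_shape'] = (3, 4)
    return results


def double_width(results):
    # Updates existing keys (orange in the figure).
    results['img'] = [row * 2 for row in results['img']]
    h, w = results['img_shape']
    results['img_shape'] = (h, w * 2)
    return results


pipeline = Compose([load, double_width])
out = pipeline({'filename': 'demo.jpg'})
print(sorted(out))       # ['filename', 'img', 'img_shape']
print(out['img_shape'])  # (3, 8)
```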

1. Pipeline example for Faster R-CNN

img_norm_cfg = dict(
    mean=[123.675, 116.28, 103.53], std=[58.395, 57.12, 57.375], to_rgb=True)
train_pipeline = [
    dict(type='LoadImageFromFile'),
    dict(type='LoadAnnotations', with_bbox=True),
    dict(type='Resize', img_scale=(1333, 800), keep_ratio=True),
    dict(type='RandomFlip', flip_ratio=0.5),
    dict(type='Normalize', **img_norm_cfg),
    dict(type='Pad', size_divisor=32),
    dict(type='DefaultFormatBundle'),
    dict(type='Collect', keys=['img', 'gt_bboxes', 'gt_labels']),
]
test_pipeline = [
    dict(type='LoadImageFromFile'),
    dict(
        type='MultiScaleFlipAug',
        img_scale=(1333, 800),
        flip=False,
        transforms=[
            dict(type='Resize', keep_ratio=True),
            dict(type='RandomFlip'),
            dict(type='Normalize', **img_norm_cfg),
            dict(type='Pad', size_divisor=32),
            dict(type='ImageToTensor', keys=['img']),
            dict(type='Collect', keys=['img']),
        ])
]
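Here is what Resize with img_scale=(1333, 800) and keep_ratio=True, followed by Pad with size_divisor=32, does to a 640x480 image. This is a minimal sketch that assumes the keep-ratio rule used by mmcv.imrescale: the long edge is bounded by the larger number in img_scale and the short edge by the smaller one. The helper names are hypothetical, and mmcv's internal rounding may differ slightly.

```python
import math


def rescale_size(w, h, img_scale):
    """Target (w, h) and scale factor for a keep-ratio resize (sketch)."""
    max_long, max_short = max(img_scale), min(img_scale)
    # The tighter of the two constraints decides the scale factor.
    scale = min(max_long / max(h, w), max_short / min(h, w))
    return int(w * scale + 0.5), int(h * scale + 0.5), scale


def pad_to_multiple(w, h, divisor):
    """Smallest (w, h) divisible by `divisor` that covers the image."""
    return (math.ceil(w / divisor) * divisor,
            math.ceil(h / divisor) * divisor)


new_w, new_h, scale = rescale_size(640, 480, (1333, 800))
print(new_w, new_h, round(scale, 4))      # 1067 800 1.6667
print(pad_to_multiple(new_w, new_h, 32))  # (1088, 800)
```

In this example the short edge is the binding constraint, because 800 / 480 is smaller than 1333 / 640. That is why the output is 800 pixels high, while the width stops short of 1333.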

For each operation, we list the related dict fields that are added, updated, or removed.

Pre-processing

Resize

add: scale, scale_idx, pad_shape, scale_factor, keep_ratio

update: img, img_shape, *bbox_fields, *mask_fields, *seg_fields

RandomFlip

add: flip

update: img, *bbox_fields, *mask_fields, *seg_fields

Pad

add: pad_fixed_size, pad_size_divisor

update: img, pad_shape, *mask_fields, *seg_fields

RandomCrop

update: img, pad_shape, gt_bboxes, gt_labels, gt_masks, *bbox_fields

Normalize

add: img_norm_cfg

update: img

SegRescale

update: gt_semantic_seg

PhotoMetricDistortion

update: img

Expand

update: img, gt_bboxes

MinIoURandomCrop

update: img, gt_bboxes, gt_labels

Corrupt

update: img
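Take the "update: *bbox_fields" entries above as an example. After a horizontal flip, every box has to be mirrored so it stays aligned with the flipped image, and x1 and x2 swap roles. The sketch below uses the same "- 1" pixel convention as RandomFlip.bbox_flip in the transforms.py listed later in this article. The function name bbox_flip_horizontal is hypothetical.

```python
import numpy as np


def bbox_flip_horizontal(bboxes, img_width):
    """Mirror (x1, y1, x2, y2) boxes across the vertical image axis."""
    flipped = bboxes.copy()
    # New x1 comes from the old x2 and vice versa; y coordinates are unchanged.
    flipped[..., 0::4] = img_width - bboxes[..., 2::4] - 1
    flipped[..., 2::4] = img_width - bboxes[..., 0::4] - 1
    return flipped


boxes = np.array([[10., 20., 50., 60.]])
print(bbox_flip_horizontal(boxes, img_width=100).tolist())
# [[49.0, 20.0, 89.0, 60.0]]
```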

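Formatting

ToTensor

update: specified by keys.

ImageToTensor

update: specified by keys.

Transpose

update: specified by keys.

ToDataContainer

update: specified by fields.

DefaultFormatBundle

update: img, proposals, gt_bboxes, gt_bboxes_ignore, gt_labels, gt_masks, gt_semantic_seg

Collect

add: img_meta (the keys of img_meta are specified by meta_keys)

remove: all other keys except for those specified by keys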
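Collect is the last step before the model. It keeps only the keys the model's forward method needs and folds the requested metadata into img_meta. Everything else, such as bbox_fields, is dropped. A simplified sketch follows. In MMDetection, Collect additionally wraps img_meta in a DataContainer, which is omitted here, and the function name collect is hypothetical.

```python
def collect(results, keys, meta_keys):
    """Keep only `keys` plus an 'img_meta' dict built from `meta_keys`."""
    data = {'img_meta': {k: results[k] for k in meta_keys}}
    for key in keys:
        data[key] = results[key]
    return data


results = {
    'filename': 'demo.jpg', 'ori_shape': (480, 640, 3),
    'img_shape': (800, 1067, 3), 'img': 'tensor', 'gt_bboxes': 'boxes',
    'gt_labels': 'labels', 'bbox_fields': ['gt_bboxes'],
}
out = collect(results, keys=['img', 'gt_bboxes', 'gt_labels'],
              meta_keys=('filename', 'ori_shape', 'img_shape'))
print(sorted(out))  # ['gt_bboxes', 'gt_labels', 'img', 'img_meta']
```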

Test-time augmentation

MultiScaleFlipAug
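MultiScaleFlipAug has no field list of its own because it wraps other transforms. It runs them once per augmented view and presets 'scale' and 'flip' in the results dict for each view. That is why Resize and RandomFlip inside its transforms list take no img_scale or flip_ratio: both check `if 'scale' not in results` and `if 'flip' not in results` (see transforms.py later in this article). The loop below is a hypothetical sketch of that idea with a fake inner transform, not MMDetection's implementation. With flip=False, as in the test_pipeline above, each scale yields only one view.

```python
from itertools import product


def multi_scale_flip_aug(results, img_scales, flip, transform):
    """Run `transform` once per (scale, flip) pair and gather the outputs."""
    flip_options = [False, True] if flip else [False]
    aug_data = []
    for scale, do_flip in product(img_scales, flip_options):
        # Copy the input and preset the keys that Resize/RandomFlip look for.
        view = dict(results, scale=scale, flip=do_flip)
        aug_data.append(transform(view))
    # Each output key becomes a list with one entry per augmented view.
    return {key: [d[key] for d in aug_data] for key in aug_data[0]}


def fake_transform(view):
    # Stand-in for the Resize -> RandomFlip -> ... -> Collect chain.
    return {'img': 'img@{}x{}'.format(*view['scale']), 'flip': view['flip']}


out = multi_scale_flip_aug({'filename': 'demo.jpg'},
                           img_scales=[(1333, 800), (1000, 600)],
                           flip=True, transform=fake_transform)
print(out['flip'])  # [False, True, False, True]
```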

Data loading

LoadImageFromFile

add: img, img_shape, ori_shape

LoadAnnotations

add: gt_bboxes, gt_bboxes_ignore, gt_labels, gt_masks, gt_semantic_seg, bbox_fields, mask_fields

LoadProposals

add: proposals
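The bbox_fields and mask_fields keys added by LoadAnnotations are what the "*bbox_fields" and "*mask_fields" entries in the pre-processing list refer to. Later transforms loop over `results.get('bbox_fields', [])` instead of hard-coding key names. The sketch below imitates this with simplified in-memory stand-ins: no file is read, and load_image, load_annotations and scale_boxes are hypothetical names.

```python
import numpy as np


def load_image(results):
    # Stand-in for LoadImageFromFile: a blank image instead of a file read.
    img = np.zeros((480, 640, 3), dtype=np.uint8)
    results['img'] = img
    results['img_shape'] = img.shape
    results['ori_shape'] = img.shape
    return results


def load_annotations(results):
    # Stand-in for LoadAnnotations(with_bbox=True).
    ann = results['ann_info']
    results['gt_bboxes'] = ann['bboxes']
    results['gt_labels'] = ann['labels']
    # Register the box key so that later transforms know what to update.
    results.setdefault('bbox_fields', []).append('gt_bboxes')
    return results


def scale_boxes(results, factor):
    # Same looping pattern as Resize._resize_bboxes (without clipping).
    for key in results.get('bbox_fields', []):
        results[key] = results[key] * factor
    return results


results = {'ann_info': {'bboxes': np.array([[10., 20., 50., 60.]]),
                        'labels': np.array([1])}}
results = scale_boxes(load_annotations(load_image(results)), 2.0)
print(results['bbox_fields'])         # ['gt_bboxes']
print(results['gt_bboxes'].tolist())  # [[20.0, 40.0, 100.0, 120.0]]
```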

Extending and using custom pipelines

1. Write a new pipeline in a file, e.g. my_pipeline.py. It takes a dict as input and returns a dict.

import random

from mmdet.datasets import PIPELINES


@PIPELINES.register_module()
class MyTransform:
    """Add your transform

    Args:
        p (float): Probability of shifts. Default 0.5.
    """

    def __init__(self, p=0.5):
        self.p = p

    def __call__(self, results):
        if random.random() > self.p:
            results['dummy'] = True
        return results

2. Import and use the pipeline in your config file. Make sure the import path is relative to where your train script is located.

custom_imports = dict(imports=['path.to.my_pipeline'], allow_failed_imports=False)
img_norm_cfg = dict(
    mean=[123.675, 116.28, 103.53], std=[58.395, 57.12, 57.375], to_rgb=True)
train_pipeline = [
    dict(type='LoadImageFromFile'),
    dict(type='LoadAnnotations', with_bbox=True),
    dict(type='Resize', img_scale=(1333, 800), keep_ratio=True),
    dict(type='RandomFlip', flip_ratio=0.5),
    dict(type='Normalize', **img_norm_cfg),
    dict(type='Pad', size_divisor=32),
    dict(type='MyTransform', p=0.2),
    dict(type='DefaultFormatBundle'),
    dict(type='Collect', keys=['img', 'gt_bboxes', 'gt_labels']),
]
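Importing my_pipeline.py runs the @PIPELINES.register_module() decorator, which records the class under its name. The config can then refer to it by the string 'MyTransform' alone. The following is a hypothetical, stripped-down registry, not MMCV's Registry class. It shows how a config dict is turned into a transform object.

```python
class Registry:
    """Minimal name -> class registry (hypothetical, for illustration)."""

    def __init__(self, name):
        self.name = name
        self._modules = {}

    def register_module(self):
        def _register(cls):
            self._modules[cls.__name__] = cls
            return cls
        return _register

    def build(self, cfg):
        cfg = dict(cfg)  # do not mutate the caller's config
        cls = self._modules[cfg.pop('type')]
        # Remaining keys become constructor arguments.
        return cls(**cfg)


PIPELINES = Registry('pipeline')


@PIPELINES.register_module()
class MyTransform:
    def __init__(self, p=0.5):
        self.p = p

    def __call__(self, results):
        return results


transform = PIPELINES.build(dict(type='MyTransform', p=0.2))
print(type(transform).__name__, transform.p)  # MyTransform 0.2
```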

3. Visualize the output of your augmentation pipeline

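To visualize the output of your augmentation pipeline, use tools/misc/browse_dataset.py. It lets you browse a detection dataset visually (both images and bounding box annotations) or save the images to a specified directory. For more details, refer to useful_tools.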

Why do images and bboxes need data augmentation?

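A: Augmentation effectively enlarges the training data, so the model can learn as many of the invariances in the images as possible (for example to flips, scale changes, or lighting). The more invariances it learns, the better it generalizes.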

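But why are images fed into a CNN not translation-invariant, and how can this be addressed? The following link gives a dedicated analysis:

Why are images fed into a CNN not translation-invariant? How can this be addressed?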

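MMDetection data augmentation

In MMDetection, data augmentation consists of two parts (with source-code analysis):

Newer versions of MMDetection add code that augments images with the Albu (albumentations) library, at mmdetection/configs/albu_example/mask_rcnn_r50_fpn_1x.py. That example targets instance segmentation. The version adapted for object detection is as follows.

1. Use the albumentations library for augmentation

Object detection tricks: augmentation with the Albu library

Key code: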

albu_train_transforms = [
    # dict(
    #     type='HorizontalFlip',
    #     p=0.5),
    # dict(
    #     type='VerticalFlip',
    #     p=0.5),
    dict(
        type='ShiftScaleRotate',
        shift_limit=0.0625,
        scale_limit=0.0,
        rotate_limit=180,
        interpolation=1,
        p=0.5),
    # dict(
    #     type='RandomBrightnessContrast',
    #     brightness_limit=[0.1, 0.3],
    #     contrast_limit=[0.1, 0.3],
    #     p=0.2),
    # dict(
    #     type='OneOf',
    #     transforms=[
    #         dict(
    #             type='RGBShift',
    #             r_shift_limit=10,
    #             g_shift_limit=10,
    #             b_shift_limit=10,
    #             p=1.0),
    #         dict(
    #             type='HueSaturationValue',
    #             hue_shift_limit=20,
    #             sat_shift_limit=30,
    #             val_shift_limit=20,
    #             p=1.0)
    #     ],
    #     p=0.1),
    # # dict(type='JpegCompression', quality_lower=85, quality_upper=95, p=0.2),
    #
    # dict(type='ChannelShuffle', p=0.1),
    # dict(
    #     type='OneOf',
    #     transforms=[
    #         dict(type='Blur', blur_limit=3, p=1.0),
    #         dict(type='MedianBlur', blur_limit=3, p=1.0)
    #     ],
    #     p=0.1),
]
train_pipeline = [
    dict(type='LoadImageFromFile'),
    dict(type='LoadAnnotations', with_bbox=True),
    dict(type='Resize', img_scale=[(4096, 800), (4096, 1200)], keep_ratio=True),
    dict(type='RandomFlip', flip_ratio=0.5),
    dict(type='Pad', size_divisor=32),
    # Albu arguments:
    #   transforms (list[dict]): a list of albu transformations
    #   bbox_params (dict): bbox_params for the albumentations `Compose`
    #   keymap (dict): contains {'input key': 'albumentation-style key'}
    #   skip_img_without_anno (bool): whether to skip the image if no
    #       annotation is left after augmentation
    dict(
        type='Albu',
        transforms=albu_train_transforms,
        bbox_params=dict(
            type='BboxParams',
            format='pascal_voc',
            label_fields=['gt_labels'],
            min_visibility=0.0,
            filter_lost_elements=True),
        keymap={
            'img': 'image',
            'gt_bboxes': 'bboxes'
        },
        update_pad_shape=False,
        skip_img_without_anno=True),
    dict(type='Normalize', **img_norm_cfg),
    dict(type='DefaultFormatBundle'),
    dict(
        type='Collect',
        keys=['img', 'gt_bboxes', 'gt_labels'],
        meta_keys=('filename', 'ori_shape', 'img_shape', 'img_norm_cfg',
                   'pad_shape', 'scale_factor')
    )
]
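The keymap argument bridges two naming conventions. MMDetection stores the image and boxes under 'img' and 'gt_bboxes', whereas albumentations expects 'image' and 'bboxes'. Below is a minimal sketch of that renaming step and its inverse. remap_keys is a hypothetical helper, and the real Albu wrapper does more around this step, such as handling the label_fields given in bbox_params.

```python
def remap_keys(d, keymap):
    """Return a copy of `d` with keys renamed according to `keymap`."""
    return {keymap.get(k, k): v for k, v in d.items()}


keymap = {'img': 'image', 'gt_bboxes': 'bboxes'}
inverse = {v: k for k, v in keymap.items()}

results = {'img': 'array', 'gt_bboxes': 'boxes', 'gt_labels': [1, 2]}
albu_input = remap_keys(results, keymap)
print(sorted(albu_input))  # ['bboxes', 'gt_labels', 'image']
# ...the albumentations transforms would run on albu_input here...
restored = remap_keys(albu_input, inverse)
print(restored == results)  # True
```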

Full code:

# model settings
model = dict(
    type='CascadeRCNN',
    num_stages=3,
    pretrained='torchvision://resnet50',
    backbone=dict(
        type='ResNet',
        depth=50,
        num_stages=4,
        out_indices=(0, 1, 2, 3),
        frozen_stages=1,
        style='pytorch'),
    neck=dict(
        type='FPN',
        in_channels=[256, 512, 1024, 2048],
        out_channels=256,
        num_outs=5),
    rpn_head=dict(
        type='RPNHead',
        in_channels=256,
        feat_channels=256,
        anchor_scales=[8],
        anchor_ratios=[0.5, 1.0, 2.0],
        anchor_strides=[4, 8, 16, 32, 64],
        target_means=[.0, .0, .0, .0],
        target_stds=[1.0, 1.0, 1.0, 1.0],
        loss_cls=dict(
            type='CrossEntropyLoss', use_sigmoid=True, loss_weight=1.0),  # CrossEntropyLoss/FocalLoss
        loss_bbox=dict(type='SmoothL1Loss', beta=1.0 / 9.0, loss_weight=1.0)),
    bbox_roi_extractor=dict(
        type='SingleRoIExtractor',
        roi_layer=dict(type='RoIAlign', out_size=7, sample_num=2),
        out_channels=256,
        featmap_strides=[4, 8, 16, 32]),
    bbox_head=[
        dict(
            type='SharedFCBBoxHead',
            num_fcs=2,
            in_channels=256,
            fc_out_channels=1024,
            roi_feat_size=7,
            num_classes=8,
            target_means=[0., 0., 0., 0.],
            target_stds=[0.1, 0.1, 0.2, 0.2],
            reg_class_agnostic=True,
            loss_cls=dict(
                type='CrossEntropyLoss', use_sigmoid=False, loss_weight=1.0),
            loss_bbox=dict(type='SmoothL1Loss', beta=1.0, loss_weight=1.0)),
        dict(
            type='SharedFCBBoxHead',
            num_fcs=2,
            in_channels=256,
            fc_out_channels=1024,
            roi_feat_size=7,
            num_classes=8,
            target_means=[0., 0., 0., 0.],
            target_stds=[0.05, 0.05, 0.1, 0.1],
            reg_class_agnostic=True,
            loss_cls=dict(
                type='CrossEntropyLoss', use_sigmoid=False, loss_weight=1.0),
            loss_bbox=dict(type='SmoothL1Loss', beta=1.0, loss_weight=1.0)),
        dict(
            type='SharedFCBBoxHead',
            num_fcs=2,
            in_channels=256,
            fc_out_channels=1024,
            roi_feat_size=7,
            num_classes=8,
            target_means=[0., 0., 0., 0.],
            target_stds=[0.033, 0.033, 0.067, 0.067],
            reg_class_agnostic=True,
            loss_cls=dict(
                type='CrossEntropyLoss', use_sigmoid=False, loss_weight=1.0),
            loss_bbox=dict(type='SmoothL1Loss', beta=1.0, loss_weight=1.0))
    ])

# model training and testing settings
train_cfg = dict(
    rpn=dict(
        assigner=dict(
            type='MaxIoUAssigner',
            pos_iou_thr=0.7,
            neg_iou_thr=0.3,
            min_pos_iou=0.3,
            ignore_iof_thr=-1),
        sampler=dict(
            type='RandomSampler',
            num=256,
            pos_fraction=0.5,
            neg_pos_ub=-1,
            add_gt_as_proposals=False),
        allowed_border=0,
        pos_weight=-1,
        debug=False),
    rpn_proposal=dict(
        nms_across_levels=False,
        nms_pre=2000,
        nms_post=2000,
        max_num=2000,
        nms_thr=0.7,
        min_bbox_size=0),
    rcnn=[
        dict(
            assigner=dict(
                type='MaxIoUAssigner',
                pos_iou_thr=0.5,
                neg_iou_thr=0.5,
                min_pos_iou=0.5,
                ignore_iof_thr=-1),
            sampler=dict(
                type='RandomSampler',
                num=512,
                pos_fraction=0.25,
                neg_pos_ub=-1,
                add_gt_as_proposals=True),
            pos_weight=-1,
            debug=False),
        dict(
            assigner=dict(
                type='MaxIoUAssigner',
                pos_iou_thr=0.6,
                neg_iou_thr=0.6,
                min_pos_iou=0.6,
                ignore_iof_thr=-1),
            sampler=dict(
                type='RandomSampler',
                num=512,
                pos_fraction=0.25,
                neg_pos_ub=-1,
                add_gt_as_proposals=True),
            pos_weight=-1,
            debug=False),
        dict(
            assigner=dict(
                type='MaxIoUAssigner',
                pos_iou_thr=0.7,
                neg_iou_thr=0.7,
                min_pos_iou=0.7,
                ignore_iof_thr=-1),
            sampler=dict(
                type='RandomSampler',
                num=512,
                pos_fraction=0.25,
                neg_pos_ub=-1,
                add_gt_as_proposals=True),
            pos_weight=-1,
            debug=False)
    ],
    stage_loss_weights=[1, 0.5, 0.25])
test_cfg = dict(
    rpn=dict(
        nms_across_levels=False,
        nms_pre=1000,
        nms_post=1000,
        max_num=1000,
        nms_thr=0.7,
        min_bbox_size=0),
    rcnn=dict(
        score_thr=0.01,
        nms=dict(type='nms', iou_thr=0.5), max_per_img=100),
    keep_all_stages=False)

# dataset
dataset_type = 'CocoDataset'
data_root = './data/coco/'
img_norm_cfg = dict(
    mean=[123.675, 116.28, 103.53], std=[58.395, 57.12, 57.375], to_rgb=True)
albu_train_transforms = [
    # dict(
    #     type='HorizontalFlip',
    #     p=0.5),
    # dict(
    #     type='VerticalFlip',
    #     p=0.5),
    dict(
        type='ShiftScaleRotate',
        shift_limit=0.0625,
        scale_limit=0.0,
        rotate_limit=180,
        interpolation=1,
        p=0.5),
    # dict(
    #     type='RandomBrightnessContrast',
    #     brightness_limit=[0.1, 0.3],
    #     contrast_limit=[0.1, 0.3],
    #     p=0.2),
    # dict(
    #     type='OneOf',
    #     transforms=[
    #         dict(
    #             type='RGBShift',
    #             r_shift_limit=10,
    #             g_shift_limit=10,
    #             b_shift_limit=10,
    #             p=1.0),
    #         dict(
    #             type='HueSaturationValue',
    #             hue_shift_limit=20,
    #             sat_shift_limit=30,
    #             val_shift_limit=20,
    #             p=1.0)
    #     ],
    #     p=0.1),
    # # dict(type='JpegCompression', quality_lower=85, quality_upper=95, p=0.2),
    #
    # dict(type='ChannelShuffle', p=0.1),
    # dict(
    #     type='OneOf',
    #     transforms=[
    #         dict(type='Blur', blur_limit=3, p=1.0),
    #         dict(type='MedianBlur', blur_limit=3, p=1.0)
    #     ],
    #     p=0.1),
]
train_pipeline = [
    dict(type='LoadImageFromFile'),
    dict(type='LoadAnnotations', with_bbox=True),
    dict(type='Resize', img_scale=[(4096, 800), (4096, 1200)], keep_ratio=True),
    dict(type='RandomFlip', flip_ratio=0.5),
    dict(type='Pad', size_divisor=32),
    dict(
        type='Albu',
        transforms=albu_train_transforms,
        bbox_params=dict(
            type='BboxParams',
            format='pascal_voc',
            label_fields=['gt_labels'],
            min_visibility=0.0,
            filter_lost_elements=True),
        keymap={
            'img': 'image',
            'gt_bboxes': 'bboxes'
        },
        update_pad_shape=False,
        skip_img_without_anno=True),
    dict(type='Normalize', **img_norm_cfg),
    dict(type='DefaultFormatBundle'),
    dict(
        type='Collect',
        keys=['img', 'gt_bboxes', 'gt_labels'],
        meta_keys=('filename', 'ori_shape', 'img_shape', 'img_norm_cfg',
                   'pad_shape', 'scale_factor')
    )
]
test_pipeline = [
    dict(type='LoadImageFromFile'),
    dict(
        type='MultiScaleFlipAug',
        img_scale=(4096, 1000),
        flip=False,
        transforms=[
            dict(type='Resize', keep_ratio=True),
            dict(type='RandomFlip'),
            dict(type='Normalize', **img_norm_cfg),
            dict(type='Pad', size_divisor=32),
            dict(type='ImageToTensor', keys=['img']),
            dict(type='Collect', keys=['img']),
        ])
]
data = dict(
    imgs_per_gpu=2,
    workers_per_gpu=3,
    train=dict(
        type=dataset_type,
        ann_file=data_root + 'first_pinggai/bottle/annotations/instances_train2017.json',
        img_prefix=data_root + 'first_pinggai/bottle/images/train2017/',
        pipeline=train_pipeline),
    val=dict(
        type=dataset_type,
        ann_file=data_root + 'first_pinggai/bottle/annotations/instances_val2017.json',
        img_prefix=data_root + 'first_pinggai/bottle/images/val2017/',
        pipeline=test_pipeline),
    test=dict(
        type=dataset_type,
        ann_file=data_root + 'first_pinggai/bottle/annotations/instances_val2017.json',
        img_prefix=data_root + 'first_pinggai/bottle/images/val2017/',
        pipeline=test_pipeline)
)

# optimizer
optimizer = dict(type='SGD', lr=0.005, momentum=0.9, weight_decay=0.0001)
optimizer_config = dict(grad_clip=dict(max_norm=35, norm_type=2))
# learning policy
lr_config = dict(
    policy='step',
    warmup='linear',
    warmup_iters=500,
    warmup_ratio=1.0 / 3,
    step=[8, 11])
checkpoint_config = dict(interval=1)
# yapf:disable
log_config = dict(
    interval=50,
    hooks=[
        dict(type='TextLoggerHook'),
        # dict(type='TensorboardLoggerHook')
    ])
# yapf:enable
# runtime settings
total_epochs = 50
dist_params = dict(backend='nccl')
log_level = 'INFO'
work_dir = './work_dirs/HRo_cascade50_pinggai_1400'
load_from = './checkpoints/cascade_rcnn_r50_fpn_1x_20190501-3b6211ab.pth'
resume_from = None
workflow = [('train', 1)]
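The sketch below traces the learning rate this config produces. It assumes mmcv's step policy with its default gamma of 0.1 and its linear warmup rule, with epochs counted from 0. Note that with step=[8, 11] and total_epochs = 50, the last drop happens at the 12th epoch. All the remaining 39 epochs then run at 0.005 × 0.01. If that is not intended, step would need to be scaled along with total_epochs. step_lr and warmup_lr are hypothetical helper names.

```python
def step_lr(epoch, base_lr=0.005, steps=(8, 11), gamma=0.1):
    """LR for a 0-indexed epoch under a step schedule (sketch)."""
    # One decay by `gamma` for every step boundary already reached.
    exp = sum(1 for s in steps if epoch >= s)
    return base_lr * gamma ** exp


def warmup_lr(cur_iter, regular_lr, warmup_iters=500, warmup_ratio=1.0 / 3):
    """Linear warmup from warmup_ratio * lr up to lr over warmup_iters."""
    if cur_iter >= warmup_iters:
        return regular_lr
    k = (1 - cur_iter / warmup_iters) * (1 - warmup_ratio)
    return regular_lr * (1 - k)


print(round(warmup_lr(0, 0.005), 6))    # 0.001667
print(round(warmup_lr(250, 0.005), 6))  # 0.003333
for epoch in (0, 7, 8, 10, 11, 49):
    print(epoch, step_lr(epoch))
```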

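Before using this part of the code, read the analysis in the "Note" section at the beginning, to avoid errors when running the modified code.

Two parts of the code need to be modified:

mmdet/datasets/pipelines/transforms.py. The modified source is as follows: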

transforms.py

import inspect

import albumentations
import mmcv
import numpy as np
from albumentations import Compose
from imagecorruptions import corrupt
from numpy import random

from mmdet.core.evaluation.bbox_overlaps import bbox_overlaps
from ..registry import PIPELINES

@PIPELINES.register_module
class Resize(object):
    """Resize images & bbox & mask.

    This transform resizes the input image to some scale. Bboxes and masks are
    then resized with the same scale factor. If the input dict contains the key
    "scale", then the scale in the input dict is used, otherwise the specified
    scale in the init method is used.

    `img_scale` can either be a tuple (single-scale) or a list of tuple
    (multi-scale). There are 3 multiscale modes:

    - `ratio_range` is not None: randomly sample a ratio from the ratio range
      and multiply it with the image scale.
    - `ratio_range` is None and `multiscale_mode` == "range": randomly sample a
      scale from a range.
    - `ratio_range` is None and `multiscale_mode` == "value": randomly sample a
      scale from multiple scales.

    Args:
        img_scale (tuple or list[tuple]): Images scales for resizing.
        multiscale_mode (str): Either "range" or "value".
        ratio_range (tuple[float]): (min_ratio, max_ratio)
        keep_ratio (bool): Whether to keep the aspect ratio when resizing the
            image.
    """

    def __init__(self,
                 img_scale=None,
                 multiscale_mode='range',
                 ratio_range=None,
                 keep_ratio=True):
        if img_scale is None:
            self.img_scale = None
        else:
            if isinstance(img_scale, list):
                self.img_scale = img_scale
            else:
                self.img_scale = [img_scale]
            assert mmcv.is_list_of(self.img_scale, tuple)

        if ratio_range is not None:
            # mode 1: given a scale and a range of image ratio
            assert len(self.img_scale) == 1
        else:
            # mode 2: given multiple scales or a range of scales
            assert multiscale_mode in ['value', 'range']

        self.multiscale_mode = multiscale_mode
        self.ratio_range = ratio_range
        self.keep_ratio = keep_ratio

    @staticmethod
    def random_select(img_scales):
        assert mmcv.is_list_of(img_scales, tuple)
        scale_idx = np.random.randint(len(img_scales))
        img_scale = img_scales[scale_idx]
        return img_scale, scale_idx

    @staticmethod
    def random_sample(img_scales):
        assert mmcv.is_list_of(img_scales, tuple) and len(img_scales) == 2
        img_scale_long = [max(s) for s in img_scales]
        img_scale_short = [min(s) for s in img_scales]
        long_edge = np.random.randint(
            min(img_scale_long),
            max(img_scale_long) + 1)
        short_edge = np.random.randint(
            min(img_scale_short),
            max(img_scale_short) + 1)
        img_scale = (long_edge, short_edge)
        return img_scale, None

    @staticmethod
    def random_sample_ratio(img_scale, ratio_range):
        assert isinstance(img_scale, tuple) and len(img_scale) == 2
        min_ratio, max_ratio = ratio_range
        assert min_ratio <= max_ratio
        ratio = np.random.random_sample() * (max_ratio - min_ratio) + min_ratio
        scale = int(img_scale[0] * ratio), int(img_scale[1] * ratio)
        return scale, None

    def _random_scale(self, results):
        if self.ratio_range is not None:
            scale, scale_idx = self.random_sample_ratio(
                self.img_scale[0], self.ratio_range)
        elif len(self.img_scale) == 1:
            scale, scale_idx = self.img_scale[0], 0
        elif self.multiscale_mode == 'range':
            scale, scale_idx = self.random_sample(self.img_scale)
        elif self.multiscale_mode == 'value':
            scale, scale_idx = self.random_select(self.img_scale)
        else:
            raise NotImplementedError

        results['scale'] = scale
        results['scale_idx'] = scale_idx

    def _resize_img(self, results):
        if results['concat']:
            # Author's modification: 'img' holds two images stacked along the
            # channel axis (the first 3 channels and the rest); each part is
            # resized separately and then concatenated again.
            img_raw, img_temp = results['img'][:, :, :3], results['img'][:, :, 3:]
            if self.keep_ratio:
                img_raw, scale_factor = mmcv.imrescale(
                    img_raw, results['scale'], return_scale=True)
                img_temp, scale_factor = mmcv.imrescale(
                    img_temp, results['scale'], return_scale=True)
            else:
                img_raw, w_scale, h_scale = mmcv.imresize(
                    img_raw, results['scale'], return_scale=True)
                img_temp, w_scale, h_scale = mmcv.imresize(
                    img_temp, results['scale'], return_scale=True)
                scale_factor = np.array([w_scale, h_scale, w_scale, h_scale],
                                        dtype=np.float32)
            results['img'] = np.concatenate([img_raw, img_temp], axis=2)
            results['img_shape'] = img_raw.shape
            results['pad_shape'] = img_raw.shape  # in case that there is no padding
            results['scale_factor'] = scale_factor
            results['keep_ratio'] = self.keep_ratio
        else:
            if self.keep_ratio:
                img, scale_factor = mmcv.imrescale(
                    results['img'], results['scale'], return_scale=True)
            else:
                img, w_scale, h_scale = mmcv.imresize(
                    results['img'], results['scale'], return_scale=True)
                scale_factor = np.array([w_scale, h_scale, w_scale, h_scale],
                                        dtype=np.float32)
            results['img'] = img
            results['img_shape'] = img.shape
            results['pad_shape'] = img.shape  # in case that there is no padding
            results['scale_factor'] = scale_factor
            results['keep_ratio'] = self.keep_ratio

    def _resize_bboxes(self, results):
        img_shape = results['img_shape']
        for key in results.get('bbox_fields', []):
            bboxes = results[key] * results['scale_factor']
            bboxes[:, 0::2] = np.clip(bboxes[:, 0::2], 0, img_shape[1] - 1)
            bboxes[:, 1::2] = np.clip(bboxes[:, 1::2], 0, img_shape[0] - 1)
            results[key] = bboxes

    def _resize_masks(self, results):
        for key in results.get('mask_fields', []):
            if results[key] is None:
                continue
            if self.keep_ratio:
                masks = [
                    mmcv.imrescale(
                        mask, results['scale_factor'], interpolation='nearest')
                    for mask in results[key]
                ]
            else:
                mask_size = (results['img_shape'][1], results['img_shape'][0])
                masks = [
                    mmcv.imresize(mask, mask_size, interpolation='nearest')
                    for mask in results[key]
                ]
            results[key] = masks

    def _resize_seg(self, results):
        for key in results.get('seg_fields', []):
            if self.keep_ratio:
                gt_seg = mmcv.imrescale(
                    results[key], results['scale'], interpolation='nearest')
            else:
                gt_seg = mmcv.imresize(
                    results[key], results['scale'], interpolation='nearest')
            results[key] = gt_seg

    def __call__(self, results):
        if 'scale' not in results:
            self._random_scale(results)
        self._resize_img(results)
        self._resize_bboxes(results)
        self._resize_masks(results)
        self._resize_seg(results)
        return results

    def __repr__(self):
        repr_str = self.__class__.__name__
        repr_str += ('(img_scale={}, multiscale_mode={}, ratio_range={}, '
                     'keep_ratio={})').format(self.img_scale,
                                              self.multiscale_mode,
                                              self.ratio_range,
                                              self.keep_ratio)
        return repr_str

@PIPELINES.register_module
class RandomFlip(object):
    """Flip the image & bbox & mask.

    If the input dict contains the key "flip", then the flag will be used,
    otherwise it will be randomly decided by a ratio specified in the init
    method.

    Args:
        flip_ratio (float, optional): The flipping probability.
    """

    def __init__(self, flip_ratio=None, direction='horizontal'):
        self.flip_ratio = flip_ratio
        self.direction = direction
        if flip_ratio is not None:
            assert flip_ratio >= 0 and flip_ratio <= 1
        assert direction in ['horizontal', 'vertical']

    def bbox_flip(self, bboxes, img_shape, direction):
        """Flip bboxes horizontally.

        Args:
            bboxes(ndarray): shape (..., 4*k)
            img_shape(tuple): (height, width)
        """
        assert bboxes.shape[-1] % 4 == 0
        flipped = bboxes.copy()
        if direction == 'horizontal':
            w = img_shape[1]
            flipped[..., 0::4] = w - bboxes[..., 2::4] - 1
            flipped[..., 2::4] = w - bboxes[..., 0::4] - 1
        elif direction == 'vertical':
            h = img_shape[0]
            flipped[..., 1::4] = h - bboxes[..., 3::4] - 1
            flipped[..., 3::4] = h - bboxes[..., 1::4] - 1
        else:
            raise ValueError(
                'Invalid flipping direction "{}"'.format(direction))
        return flipped

    def __call__(self, results):
        if 'flip' not in results:
            flip = True if np.random.rand() < self.flip_ratio else False
            results['flip'] = flip
        if 'flip_direction' not in results:
            results['flip_direction'] = self.direction
        if results['flip']:
            # flip image
            results['img'] = mmcv.imflip(
                results['img'], direction=results['flip_direction'])
            # flip bboxes
            for key in results.get('bbox_fields', []):
                results[key] = self.bbox_flip(results[key],
                                              results['img_shape'],
                                              results['flip_direction'])
            # flip masks
            for key in results.get('mask_fields', []):
                results[key] = [
                    mmcv.imflip(mask, direction=results['flip_direction'])
                    for mask in results[key]
                ]
            # flip segs
            for key in results.get('seg_fields', []):
                results[key] = mmcv.imflip(
                    results[key], direction=results['flip_direction'])
        return results

    def __repr__(self):
        return self.__class__.__name__ + '(flip_ratio={})'.format(
            self.flip_ratio)

@PIPELINES.register_module
class Pad(object):
    """Pad the image & mask.

    There are two padding modes: (1) pad to a fixed size and (2) pad to the
    minimum size that is divisible by some number.

    Args:
        size (tuple, optional): Fixed padding size.
        size_divisor (int, optional): The divisor of padded size.
        pad_val (float, optional): Padding value, 0 by default.
    """

    def __init__(self, size=None, size_divisor=None, pad_val=0):
        self.size = size
        self.size_divisor = size_divisor
        self.pad_val = pad_val
        # only one of size and size_divisor should be valid
        assert size is not None or size_divisor is not None
        assert size is None or size_divisor is None

    def _pad_img(self, results):
        if results['concat']:
            img_raw, img_temp = results['img'][:, :, :3], results['img'][:, :, 3:]
            if self.size is not None:
                padded_img_raw = mmcv.impad(img_raw, self.size)
                padded_img_temp = mmcv.impad(img_temp, self.size)
            elif self.size_divisor is not None:
                padded_img_raw = mmcv.impad_to_multiple(
                    img_raw, self.size_divisor, pad_val=self.pad_val)
                padded_img_temp = mmcv.impad_to_multiple(
                    img_temp, self.size_divisor, pad_val=self.pad_val)
            results['img'] = np.concatenate([padded_img_raw, padded_img_temp], axis=2)
            results['pad_shape'] = padded_img_raw.shape
            results['pad_fixed_size'] = self.size
            results['pad_size_divisor'] = self.size_divisor
        else:
            if self.size is not None:
                padded_img = mmcv.impad(results['img'], self.size)
            elif self.size_divisor is not None:
                padded_img = mmcv.impad_to_multiple(
                    results['img'], self.size_divisor, pad_val=self.pad_val)
            results['img'] = padded_img
            results['pad_shape'] = padded_img.shape
            results['pad_fixed_size'] = self.size
            results['pad_size_divisor'] = self.size_divisor

    def _pad_masks(self, results):
        pad_shape = results['pad_shape'][:2]
        for key in results.get('mask_fields', []):
            padded_masks = [
                mmcv.impad(mask, pad_shape, pad_val=self.pad_val)
                for mask in results[key]
            ]
            if padded_masks:
                results[key] = np.stack(padded_masks, axis=0)
            else:
                results[key] = np.empty((0, ) + pad_shape, dtype=np.uint8)

    def _pad_seg(self, results):
        for key in results.get('seg_fields', []):
            results[key] = mmcv.impad(results[key], results['pad_shape'][:2])

    def __call__(self, results):
        self._pad_img(results)
        self._pad_masks(results)
        self._pad_seg(results)
        return results

    def __repr__(self):
        repr_str = self.__class__.__name__
        repr_str += '(size={}, size_divisor={}, pad_val={})'.format(
            self.size, self.size_divisor, self.pad_val)
        return repr_str

@PIPELINES.register_module
class Normalize(object):
    """Normalize the image.

    Args:
        mean (sequence): Mean values of 3 channels.
        std (sequence): Std values of 3 channels.
        to_rgb (bool): Whether to convert the image from BGR to RGB,
            default is true.
    """

    def __init__(self, mean, std, to_rgb=True):
        self.mean = np.array(mean, dtype=np.float32)
        self.std = np.array(std, dtype=np.float32)
        self.to_rgb = to_rgb

    def __call__(self, results):
        if results['concat']:
            img_raw, img_temp = results['img'][:, :, :3], results['img'][:, :, 3:]
            img_raw = mmcv.imnormalize(img_raw, self.mean, self.std,
                                       self.to_rgb)
            img_temp = mmcv.imnormalize(img_temp, self.mean, self.std,
                                        self.to_rgb)
            results['img'] = np.concatenate([img_raw, img_temp], axis=2)
            results['img_norm_cfg'] = dict(
                mean=self.mean, std=self.std, to_rgb=self.to_rgb)
        else:
            results['img'] = mmcv.imnormalize(results['img'], self.mean, self.std,
                                              self.to_rgb)
            results['img_norm_cfg'] = dict(
                mean=self.mean, std=self.std, to_rgb=self.to_rgb)
        return results

    def __repr__(self):
        repr_str = self.__class__.__name__
        repr_str += '(mean={}, std={}, to_rgb={})'.format(
            self.mean, self.std, self.to_rgb)
        return repr_str

@PIPELINES.register_module
class RandomCrop(object):
    """Random crop the image & bboxes & masks.

    Args:
        crop_size (tuple): Expected size after cropping, (h, w).
    """

    def __init__(self, crop_size):
        self.crop_size = crop_size

    def __call__(self, results):
        img = results['img']
        margin_h = max(img.shape[0] - self.crop_size[0], 0)
        margin_w = max(img.shape[1] - self.crop_size[1], 0)
        offset_h = np.random.randint(0, margin_h + 1)
        offset_w = np.random.randint(0, margin_w + 1)
        crop_y1, crop_y2 = offset_h, offset_h + self.crop_size[0]
        crop_x1, crop_x2 = offset_w, offset_w + self.crop_size[1]

        # crop the image
        img = img[crop_y1:crop_y2, crop_x1:crop_x2, :]
        img_shape = img.shape
        results['img'] = img
        results['img_shape'] = img_shape

        # crop bboxes accordingly and clip to the image boundary
        for key in results.get('bbox_fields', []):
            bbox_offset = np.array([offset_w, offset_h, offset_w, offset_h],
                                   dtype=np.float32)
            bboxes = results[key] - bbox_offset
            bboxes[:, 0::2] = np.clip(bboxes[:, 0::2], 0, img_shape[1] - 1)
            bboxes[:, 1::2] = np.clip(bboxes[:, 1::2], 0, img_shape[0] - 1)
            results[key] = bboxes

        # crop semantic seg
        for key in results.get('seg_fields', []):
            results[key] = results[key][crop_y1:crop_y2, crop_x1:crop_x2]

        # filter out the gt bboxes that are completely cropped
        if 'gt_bboxes' in results:
            gt_bboxes = results['gt_bboxes']
            valid_inds = (gt_bboxes[:, 2] > gt_bboxes[:, 0]) & (
                gt_bboxes[:, 3] > gt_bboxes[:, 1])
            # if no gt bbox remains after cropping, just skip this image
            if not np.any(valid_inds):
                return None
            results['gt_bboxes'] = gt_bboxes[valid_inds, :]
            if 'gt_labels' in results:
                results['gt_labels'] = results['gt_labels'][valid_inds]

            # filter and crop the masks
            if 'gt_masks' in results:
                valid_gt_masks = []
                for i in np.where(valid_inds)[0]:
                    gt_mask = results['gt_masks'][i][crop_y1:crop_y2,
                                                     crop_x1:crop_x2]
                    valid_gt_masks.append(gt_mask)
                results['gt_masks'] = valid_gt_masks

        return results

    def __repr__(self):
        return self.__class__.__name__ + '(crop_size={})'.format(
            self.crop_size)

@PIPELINES.register_module
class SegRescale(object):
    """Rescale semantic segmentation maps.

    Args:
        scale_factor (float): The scale factor of the final output.
    """

    def __init__(self, scale_factor=1):
        self.scale_factor = scale_factor

    def __call__(self, results):
        for key in results.get('seg_fields', []):
            if self.scale_factor != 1:
                results[key] = mmcv.imrescale(
                    results[key], self.scale_factor, interpolation='nearest')
        return results

    def __repr__(self):
        return self.__class__.__name__ + '(scale_factor={})'.format(
            self.scale_factor)

@PIPELINES.register_module
class PhotoMetricDistortion(object):
    """Apply photometric distortion to image sequentially, every transformation
    is applied with a probability of 0.5. The position of random contrast is in
    second or second to last.

    1. random brightness
    2. random contrast (mode 0)
    3. convert color from BGR to HSV
    4. random saturation
    5. random hue
    6. convert color from HSV to BGR
    7. random contrast (mode 1)
    8. randomly swap channels

    Args:
        brightness_delta (int): delta of brightness.
        contrast_range (tuple): range of contrast.
        saturation_range (tuple): range of saturation.
        hue_delta (int): delta of hue.
    """

    def __init__(self,
                 brightness_delta=32,
                 contrast_range=(0.5, 1.5),
                 saturation_range=(0.5, 1.5),
                 hue_delta=18):
        self.brightness_delta = brightness_delta
        self.contrast_lower, self.contrast_upper = contrast_range
        self.saturation_lower, self.saturation_upper = saturation_range
        self.hue_delta = hue_delta

    def __call__(self, results):
        img = results['img']
        # random brightness
        if random.randint(2):
            delta = random.uniform(-self.brightness_delta,
                                   self.brightness_delta)
            img += delta

        # mode == 0 --> do random contrast first
        # mode == 1 --> do random contrast last
        mode = random.randint(2)
        if mode == 1:
            if random.randint(2):
                alpha = random.uniform(self.contrast_lower,
                                       self.contrast_upper)
                img *= alpha

        # convert color from BGR to HSV
        img = mmcv.bgr2hsv(img)

        # random saturation
        if random.randint(2):
            img[..., 1] *= random.uniform(self.saturation_lower,
                                          self.saturation_upper)

        # random hue
        if random.randint(2):
            img[..., 0] += random.uniform(-self.hue_delta, self.hue_delta)
            img[..., 0][img[..., 0] > 360] -= 360
            img[..., 0][img[..., 0] < 0] += 360

        # convert color from HSV to BGR
        img = mmcv.hsv2bgr(img)

        # random contrast
        if mode == 0:
            if random.randint(2):
                alpha = random.uniform(self.contrast_lower,
                                       self.contrast_upper)
                img *= alpha

        # randomly swap channels
        if random.randint(2):
            img = img[..., random.permutation(3)]

        results['img'] = img
        return results

    def __repr__(self):
        repr_str = self.__class__.__name__
        repr_str += ('(brightness_delta={}, contrast_range={}, '
                     'saturation_range={}, hue_delta={})').format(
                         self.brightness_delta,
                         (self.contrast_lower, self.contrast_upper),
                         (self.saturation_lower, self.saturation_upper),
                         self.hue_delta)
        return repr_str

@PIPELINES.register_module
class Expand(object):
    """Randomly expand the image & bboxes.

    Randomly place the original image on a canvas of 'ratio' x original image
    size filled with mean values. The ratio is in the range of ratio_range.

    Args:
        mean (tuple): mean value of dataset.
        to_rgb (bool): whether to reverse the order of mean so that it
            matches the channel order of the image.
        ratio_range (tuple): range of expand ratio.
        seg_ignore_label (int): fill value for the expanded area of
            gt_semantic_seg; must be set if gt_semantic_seg is present.
        prob (float): probability of applying this transformation
    """

    def __init__(self,
                 mean=(0, 0, 0),
                 to_rgb=True,
                 ratio_range=(1, 4),
                 seg_ignore_label=None,
                 prob=0.5):
        self.to_rgb = to_rgb
        self.ratio_range = ratio_range
        if to_rgb:
            self.mean = mean[::-1]
        else:
            self.mean = mean
        self.min_ratio, self.max_ratio = ratio_range
        self.seg_ignore_label = seg_ignore_label
        self.prob = prob

    def __call__(self, results):
        if random.uniform(0, 1) > self.prob:
            return results

        img, boxes = [results[k] for k in ('img', 'gt_bboxes')]

        h, w, c = img.shape
        ratio = random.uniform(self.min_ratio, self.max_ratio)
        expand_img = np.full((int(h * ratio), int(w * ratio), c),
                             self.mean).astype(img.dtype)
        left = int(random.uniform(0, w * ratio - w))
        top = int(random.uniform(0, h * ratio - h))
        expand_img[top:top + h, left:left + w] = img
        # shift (x1, y1, x2, y2) boxes by the paste offset
        boxes = boxes + np.tile((left, top), 2).astype(boxes.dtype)

        results['img'] = expand_img
        results['gt_bboxes'] = boxes

        if 'gt_masks' in results:
            expand_gt_masks = []
            for mask in results['gt_masks']:
                expand_mask = np.full((int(h * ratio), int(w * ratio)),
                                      0).astype(mask.dtype)
                expand_mask[top:top + h, left:left + w] = mask
                expand_gt_masks.append(expand_mask)
            results['gt_masks'] = expand_gt_masks

        # not tested
        if 'gt_semantic_seg' in results:
            assert self.seg_ignore_label is not None
            gt_seg = results['gt_semantic_seg']
            expand_gt_seg = np.full((int(h * ratio), int(w * ratio)),
                                    self.seg_ignore_label).astype(gt_seg.dtype)
            expand_gt_seg[top:top + h, left:left + w] = gt_seg
            results['gt_semantic_seg'] = expand_gt_seg
        return results

    def __repr__(self):
        repr_str = self.__class__.__name__
        repr_str += '(mean={}, to_rgb={}, ratio_range={}, ' \
                    'seg_ignore_label={})'.format(
                        self.mean, self.to_rgb, self.ratio_range,
                        self.seg_ignore_label)
        return repr_str
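The geometry of Expand reduces to two operations: paste the image onto a larger mean-filled canvas, then shift every box by the paste offset. The minimal NumPy sketch below shows just those two steps. It assumes the ratio and offsets are passed in rather than sampled, and `expand_with_offsets` is a hypothetical helper name.

```python
import numpy as np


def expand_with_offsets(img, boxes, ratio, left, top, mean=(0, 0, 0)):
    """Paste `img` onto a mean-filled canvas `ratio` times larger.

    Unlike Expand, which samples ratio/left/top randomly, this sketch
    takes them as arguments so the result is deterministic.
    """
    h, w, c = img.shape
    canvas = np.full((int(h * ratio), int(w * ratio), c), mean,
                     dtype=img.dtype)
    canvas[top:top + h, left:left + w] = img
    # (x1, y1, x2, y2) boxes move by (left, top, left, top)
    shifted = boxes + np.tile((left, top), 2).astype(boxes.dtype)
    return canvas, shifted


img = np.full((2, 3, 3), 255, dtype=np.uint8)
boxes = np.array([[0, 0, 2, 1]], dtype=np.float32)
canvas, shifted = expand_with_offsets(img, boxes, ratio=2.0, left=1, top=1)
print(canvas.shape, shifted.tolist())  # (4, 6, 3) [[1.0, 1.0, 3.0, 2.0]]
```

Box sizes are unchanged; only their position moves. This is why Expand is usually paired with a crop such as MinIoURandomCrop, which produces the "zoom out then crop" effect.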

@PIPELINES.register_module
class MinIoURandomCrop(object):
    """Randomly crop the image & bboxes. The cropped patch must have at least
    a minimum IoU with the original bboxes; the IoU threshold is randomly
    selected from min_ious.

    Args:
        min_ious (tuple): minimum IoU threshold for all intersections with
            bounding boxes
        min_crop_size (float): minimum crop's size (i.e. h,w := a*h, a*w,
            where a >= min_crop_size).
    """

    def __init__(self, min_ious=(0.1, 0.3, 0.5, 0.7, 0.9), min_crop_size=0.3):
        self.min_ious = min_ious  # kept so that __repr__ can report it
        # 1: return ori img
        self.sample_mode = (1, *min_ious, 0)
        self.min_crop_size = min_crop_size

    def __call__(self, results):
        img, boxes, labels = [
            results[k] for k in ('img', 'gt_bboxes', 'gt_labels')
        ]
        h, w, c = img.shape
        while True:
            mode = random.choice(self.sample_mode)
            if mode == 1:
                return results

            min_iou = mode
            for _ in range(50):
                new_w = random.uniform(self.min_crop_size * w, w)
                new_h = random.uniform(self.min_crop_size * h, h)

                # h / w in [0.5, 2]
                if new_h / new_w < 0.5 or new_h / new_w > 2:
                    continue

                # sample the patch offset in [0, w - new_w) x [0, h - new_h)
                left = random.uniform(0, w - new_w)
                top = random.uniform(0, h - new_h)

                patch = np.array(
                    (int(left), int(top), int(left + new_w), int(top + new_h)))
                overlaps = bbox_overlaps(
                    patch.reshape(-1, 4), boxes.reshape(-1, 4)).reshape(-1)
                if overlaps.min() < min_iou:
                    continue

                # center of boxes should inside the crop img
                center = (boxes[:, :2] + boxes[:, 2:]) / 2
                mask = ((center[:, 0] > patch[0]) * (center[:, 1] > patch[1]) *
                        (center[:, 0] < patch[2]) * (center[:, 1] < patch[3]))
                if not mask.any():
                    continue
                boxes = boxes[mask]
                labels = labels[mask]

                # adjust boxes
                img = img[patch[1]:patch[3], patch[0]:patch[2]]
                boxes[:, 2:] = boxes[:, 2:].clip(max=patch[2:])
                boxes[:, :2] = boxes[:, :2].clip(min=patch[:2])
                boxes -= np.tile(patch[:2], 2)

                results['img'] = img
                results['gt_bboxes'] = boxes
                results['gt_labels'] = labels

                if 'gt_masks' in results:
                    valid_masks = [
                        results['gt_masks'][i] for i in range(len(mask))
                        if mask[i]
                    ]
                    results['gt_masks'] = [
                        gt_mask[patch[1]:patch[3], patch[0]:patch[2]]
                        for gt_mask in valid_masks
                    ]

                # not tested
                if 'gt_semantic_seg' in results:
                    results['gt_semantic_seg'] = results['gt_semantic_seg'][
                        patch[1]:patch[3], patch[0]:patch[2]]
                return results

    def __repr__(self):
        repr_str = self.__class__.__name__
        repr_str += '(min_ious={}, min_crop_size={})'.format(
            self.min_ious, self.min_crop_size)
        return repr_str
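Once a patch is accepted, the box bookkeeping in MinIoURandomCrop runs independently of the random search: boxes whose centre lies outside the patch are dropped, and the remaining boxes are clipped and moved into patch coordinates. The minimal NumPy sketch below covers only that step. It assumes a fixed patch and omits the IoU test against min_iou, which the transform performs with mmdet's bbox_overlaps. `crop_boxes` is a hypothetical helper.

```python
import numpy as np


def crop_boxes(boxes, labels, patch):
    """Keep boxes whose centre lies strictly inside `patch`, clip them to
    the patch and shift them into patch coordinates.

    boxes: (N, 4) float array of x1, y1, x2, y2; patch: int array x1, y1, x2, y2.
    """
    center = (boxes[:, :2] + boxes[:, 2:]) / 2
    mask = ((center[:, 0] > patch[0]) & (center[:, 1] > patch[1]) &
            (center[:, 0] < patch[2]) & (center[:, 1] < patch[3]))
    kept = boxes[mask]  # boolean indexing returns a copy
    kept[:, 2:] = kept[:, 2:].clip(max=patch[2:])
    kept[:, :2] = kept[:, :2].clip(min=patch[:2])
    kept -= np.tile(patch[:2], 2)
    return kept, labels[mask]


boxes = np.array([[10, 10, 30, 30], [60, 60, 90, 90]], dtype=np.float32)
labels = np.array([1, 2])
kept, kept_labels = crop_boxes(boxes, labels, np.array([5, 5, 25, 25]))
print(kept.tolist(), kept_labels.tolist())  # [[5.0, 5.0, 20.0, 20.0]] [1]
```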

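@PIPELINES.register_module
class Corrupt(object):
    """Apply an image corruption from the imagecorruptions package.

    Args:
        corruption (str): name of the corruption, passed to `corrupt` as
            corruption_name.
        severity (int): corruption severity level. Default: 1.
    """

    def __init__(self, corruption, severity=1):
        self.corruption = corruption
        self.severity = severity

    def __call__(self, results):
        # cast to uint8 before corrupting
        results['img'] = corrupt(
            results['img'].astype(np.uint8),
            corruption_name=self.corruption,
            severity=self.severity)
        return results

    def __repr__(self):
        repr_str = self.__class__.__name__
        repr_str += '(corruption={}, severity={})'.format(
            self.corruption, self.severity)
        return repr_str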

@PIPELINES.register_module
class Albu(object):

    def __init__(self,
                 transforms,
                 bbox_params=None,
                 keymap=None,
                 update_pad_shape=False,
                 skip_img_without_anno=False):
        """
        Adds custom transformations from Albumentations lib.
        Please, visit `https://albumentations.readthedocs.io`
        to get more information.

        Args:
            transforms (list): list of albu transformations
            bbox_params (dict): bbox_params for albumentation `Compose`
            keymap (dict): contains {'input key':'albumentation-style key'}
            update_pad_shape (bool): whether to set results['pad_shape'] to
                the image shape after augmentation
            skip_img_without_anno (bool): whether to skip the image
                if no ann left after aug
        """
        self.transforms = transforms
        self.filter_lost_elements = False
        self.update_pad_shape = update_pad_shape
        self.skip_img_without_anno = skip_img_without_anno

        # A simple workaround to remove masks without boxes
        if (isinstance(bbox_params, dict) and 'label_fields' in bbox_params
                and 'filter_lost_elements' in bbox_params):
            self.filter_lost_elements = True
            self.origin_label_fields = bbox_params['label_fields']
            bbox_params['label_fields'] = ['idx_mapper']
            del bbox_params['filter_lost_elements']

        self.bbox_params = (
            self.albu_builder(bbox_params) if bbox_params else None)
        self.aug = Compose([self.albu_builder(t) for t in self.transforms],
                           bbox_params=self.bbox_params)

        if not keymap:
            self.keymap_to_albu = {
                'img': 'image',
                'gt_masks': 'masks',
                'gt_bboxes': 'bboxes'
            }
        else:
            self.keymap_to_albu = keymap
        self.keymap_back = {v: k for k, v in self.keymap_to_albu.items()}

    def albu_builder(self, cfg):
        """Import a module from albumentations.
        Inherits some of `build_from_cfg` logic.

        Args:
            cfg (dict): Config dict. It should at least contain the key "type".

        Returns:
            obj: The constructed object.
        """
        assert isinstance(cfg, dict) and "type" in cfg
        args = cfg.copy()

        obj_type = args.pop("type")
        if mmcv.is_str(obj_type):
            obj_cls = getattr(albumentations, obj_type)
        elif inspect.isclass(obj_type):
            obj_cls = obj_type
        else:
            raise TypeError(
                'type must be a str or valid type, but got {}'.format(
                    type(obj_type)))

        # build nested transforms, e.g. the children of OneOf
        if 'transforms' in args:
            args['transforms'] = [
                self.albu_builder(transform)
                for transform in args['transforms']
            ]

        return obj_cls(**args)

    @staticmethod
    def mapper(d, keymap):
        """
        Dictionary mapper.
        Renames keys according to keymap provided.

        Args:
            d (dict): old dict
            keymap (dict): {'old_key':'new_key'}
        Returns:
            dict: new dict.
        """
        updated_dict = {}
        for k, v in d.items():
            new_k = keymap.get(k, k)
            updated_dict[new_k] = v
        return updated_dict

    def __call__(self, results):
        # dict to albumentations format
        results = self.mapper(results, self.keymap_to_albu)

        if 'bboxes' in results:
            # to list of boxes
            if isinstance(results['bboxes'], np.ndarray):
                results['bboxes'] = [x for x in results['bboxes']]
            # add pseudo-field for filtration
            if self.filter_lost_elements:
                results['idx_mapper'] = np.arange(len(results['bboxes']))

        results = self.aug(**results)

        if 'bboxes' in results:
            if isinstance(results['bboxes'], list):
                results['bboxes'] = np.array(
                    results['bboxes'], dtype=np.float32)
            # keep shape (0, 4) even when every box was dropped
            results['bboxes'] = results['bboxes'].reshape(-1, 4)

            # filter label_fields
            if self.filter_lost_elements:
                # albumentations filtered idx_mapper together with the boxes,
                # so it now holds the original indices of the kept boxes
                for label in self.origin_label_fields:
                    results[label] = np.array(
                        [results[label][i] for i in results['idx_mapper']])
                if 'masks' in results:
                    results['masks'] = [
                        results['masks'][i] for i in results['idx_mapper']
                    ]

                if (not len(results['idx_mapper'])
                        and self.skip_img_without_anno):
                    return None

        if 'gt_labels' in results:
            if isinstance(results['gt_labels'], list):
                results['gt_labels'] = np.array(results['gt_labels'])

        # back to the original format
        results = self.mapper(results, self.keymap_back)

        # update final shape
        if self.update_pad_shape:
            results['pad_shape'] = results['img'].shape

        return results

    def __repr__(self):
        repr_str = self.__class__.__name__
        repr_str += '(transforms={})'.format(self.transforms)
        return repr_str
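Albu relies on two tricks: renaming keys so that albumentations sees `image`/`bboxes`/`masks`, and passing a pseudo label field, idx_mapper, that albumentations drops together with any lost box. The standalone sketch below shows both, without albumentations installed. It pretends the library removed the middle box; `filter_labels` and the constant names are hypothetical.

```python
import numpy as np

KEYMAP_TO_ALBU = {'img': 'image', 'gt_masks': 'masks', 'gt_bboxes': 'bboxes'}
KEYMAP_BACK = {v: k for k, v in KEYMAP_TO_ALBU.items()}


def mapper(d, keymap):
    """Rename keys of `d` according to `keymap`; other keys are kept."""
    return {keymap.get(k, k): v for k, v in d.items()}


def filter_labels(labels, idx_mapper):
    """Keep the labels whose original box index survived augmentation."""
    return np.array([labels[i] for i in idx_mapper])


results = {'img': 'IMG', 'gt_bboxes': 'BOXES', 'gt_labels': [3, 7, 9]}
albu_input = mapper(results, KEYMAP_TO_ALBU)
print(sorted(albu_input))  # ['bboxes', 'gt_labels', 'image']

# Pretend albumentations dropped the middle box: idx_mapper was filtered
# along with the boxes, so it now holds the surviving indices 0 and 2.
idx_mapper = [0, 2]
print(filter_labels(results['gt_labels'], idx_mapper).tolist())  # [3, 9]
print(mapper(albu_input, KEYMAP_BACK) == results)  # True
```

If idx_mapper were rebuilt as np.arange(len(bboxes)) after augmentation, it would become [0, 1] and select labels 3 and 7, pairing the second surviving box with the wrong label.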

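The modified source of mmdet/datasets/pipelines/__init__.py is as follows:

__init__.py

This code imports and exports the Albu transform, which brings in the albumentations data augmentation library.

How to use it: add dict(type='Albu', transforms=[{"type": 'RandomRotate90'}]) to the pipeline; other albumentations transforms are added the same way.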

from .compose import Compose
from .formating import (Collect, ImageToTensor, ToDataContainer, ToTensor,
                        Transpose, to_tensor)
from .loading import LoadAnnotations, LoadImageFromFile, LoadProposals
from .test_aug import MultiScaleFlipAug
from .transforms import (Albu, Expand, MinIoURandomCrop, Normalize, Pad,
                         PhotoMetricDistortion, RandomCrop, RandomFlip, Resize,
                         SegRescale)

__all__ = [
    'Compose', 'to_tensor', 'ToTensor', 'ImageToTensor', 'ToDataContainer',
    'Transpose', 'Collect', 'LoadAnnotations', 'LoadImageFromFile',
    'LoadProposals', 'MultiScaleFlipAug', 'Resize', 'RandomFlip', 'Pad',
    'RandomCrop', 'Normalize', 'SegRescale', 'MinIoURandomCrop', 'Expand',
    'PhotoMetricDistortion', 'Albu'
]
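A class becomes usable as `dict(type=...)` in a config only after its module has been imported and the `@PIPELINES.register_module` decorator has run. Importing transforms in `__init__.py` triggers that import. The toy registry below is a simplified stand-in, not mmcv's actual Registry class, and shows the mechanism. `RandomRotate90Stub` is a hypothetical class.

```python
class Registry:
    """Toy registry: maps class names to classes and builds them from dicts."""

    def __init__(self, name):
        self.name = name
        self._module_dict = {}

    def register_module(self, cls):
        # Used as a bare decorator, like @PIPELINES.register_module above
        self._module_dict[cls.__name__] = cls
        return cls

    def build(self, cfg):
        args = dict(cfg)  # copy so the config dict is not mutated
        obj_type = args.pop('type')
        if obj_type not in self._module_dict:
            raise KeyError('{} is not registered in {}'.format(
                obj_type, self.name))
        return self._module_dict[obj_type](**args)


PIPELINES = Registry('pipeline')


@PIPELINES.register_module
class RandomRotate90Stub:
    def __init__(self, p=0.5):
        self.p = p


t = PIPELINES.build(dict(type='RandomRotate90Stub', p=1.0))
print(type(t).__name__, t.p)  # RandomRotate90Stub 1.0
```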

For example, the training pipeline with the Albu step added:

train_pipeline = [
    dict(type='LoadImageFromFile'),
    dict(type='LoadAnnotations', with_bbox=True),
    dict(type='Albu', transforms=[{"type": 'RandomRotate90'}]),  # data augmentation
    dict(type='Resize', img_scale=(1333, 800), keep_ratio=True),
    dict(type='RandomFlip', flip_ratio=0.5),
    dict(type='Normalize', **img_norm_cfg),
    dict(type='Pad', size_divisor=32),
    dict(type='DefaultFormatBundle'),
    dict(type='Collect', keys=['img', 'gt_bboxes', 'gt_labels']),
]
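Each entry of train_pipeline receives the dict returned by the previous entry. The simplified sketch below shows that chaining. It takes plain callables, whereas mmdet's own Compose also builds each config dict through the registry. Stopping early on None is what lets Albu with skip_img_without_anno=True discard a sample whose annotations were all filtered out. `add_scale` and `drop_if_no_boxes` are hypothetical transforms.

```python
from typing import Callable, List, Optional

Transform = Callable[[dict], Optional[dict]]


class Compose:
    """Run dict -> dict transforms in order; stop early if one returns None."""

    def __init__(self, transforms: List[Transform]):
        self.transforms = transforms

    def __call__(self, data: dict) -> Optional[dict]:
        for t in self.transforms:
            data = t(data)
            if data is None:  # e.g. Albu with skip_img_without_anno=True
                return None
        return data


def add_scale(results):
    results['scale'] = (1333, 800)
    return results


def drop_if_no_boxes(results):
    return results if results['gt_bboxes'] else None


pipeline = Compose([add_scale, drop_if_no_boxes])
print(pipeline({'gt_bboxes': [[0, 0, 1, 1]]})['scale'])  # (1333, 800)
print(pipeline({'gt_bboxes': []}))  # None
```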

The current v2 version of mmdet/datasets/pipelines/__init__.py:

from .auto_augment import (AutoAugment, BrightnessTransform, ColorTransform,
                           ContrastTransform, EqualizeTransform, Rotate, Shear,
                           Translate)
from .compose import Compose
from .formating import (Collect, DefaultFormatBundle, ImageToTensor,
                        ToDataContainer, ToTensor, Transpose, to_tensor)
from .instaboost import InstaBoost
from .loading import (LoadAnnotations, LoadImageFromFile, LoadImageFromWebcam,
                      LoadMultiChannelImageFromFiles, LoadProposals)
from .test_time_aug import MultiScaleFlipAug
from .transforms import (Albu, CutOut, Expand, MinIoURandomCrop, Normalize,
                         Pad, PhotoMetricDistortion, RandomCenterCropPad,
                         RandomCrop, RandomFlip, Resize, SegRescale)

__all__ = [
    'Compose', 'to_tensor', 'ToTensor', 'ImageToTensor', 'ToDataContainer',
    'Transpose', 'Collect', 'DefaultFormatBundle', 'LoadAnnotations',
    'LoadImageFromFile', 'LoadImageFromWebcam',
    'LoadMultiChannelImageFromFiles', 'LoadProposals', 'MultiScaleFlipAug',
    'Resize', 'RandomFlip', 'Pad', 'RandomCrop', 'Normalize', 'SegRescale',
    'MinIoURandomCrop', 'Expand', 'PhotoMetricDistortion', 'Albu',
    'InstaBoost', 'RandomCenterCropPad', 'AutoAugment', 'CutOut', 'Shear',
    'Rotate', 'ColorTransform', 'EqualizeTransform', 'BrightnessTransform',
    'ContrastTransform', 'Translate'
]

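2、MMDetection's built-in data augmentation

In early MMDetection releases, the source lived in mmdet/datasets/extra_aug.py. It provided image augmentation methods such as RandomCrop, brightness, contrast and saturation adjustment, and the ExtraAugmentation wrapper. In the pipeline-based versions shown above, the equivalent transforms (RandomCrop, PhotoMetricDistortion, Expand, MinIoURandomCrop, and others) are in mmdet/datasets/pipelines/transforms.py.

Augmentations are configured in train_pipeline or test_pipeline. Usually only training data is augmented; test data is not. For example, in the RandomFlip augmentation, flip_ratio is the probability of a random flip: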

train_pipeline = [
    dict(type='LoadImageFromFile'),
    dict(type='LoadAnnotations', with_bbox=True),
    dict(type='Resize', img_scale=(1333, 800), keep_ratio=True),
    dict(type='RandomFlip', flip_ratio=0.5),
    dict(type='Normalize', **img_norm_cfg),
    dict(type='Pad', size_divisor=32),
    dict(type='DefaultFormatBundle'),
    dict(type='Collect', keys=['img', 'gt_bboxes', 'gt_labels']),
]
test_pipeline = [
    dict(type='LoadImageFromFile'),
    dict(
        type='MultiScaleFlipAug',
        img_scale=(1333, 800),
        flip=False,
        transforms=[
            dict(type='Resize', keep_ratio=True),
            dict(type='RandomFlip'),
            dict(type='Normalize', **img_norm_cfg),
            dict(type='Pad', size_divisor=32),
            dict(type='ImageToTensor', keys=['img']),
            dict(type='Collect', keys=['img']),
        ])
]
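With flip_ratio=0.5, each training image is mirrored with 50% probability, and its boxes must be mirrored with it. The minimal NumPy sketch below shows a horizontal flip. It assumes the pixel-continuous convention x' = w - x; some older MMDetection versions subtracted an extra 1. `flip_bboxes_horizontal` and `random_flip` are hypothetical helpers, not mmdet's RandomFlip, although the sketch records the decision under a `flip` key as RandomFlip does.

```python
import numpy as np


def flip_bboxes_horizontal(bboxes: np.ndarray, img_w: float) -> np.ndarray:
    """Mirror (x1, y1, x2, y2) boxes about the image's vertical centre line."""
    flipped = bboxes.copy()
    flipped[..., 0::4] = img_w - bboxes[..., 2::4]  # new x1 from old x2
    flipped[..., 2::4] = img_w - bboxes[..., 0::4]  # new x2 from old x1
    return flipped


def random_flip(results, flip_ratio, rng):
    """Flip image and boxes with probability `flip_ratio` (mutates results)."""
    results['flip'] = bool(rng.random() < flip_ratio)
    if results['flip']:
        results['img'] = results['img'][:, ::-1]
        w = results['img'].shape[1]
        results['gt_bboxes'] = flip_bboxes_horizontal(results['gt_bboxes'], w)
    return results


rng = np.random.default_rng(0)
res = {'img': np.zeros((50, 100, 3)),
       'gt_bboxes': np.array([[10., 20., 30., 40.]])}
print(random_flip(res, flip_ratio=1.0, rng=rng)['gt_bboxes'].tolist())
# [[70.0, 20.0, 90.0, 40.0]]
```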

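3、Bbox augmentation

albumentations data augmentation (as above)

The related source is pre_pipeline in mmdet/datasets/custom.py, which sets up the per-sample fields that the transforms later fill in and update: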

def pre_pipeline(self, results):
    results['img_prefix'] = self.img_prefix
    results['seg_prefix'] = self.seg_prefix
    results['proposal_file'] = self.proposal_file
    results['bbox_fields'] = []
    results['mask_fields'] = []
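The bbox_fields list tells later transforms which keys hold boxes, so they can update all of them. These are the "* bbox_fields" entries in the per-operation list earlier. The minimal sketch below shows that protocol. `load_boxes` and `rescale_boxes` are hypothetical stand-ins for LoadAnnotations and Resize, simplified to a scalar scale factor.

```python
import numpy as np


def pre_pipeline(results):
    # Start with empty field registries, as the dataset's pre_pipeline does
    results['bbox_fields'] = []
    results['mask_fields'] = []
    return results


def load_boxes(results, boxes):
    # A loader stores the boxes and records the key it created
    results['gt_bboxes'] = boxes
    results['bbox_fields'].append('gt_bboxes')
    return results


def rescale_boxes(results, scale_factor):
    # A geometric transform updates every registered box field
    for key in results.get('bbox_fields', []):
        results[key] = results[key] * scale_factor
    return results


r = rescale_boxes(load_boxes(pre_pipeline({}), np.array([[1., 2., 3., 4.]])), 2.0)
print(r['bbox_fields'], r['gt_bboxes'].tolist())
# ['gt_bboxes'] [[2.0, 4.0, 6.0, 8.0]]
```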

Data augmentation added in the config file

In configs/_base_/datasets/coco_detection.py, modify the data augmentation in train_pipeline:

dataset_type = 'CocoDataset'
data_root = 'data/coco/'
img_norm_cfg = dict(
    mean=[123.675, 116.28, 103.53], std=[58.395, 57.12, 57.375], to_rgb=True)
# Add the albumentations augmentations here
albu_train_transforms = [
    dict(
        type='ShiftScaleRotate',
        shift_limit=0.0625,
        scale_limit=0.0,
        rotate_limit=0,
        interpolation=1,
        p=0.5),
    dict(
        type='RandomBrightnessContrast',
        brightness_limit=[0.1, 0.3],
        contrast_limit=[0.1, 0.3],
        p=0.2),
    dict(
        type='OneOf',
        transforms=[
            dict(
                type='RGBShift',
                r_shift_limit=10,
                g_shift_limit=10,
                b_shift_limit=10,
                p=1.0),
            dict(
                type='HueSaturationValue',
                hue_shift_limit=20,
                sat_shift_limit=30,
                val_shift_limit=20,
                p=1.0)
        ],
        p=0.1),
    dict(type='JpegCompression', quality_lower=85, quality_upper=95, p=0.2),
    dict(type='ChannelShuffle', p=0.1),
    dict(
        type='OneOf',
        transforms=[
            dict(type='Blur', blur_limit=3, p=1.0),
            dict(type='MedianBlur', blur_limit=3, p=1.0)
        ],
        p=0.1),
]
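In albu_train_transforms, the outer p of a OneOf block is the chance that the block fires at all. The children's p values (all 1.0 here) act as relative weights for picking exactly one of them. This follows albumentations' documented OneOf behaviour. The toy re-implementation below is for illustration only and is not albumentations' code.

```python
import random
from typing import Callable, List, Tuple


class OneOf:
    """Toy OneOf: with probability p, apply exactly one child transform,
    chosen with probability proportional to the child's own weight."""

    def __init__(self, transforms: List[Tuple[Callable, float]], p: float = 0.5):
        self.transforms = transforms
        self.p = p

    def __call__(self, x, rng: random.Random):
        if rng.random() >= self.p:
            return x  # the whole block is skipped
        fns = [fn for fn, _ in self.transforms]
        weights = [w for _, w in self.transforms]
        chosen = rng.choices(fns, weights=weights, k=1)[0]
        return chosen(x)


rng = random.Random(0)
block = OneOf([(lambda v: v + 1, 1.0), (lambda v: v - 1, 1.0)], p=0.1)
results = [block(0, rng) for _ in range(1000)]
# Number of inputs the block changed; its expected value is p * 1000
print(sum(r != 0 for r in results))
```

So with p=0.1, RGBShift and HueSaturationValue each end up applied to roughly 5% of the images.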

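train_pipeline = [
    dict(type='LoadImageFromFile'),
    dict(type='LoadAnnotations', with_bbox=True, with_mask=True),
    # Reportedly, changing img_scale here enables multi-scale training,
    # but it raised an error when actually run.
    dict(type='Resize', img_scale=(1333, 800), keep_ratio=True),
    dict(type='Pad', size_divisor=32),
    dict(
        type='Albu',
        transforms=albu_train_transforms,
        bbox_params=dict(
            type='BboxParams',
            format='pascal_voc',
            label_fields=['gt_labels'],
            min_visibility=0.0,
            filter_lost_elements=True),
        keymap={
            'img': 'image',
            'gt_masks': 'masks',
            'gt_bboxes': 'bboxes'
        }),
    # Normalize, then format and collect the keys the model needs,
    # as in the earlier pipeline (gt_masks is loaded above with with_mask=True)
    dict(type='Normalize', **img_norm_cfg),
    dict(type='DefaultFormatBundle'),
    dict(type='Collect', keys=['img', 'gt_bboxes', 'gt_labels', 'gt_masks']),
]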
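format='pascal_voc' matches how MMDetection stores gt_bboxes: absolute pixel corners (x_min, y_min, x_max, y_max). Albumentations' 'coco' format is (x_min, y_min, width, height) instead, so choosing the wrong format would silently distort every box. The small sketch below converts between the two; the helper names are hypothetical.

```python
import numpy as np


def coco_to_pascal_voc(boxes: np.ndarray) -> np.ndarray:
    """(x_min, y_min, w, h) -> (x_min, y_min, x_max, y_max)."""
    out = boxes.astype(np.float32)
    out[:, 2:] += out[:, :2]
    return out


def pascal_voc_to_coco(boxes: np.ndarray) -> np.ndarray:
    """(x_min, y_min, x_max, y_max) -> (x_min, y_min, w, h)."""
    out = boxes.astype(np.float32)
    out[:, 2:] -= out[:, :2]
    return out


coco = np.array([[10, 20, 30, 40]])
voc = coco_to_pascal_voc(coco)
print(voc.tolist())  # [[10.0, 20.0, 40.0, 60.0]]
```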

# Test pipeline
test_pipeline = [
    dict(type='LoadImageFromFile'),
    dict(
        type='MultiScaleFlipAug',
        # Multi-scale test-time augmentation (TTA) is configured here. Some
        # models do not support multi-scale TTA (e.g. cascade_mask_rcnn);
        # for those, an "unimplemented" error is raised.
        img_scale=(1333, 800),
        flip=False,
        transforms=[
            dict(type='Resize', keep_ratio=True),
            dict(type='RandomFlip'),
            dict(type='Normalize', **img_norm_cfg),
            dict(type='Pad', size_divisor=32),
            dict(type='ImageToTensor', keys=['img']),
            dict(type='Collect', keys=['img']),
        ])
]
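With img_scale=(1333, 800) and flip=False, the test pipeline runs each image once. Passing a list of scales and flip=True multiplies the number of passes. The sketch below shows that scale-by-flip expansion. `tta_variants` is a hypothetical helper that mirrors the combination MultiScaleFlipAug iterates over, not its actual code, and the (1666, 1000) scale is only an illustrative value.

```python
from itertools import product


def tta_variants(img_scale, flip):
    """List the (scale, flip) combinations a multi-scale/flip TTA would run."""
    scales = img_scale if isinstance(img_scale, list) else [img_scale]
    flips = [False, True] if flip else [False]
    return list(product(scales, flips))


print(tta_variants((1333, 800), flip=False))
# [((1333, 800), False)]
print(len(tta_variants([(1333, 800), (1666, 1000)], flip=True)))  # 4
```

Each extra variant costs another forward pass per image, so inference time grows linearly with the number of combinations.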

# Per-GPU batch size (samples_per_gpu), workers per GPU, and dataset paths.
# If the paths were set up as above, they do not need to be changed.
data = dict(
    samples_per_gpu=2,
    workers_per_gpu=2,
    train=dict(
        type=dataset_type,
        ann_file=data_root + 'annotations/instances_train2017.json',
        img_prefix=data_root + 'train2017/',
        pipeline=train_pipeline),
    val=dict(
        type=dataset_type,
        ann_file=data_root + 'annotations/instances_val2017.json',
        img_prefix=data_root + 'val2017/',
        pipeline=test_pipeline),
    test=dict(
        type=dataset_type,
        ann_file=data_root + 'annotations/instances_val2017.json',
        img_prefix=data_root + 'val2017/',
        pipeline=test_pipeline))
evaluation = dict(interval=1, metric='bbox')
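samples_per_gpu is a per-GPU batch size, so the batch seen by one optimizer step grows with the number of GPUs. workers_per_gpu only sets how many data-loading processes feed each GPU. The back-of-the-envelope sketch below uses made-up numbers (1000 images, 8 GPUs). Real samplers may pad the dataset, so treat the iteration count as an estimate.

```python
import math


def effective_batch_size(samples_per_gpu: int, num_gpus: int) -> int:
    # Each GPU loads samples_per_gpu images per iteration
    return samples_per_gpu * num_gpus


def approx_iters_per_epoch(num_images: int, samples_per_gpu: int,
                           num_gpus: int) -> int:
    # Approximate: samplers may pad, so this is an estimate
    return math.ceil(num_images / effective_batch_size(samples_per_gpu, num_gpus))


print(effective_batch_size(2, 8))  # 16
print(approx_iters_per_epoch(1000, 2, 8))  # 63
```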